Knowing how to detect factual errors with AI tools is essential to ensuring the reliability of generated content. As artificial intelligence becomes increasingly integrated into content creation, identifying inaccuracies is vital to maintaining trust and credibility. This guide explores practical methods and best practices for using AI to spot and correct factual errors, thereby improving the overall quality of published information.

By examining the common types of inaccuracies, visual indicators, and verification techniques, readers will gain valuable insights into maintaining factual integrity. Combining automated tools with human review processes offers a comprehensive approach to minimizing misinformation and improving content accuracy across various applications.

Understanding Factual Errors in AI-Generated Content

As artificial intelligence tools become increasingly integrated into content creation and information dissemination, recognizing the accuracy of AI-generated outputs is vital. Factual errors—incorrect or misleading information presented as truth—pose significant challenges to the reliability of AI-driven content. Identifying and understanding these errors is essential for users aiming to maintain credibility and ensure the integrity of information shared across various platforms.

Factual errors in AI-generated content refer to inaccuracies or false statements that can undermine trust, misinform audiences, and potentially lead to misunderstandings or incorrect decision-making. These errors often stem from limitations in the AI models, which may lack up-to-date data, contextual understanding, or the capacity to verify facts independently. Consequently, understanding the common types of inaccuracies and the scenarios in which they occur enables users to apply more effective review strategies and improve overall content quality.

Types of Factual Errors in AI-Generated Content

Factual inaccuracies generated by AI tools can take various forms, each impacting the reliability of the content differently. Recognizing these types helps in systematically evaluating the correctness of AI outputs.

- Incorrect Data or Statistics: AI models may produce outdated or false numerical information, especially when their training data is not current or lacks verification. For example, citing a population figure from five years ago as current can distort understanding.

- Misrepresented Historical Events: Inaccuracies may involve the incorrect sequencing of events, misattribution of actions, or fabricated details, which can distort historical understanding or context.

- Factual Inconsistencies in Scientific or Technical Information: AI-generated content may contain inaccuracies in scientific data, technical specifications, or medical facts, often due to reliance on unreliable sources or misinterpretation of complex data.

- Incorrect Geographical or Cultural References: Misplaced or wrong references to places, cultures, or languages can lead to misinformation, especially in articles involving regional details or cultural nuances.

Scenarios Prone to Factual Errors

Understanding the contexts where AI-generated content is most susceptible to inaccuracies allows users to be more vigilant during review processes. These scenarios often involve complex or dynamic information that requires up-to-date verification.

- Rapidly Evolving Fields: Areas such as technology, medicine, or current events are constantly changing. AI models trained on static datasets may lack recent developments, leading to outdated or incorrect statements.

- Limited or Biased Training Data: When AI models are trained on a narrow set of sources, especially those containing inaccuracies or biases, they are more likely to produce flawed content.

- Ambiguous or Vague Prompts: Vague queries can lead AI tools to generate generalizations or assumptions that are not factually grounded, increasing the risk of inaccuracies.

- Complex or Nuanced Topics: Subjects requiring deep contextual understanding, such as legal interpretations or cultural sensitivities, can be misrepresented by AI, resulting in errors.

By understanding these common types of factual errors and the scenarios in which they arise, users can more effectively scrutinize AI-generated content, cross-reference information, and maintain a high standard of accuracy in their informational outputs.

Identifying Indicators of Factual Errors

Recognizing potential inaccuracies in AI-generated content is essential for maintaining the integrity of information and making informed decisions. Since AI tools can sometimes produce plausible yet incorrect statements, developing criteria to identify these factual errors helps users evaluate content critically and effectively.

Distinguishing between factual information and fabricated or erroneous statements involves thorough analysis and awareness of common indicators of inaccuracies. Implementing systematic methods can greatly improve the accuracy of content validation, ensuring that reliance on AI outputs does not compromise factual correctness.

Criteria for Recognizing Potential Inaccuracies

Several key indicators can signal the presence of factual errors within AI-produced content. Recognizing these signs enables users to scrutinize information more effectively and identify statements that warrant further verification.

- Contradictions or inconsistencies: Conflicting statements within the same content, or statements that conflict with established knowledge, can indicate errors.

- Unusual or improbable claims: Statements that seem extraordinary, unlikely, or defy established facts should be approached with skepticism.

- Incorrect dates or timelines: Historical dates, chronological sequences, or event timelines that do not align with verified records often point to inaccuracies.

- Misused terminology or technical inaccuracies: Inappropriate or incorrect use of technical terms and concepts often signal a lack of factual precision.

- Lack of credible sources or references: Absence of verifiable citations or references for specific claims suggests potential inaccuracies.

Methods for Distinguishing Between Factual and Fabricated Information

Effective verification methods are critical in differentiating factual statements from fabricated or misleading content generated by AI systems. Employing a combination of strategies enhances confidence in the accuracy of the information.

- Cross-referencing with authoritative sources: Comparing AI-generated statements with reputable databases, scholarly articles, or official publications helps confirm accuracy.

- Utilizing fact-checking tools: Leveraging dedicated fact-checking platforms and AI-powered verification tools can quickly identify inaccuracies or fabricated claims.

- Analyzing the context and plausibility: Evaluating whether the information aligns logically within the given context and matches real-world knowledge.

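- Checking for specificity and detail: Factual statements tend to include precise data, dates, and references that can be checked, whereas fabricated content may be vague. Specificity alone is not proof, however, since AI tools can also fabricate precise-sounding figures and citations, so each specific detail still needs verification.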

- Assessing source transparency: Genuine information often cites credible sources or provides verifiable references, unlike fabricated content that may omit source details.

Visual Examples of Correct Versus False Statements

The table below contrasts accurate statements with false ones of the kind an AI tool might generate. Recognizing these differences assists users in quickly assessing the reliability of the content.

| Correct Statement | False Statement |
|---|---|
| Example: The Great Wall of China, counting sections from all dynasties, stretches over 13,000 miles, and its best-preserved sections were built during the Ming Dynasty (1368–1644). This statement aligns with historical records indicating the wall’s extensive length and the major construction period during the Ming Dynasty. | Example: The Great Wall of China is exactly 8,000 miles long and was constructed entirely during the Tang Dynasty (618–907). This statement contains inaccuracies regarding both the length and the construction era, which do not match verified historical data. |
| Example: Water boils at 100°C under standard atmospheric pressure at sea level. This is a scientifically accurate statement based on well-established physical laws. | Example: Water boils at 90°C at standard atmospheric pressure at sea level. This is incorrect: the boiling point varies with pressure, but at sea level it is approximately 100°C. |
| Example: Albert Einstein published the general theory of relativity in 1915. This statement accurately reflects historical facts about Einstein’s contributions and the publication date. | Example: Albert Einstein developed the theory of relativity in 1920. This is inaccurate; the special theory was published in 1905, and the general theory in 1915. |

Techniques for Verifying Facts with AI Tools

Ensuring the accuracy of AI-generated information is critical in maintaining credibility and trustworthiness. The process involves systematic steps to cross-reference data with reputable sources, employing both manual procedures and automated tools. This segment will explore effective methodologies for verifying facts, creating validation checklists, and utilizing AI-powered features to detect inconsistencies efficiently.

Accurate fact verification combines traditional research practices with modern AI capabilities. It requires a structured approach to compare outputs against authoritative references, leveraging AI tools that automate parts of this process. These techniques enhance the reliability of AI-generated content, reduce misinformation, and support responsible information dissemination.

Step-by-step Procedures to Cross-Reference AI-Generated Data with Credible Sources

Establishing a rigorous verification workflow involves multiple stages, each designed to minimize errors and ensure data authenticity. The following procedure provides a comprehensive guide:

- Identify Key Data Points: Extract specific facts from the AI output, such as dates, figures, names, or events. Highlight these data points for targeted verification.

- Determine Credible Sources: Select reputable references, including academic journals, official publications, government databases, and established news outlets, to serve as verification benchmarks.

- Perform Manual Cross-Checking: Search the identified data points in selected credible sources. Use official websites, digital archives, or bibliographic databases to confirm accuracy.

- Utilize AI Search and Verification Tools: Employ AI-powered search engines or verification platforms that can quickly compare facts against multiple sources. These tools often include features that highlight discrepancies or inconsistencies.

- Document Discrepancies and Confirmations: Record findings, noting where the AI-generated data aligns or diverges from authoritative sources. Maintain a log for future reference and quality assessment.

- Update and Correct AI Outputs: Based on verification results, update the AI-generated content to reflect accurate information, correcting any errors identified during the process.
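The procedure above can be sketched in a few lines of Python. This is a minimal illustration, not a production fact-checker: the `REFERENCE_FACTS` dictionary, the claim keys, and the `cross_check` function are hypothetical names introduced here, and the sketch assumes a person has already chosen the authoritative reference values. It extracts the first year mentioned in each AI-generated sentence, compares it with the reference, and keeps a log of confirmations and discrepancies.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical reference data. In practice these values would be taken
# from a credible source that a human reviewer has selected and checked.
REFERENCE_FACTS = {
    "general relativity published": 1915,
    "special relativity published": 1905,
}


@dataclass
class CheckResult:
    claim_key: str
    claimed: Optional[int]
    reference: Optional[int]
    status: str  # "confirmed", "discrepancy", or "unverified"


def extract_years(text: str) -> list[int]:
    """Return every four-digit year between 1000 and 2099 found in the text."""
    return [int(y) for y in re.findall(r"\b(1\d{3}|20\d{2})\b", text)]


def cross_check(claims: dict[str, str], reference: dict[str, int]) -> list[CheckResult]:
    """Compare the first year in each claim sentence with the reference value."""
    log = []
    for key, sentence in claims.items():
        years = extract_years(sentence)
        claimed = years[0] if years else None
        expected = reference.get(key)
        if claimed is None or expected is None:
            status = "unverified"  # nothing to compare, so a human must look
        elif claimed == expected:
            status = "confirmed"
        else:
            status = "discrepancy"
        log.append(CheckResult(key, claimed, expected, status))
    return log


ai_claims = {
    "general relativity published": "Einstein published the general theory in 1920.",
    "special relativity published": "The special theory appeared in 1905.",
}
for result in cross_check(ai_claims, REFERENCE_FACTS):
    print(result)
```

Items marked "unverified" are deliberately not treated as correct; they are the ones that most need manual cross-checking.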

Checklists for Validating Specific Types of Information

Creating standardized checklists facilitates the validation process for different categories of data, ensuring thoroughness and consistency. Below are tailored checklists for common information types:

Validating Dates:

- Confirm the date in official records or authoritative websites.

- Cross-reference multiple sources to verify the event or publication date.

- Ensure the date format aligns with regional standards for clarity.

- Check for updates or corrections issued by original sources.

Validating Statistics:

- Verify the statistical data against the original dataset or official reports.

- Check the methodology used to collect the data for consistency.

- Compare figures with recent publications or updates from reputable statistical agencies.

- Assess the context of statistics to avoid misinterpretation or misrepresentation.

Validating Historical Events:

- Consult established historical records or academic publications.

- Use multiple sources to confirm the date, location, and significance of the event.

- Verify the credibility of sources to avoid biased or outdated information.

- Assess timelines to ensure chronological accuracy.
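As one illustration of the date checklist, the sketch below normalizes dates written in different regional formats and then checks whether enough sources agree with the claimed date. The format list, the `dates_agree` function, and the two-source minimum are assumptions made for this example; the source strings stand in for dates copied by hand from real records.

```python
from datetime import date, datetime

# Assumed set of formats; extend it to cover the sources actually used.
KNOWN_FORMATS = ("%Y-%m-%d", "%d %B %Y", "%B %d, %Y")


def parse_date(text: str) -> date:
    """Parse a date string written in any of the known formats."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {text!r}")


def dates_agree(claimed: str, source_dates: list[str], min_sources: int = 2) -> bool:
    """Return True when at least min_sources sources match the claimed date."""
    target = parse_date(claimed)
    matches = sum(1 for s in source_dates if parse_date(s) == target)
    return matches >= min_sources


# The same event date written three ways still compares as equal.
print(dates_agree("July 20, 1969", ["1969-07-20", "20 July 1969"]))  # True
print(dates_agree("July 21, 1969", ["1969-07-20", "20 July 1969"]))  # False
```

Normalizing before comparing avoids false mismatches caused only by regional date formats.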

Using AI Tools to Automatically Flag Inconsistent Data

AI tools equipped with natural language processing and data validation capabilities can significantly streamline fact-checking processes by automatically identifying inconsistencies. Here’s how to leverage these features:

- Integrate Data Validation Features: Use AI platforms that include fact-checking modules designed to scan and compare AI-generated content with verified databases and sources.

- Configure Detection Parameters: Set thresholds for acceptable variances in data, such as numerical tolerances for statistics or date ranges, to refine automatic flagging accuracy.

- Implement Continuous Monitoring: Enable real-time analysis where AI tools monitor outputs and alert users when discrepancies are detected, allowing prompt review.

- Analyze Flagged Data: Review flagged items carefully, cross-referencing with credible sources to determine whether the inconsistency is a false positive or a genuine error.

- Refine Algorithms: Use feedback from manual reviews to improve the AI’s detection algorithms, reducing false positives and enhancing overall accuracy.

For example, an AI tool might automatically flag a statistical claim about global internet usage that deviates significantly from the latest reports from international telecommunications agencies, prompting further investigation and correction.
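The idea of a configurable numerical tolerance can be made concrete with a small sketch. The function name `flag_statistic` and the 5% default are assumptions chosen for illustration, and the numbers in the usage lines are made up rather than real statistics.

```python
def flag_statistic(claimed: float, reference: float, rel_tolerance: float = 0.05) -> bool:
    """Return True when the claim deviates from the reference by more than
    the allowed relative tolerance, meaning it should be reviewed."""
    if reference == 0:
        # Relative error is undefined for a zero reference, so flag any
        # non-zero claim.
        return claimed != 0
    relative_error = abs(claimed - reference) / abs(reference)
    return relative_error > rel_tolerance


# Illustrative values only.
print(flag_statistic(104.0, 100.0))  # False: within 5% of the reference
print(flag_statistic(110.0, 100.0))  # True: 10% off, flag for review
```

A tighter tolerance catches more errors but produces more false positives, which is why the flagged items still go to a reviewer.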

Leveraging AI for Error Detection

In the era of large-scale data and complex AI systems, leveraging AI itself to identify factual discrepancies has become an essential strategy. Utilizing advanced algorithms enables organizations to efficiently scrutinize vast datasets, pinpoint inconsistencies, and uphold data integrity. Integrating intelligent error detection mechanisms into AI workflows allows for continuous monitoring and real-time correction, significantly enhancing the reliability of AI-generated outputs.

Developing custom explanations further empowers stakeholders to comprehend the AI’s decision-making process, fostering transparency and trust.

By harnessing AI for error detection, organizations can automate the process of fact verification, reduce manual oversight, and expedite the identification of inaccuracies. This proactive approach ensures that AI systems not only generate insights but also maintain high standards of factual accuracy, which is crucial across domains such as healthcare, finance, and journalism.

Strategies for Utilizing AI Algorithms in Discrepancy Detection

To effectively detect discrepancies within large datasets, it is vital to implement sophisticated AI strategies that can analyze patterns, identify anomalies, and flag potential errors. These strategies include:

- Machine Learning-Based Anomaly Detection: Deploy algorithms trained on historical data to recognize normal patterns, thereby enabling the identification of unusual data points that may indicate errors or inconsistencies. For instance, in financial datasets, sudden spikes or drops in transaction amounts can be flagged for further review.

- Natural Language Processing (NLP) for Text Validation: Use NLP techniques to analyze textual data, identify conflicting statements, and verify facts against authoritative sources. This is particularly useful in fact-checking news articles or scientific publications.

- Pattern Recognition and Clustering: Implement clustering algorithms to group similar data points and detect outliers that do not conform to established patterns. This helps in uncovering data entry errors or fraudulent activities.
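To show what flagging unusual data points looks like in code, here is a minimal sketch. Note that it swaps the trained machine learning model described above for a much simpler statistical rule, the median absolute deviation (MAD), so that it runs with the standard library alone. The function name, the 3.5 threshold, and the transaction amounts are assumptions for illustration.

```python
from statistics import median


def mad_outliers(values: list[float], threshold: float = 3.5) -> list[int]:
    """Return the indexes of values whose modified z-score exceeds the threshold."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no measurable spread, so nothing can be scored
    # The constant 0.6745 rescales MAD so the score is comparable to a
    # standard z-score when the data is roughly normal.
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


# Made-up transaction amounts with one obvious spike at index 5.
amounts = [100, 102, 98, 101, 99, 5000, 103]
print(mad_outliers(amounts))  # [5]
```

The median-based rule is used here because a single extreme value distorts the mean and standard deviation far more than it distorts the median.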

Integration of Fact-Checking Modules into AI Workflows

Embedding dedicated fact-checking modules within AI systems streamlines the verification process and enhances accuracy. This integration involves several key components:

- Real-Time Fact Validation: Incorporate APIs that access trusted knowledge bases, such as encyclopedic databases or official statistics, to cross-verify statements dynamically as AI generates content.

- Automated Cross-Referencing: Use algorithms that automatically compare AI outputs against multiple verified sources, highlighting discrepancies for human review or flagging them for automatic correction.

- Feedback Loops for Continuous Improvement: Establish mechanisms where corrections are fed back into the system, allowing the AI to learn from past errors and refine its fact-checking capabilities over time.
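Automated cross-referencing against several sources can be sketched as a simple vote. Everything named here is hypothetical: the `cross_reference` function, the status strings, and the source labels are illustrative, and the source values are assumed to have been retrieved already from whatever knowledge bases a team trusts.

```python
from collections import Counter


def cross_reference(claim_value, source_values: dict, min_agreement: int = 2) -> str:
    """Compare a claimed value with the value most sources agree on.

    Returns "consistent", "flagged", or "needs_human_review".
    """
    if not source_values:
        return "needs_human_review"
    ranked = Counter(source_values.values()).most_common()
    consensus, votes = ranked[0]
    tied = len(ranked) > 1 and ranked[1][1] == votes
    if votes < min_agreement or tied:
        # The sources disagree among themselves, so no automatic verdict.
        return "needs_human_review"
    return "consistent" if claim_value == consensus else "flagged"


# Hypothetical lookups of the same fact in three sources.
sources = {"source_a": 1915, "source_b": 1915, "source_c": 1916}
print(cross_reference(1915, sources))  # consistent
print(cross_reference(1920, sources))  # flagged
```

Returning a separate status when sources disagree keeps the system from silently choosing a side.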

Development of Custom Explanations for AI-Driven Fact Evaluation

Creating tailored explanation modules enhances the interpretability of AI’s fact evaluation process. These modules clarify how the system arrived at a particular conclusion, which is vital for building trust and ensuring accountability. Developing custom explanations involves:

- Rule-Based Reasoning Frameworks: Implement explainability methods that outline the logical steps the AI took to verify a fact, such as referencing specific data points or authoritative sources.

- Visualization of Data Correlations: Generate visual explanations, like graphs or heatmaps, illustrating the relationships between data elements and how they support or contradict certain statements.

- Transparent Confidence Scoring: Provide clear, quantitative confidence scores with explanations about the factors influencing the AI’s certainty, enabling users to assess the reliability of the verification.

By developing these custom explanations, organizations can demystify AI decision-making, facilitate better human-AI collaboration, and ensure that fact-checking processes are both rigorous and comprehensible.
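A transparent confidence score can be as simple as a weighted share of supporting evidence, returned together with the reasons behind it. The sketch below is one possible design under stated assumptions: the `Evidence` class, the reliability weights, and the source labels are all hypothetical, and the weights would need to be set by people who know the sources.

```python
from dataclasses import dataclass


@dataclass
class Evidence:
    source: str
    supports: bool   # does this source support the claim?
    weight: float    # hypothetical reliability weight, higher means more trusted


def score_claim(evidence: list[Evidence]) -> tuple[float, list[str]]:
    """Return a confidence score in [0, 1] and one explanation line per source."""
    total = sum(e.weight for e in evidence)
    if total == 0:
        return 0.0, ["No weighted evidence available; score defaults to 0.0."]
    supporting = sum(e.weight for e in evidence if e.supports)
    reasons = [
        f"{e.source}: {'supports' if e.supports else 'contradicts'} (weight {e.weight:.2f})"
        for e in evidence
    ]
    return supporting / total, reasons


score, reasons = score_claim([
    Evidence("official report", True, 0.9),
    Evidence("unsourced blog post", False, 0.3),
])
print(f"confidence: {score:.2f}")
for line in reasons:
    print(" -", line)
```

Showing the per-source lines next to the number lets a reviewer see why the score is high or low instead of taking it on trust.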

Best Practices for Minimizing Factual Errors

Implementing effective strategies to minimize factual inaccuracies in AI-generated content is vital for ensuring reliability and trustworthiness. These best practices guide creators and developers in establishing robust workflows that prioritize accuracy and reduce misinformation. By adhering to proven procedures, organizations can enhance the credibility of their outputs and foster informed decision-making.

Establishing a disciplined approach involves a combination of careful content design, iterative refinements, and rigorous validation protocols. These practices focus on reducing errors from the initial stages of content creation through to final verification before publication. Consistent application of these guidelines ensures that AI-assisted content maintains high standards of factual correctness, thereby supporting ethical and responsible information dissemination.

Guidelines for Creating Explanations that Reduce Misinformation

Developing clear and factual explanations requires precise language, comprehensive sourcing, and logical coherence. It is essential to start with well-defined objectives, ensuring that the content clearly addresses the intended topic without ambiguity. Incorporate authoritative sources and cross-reference information to avoid incorporating outdated or incorrect data.

- Use reputable, verified sources such as peer-reviewed journals, government publications, and recognized industry reports.

- Maintain transparency about the sources of information to enable easy verification and validation.

- Write explanations in a structured manner, highlighting key points and supporting claims with concrete evidence.

- Include contextual background to help clarify complex topics and prevent misinterpretation.

- Regularly update content to reflect the latest data and developments in the relevant field.

Procedures to Enhance AI Outputs’ Factual Accuracy through Iterative Refinement

Iterative refinement involves continuously reviewing and improving AI-generated content to identify and correct inaccuracies. This process helps detect subtle errors and enhances the overall quality of the output by integrating feedback loops and human oversight.

- Generate initial content using AI tools, ensuring that prompts are clear and specific to reduce ambiguity.

- Conduct a preliminary review by subject matter experts to identify potential factual inaccuracies or ambiguities.

- Refine the prompts or input parameters based on feedback, aiming to clarify or specify the information further.

- Re-generate the content with the updated prompts and review again, focusing on areas flagged as problematic.

- Repeat this cycle until the output meets the desired level of factual reliability.

Implementing structured review and refinement loops creates a robust safeguard against misinformation, especially when combined with expert oversight and verification tools.
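The refinement cycle can be expressed as a short loop. In this sketch, `generate` and `review` are hypothetical callables supplied by the caller: `generate` stands in for whatever AI tool produces the draft, and `review` stands in for the expert review step and returns a list of issues found. The round limit is an assumption that prevents the loop from running forever.

```python
from typing import Callable


def refine(
    prompt: str,
    generate: Callable[[str], str],
    review: Callable[[str], list[str]],
    max_rounds: int = 3,
) -> tuple[str, bool]:
    """Regenerate content until review finds no issues or rounds run out.

    Returns the final draft and whether it passed review.
    """
    draft = generate(prompt)
    for _ in range(max_rounds):
        issues = review(draft)
        if not issues:
            return draft, True
        # Fold the reviewer feedback into a more specific prompt.
        prompt = prompt + "\nCorrect the following issues: " + "; ".join(issues)
        draft = generate(prompt)
    # The last regenerated draft has not been reviewed yet, so check it once more.
    return draft, not review(draft)


# Stand-in functions so the sketch runs without any AI service.
def fake_generate(prompt: str) -> str:
    return "1915" if "Correct" in prompt else "1920"


def fake_review(draft: str) -> list[str]:
    return [] if draft == "1915" else ["publication year does not match the reference"]


print(refine("When was general relativity published?", fake_generate, fake_review))
```

Returning the pass or fail flag alongside the draft makes it explicit when the cycle ended without reaching the desired reliability.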

Validation Protocols Before Publishing AI-Assisted Content

Before releasing AI-generated content, it is essential to verify its factual correctness through formal validation protocols. These procedures help prevent the dissemination of misinformation and ensure that the content aligns with established facts and standards.

- Cross-verify key facts against multiple authoritative sources to confirm accuracy.

- Employ fact-checking tools and AI validation platforms that specialize in detecting inaccuracies and inconsistencies.

- Consult domain experts to review content for technical accuracy and contextual integrity.

- Use checklists that include verification of data sources, date accuracy, and referencing consistency.

- Document validation steps and outcomes to maintain accountability and facilitate future audits.

Adhering to these validation protocols fosters confidence in AI-assisted content, minimizes the risk of spreading false information, and upholds the integrity of published material.
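One way to enforce such a protocol is a small record that blocks publication until every required check has passed and that keeps a timestamped log for later audits. The class name, the check names, and the log format below are assumptions made for this sketch.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical names for the checks a team requires before publishing.
REQUIRED_CHECKS = ("sources_cross_verified", "dates_checked", "expert_reviewed")


@dataclass
class ValidationRecord:
    checks: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

    def record(self, check: str, passed: bool, note: str = "") -> None:
        """Store the outcome of one check and append a timestamped log line."""
        self.checks[check] = passed
        stamp = datetime.now(timezone.utc).isoformat()
        outcome = "pass" if passed else "fail"
        self.log.append(f"{stamp} {check}: {outcome} {note}".strip())

    def ready_to_publish(self, required=REQUIRED_CHECKS) -> bool:
        """True only when every required check was recorded as passed."""
        return all(self.checks.get(name, False) for name in required)


record = ValidationRecord()
record.record("sources_cross_verified", True, "two independent sources")
record.record("dates_checked", True)
print(record.ready_to_publish())  # False: expert review is still missing
record.record("expert_reviewed", True)
print(record.ready_to_publish())  # True
```

A check that was never recorded counts as failed, so skipping a step cannot accidentally clear content for release.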

Limitations of AI Tools in Fact-Checking

While AI tools have significantly advanced the process of identifying and correcting factual inaccuracies, they are not without their inherent limitations. Recognizing these challenges is essential for effectively integrating AI into fact-checking workflows and ensuring content accuracy. AI-based error detection systems operate within certain constraints that can lead to misinterpretations or overlooked errors, emphasizing the importance of human oversight in the fact-checking process.

Understanding the limitations of AI tools involves examining their technical, contextual, and bias-related challenges. These limitations highlight the need for a balanced approach that combines automated capabilities with human judgment to maintain high standards of factual integrity.

Inherent Challenges and Potential Biases in AI-Based Error Detection

AI models rely heavily on the data they are trained on, which introduces several intrinsic challenges. One primary concern is the presence of biases in training datasets, which can lead to skewed or incomplete fact-checking outcomes. For instance, if an AI system is trained predominantly on sources from a specific geographic region or ideological perspective, it may inadvertently favor certain viewpoints, thereby missing errors or misclassifying facts from underrepresented perspectives.

Furthermore, AI tools often struggle with understanding nuance, context, and the subtleties of language. They can misinterpret idiomatic expressions, sarcasm, or cultural references, leading to false positives or negatives. For example, a statement containing irony might be flagged as false, whereas it is actually a rhetorical device meant to convey humor or critique.

“AI systems are only as good as the data they learn from. Biases, gaps, and inaccuracies in training data directly impact their performance in fact-checking.”

Situations Where AI May Misidentify or Overlook Errors

Despite their sophistication, AI tools can sometimes misidentify or completely overlook factual errors due to limitations in their design or data. For example, an AI may incorrectly flag a statement as false because it relies on outdated information, failing to recognize recent corrections or updates. Conversely, it might overlook nuanced inaccuracies that require contextual understanding, such as subtle misrepresentations in complex scientific or legal content.

Another situation involves ambiguous language or incomplete information, which can confuse AI algorithms. For instance, if a news article contains a partially accurate statement with some minor inaccuracies, the AI may either dismiss the errors or incorrectly flag the entire statement as false. Additionally, AI systems might struggle with recognizing emerging facts or events that are not yet documented in their knowledge bases, leading to missed errors in rapidly evolving scenarios.

Methods to Supplement AI Tools with Human Review Processes

To mitigate the limitations of AI-based fact-checking, incorporating human review is essential. Human experts bring contextual knowledge, cultural sensitivity, and critical thinking skills that AI systems currently cannot replicate fully. An effective approach involves using AI to flag potential errors or inconsistencies, which are then scrutinized and verified by trained fact-checkers.

Implementing a layered review process enhances accuracy and accountability. For example, AI can serve as an initial filter to identify suspicious statements, followed by detailed human analysis to confirm or disprove these findings. This hybrid approach ensures that errors stemming from AI limitations are caught before publication or dissemination.

Training human reviewers to understand the strengths and weaknesses of AI outputs further improves overall fact-checking efficacy. Regular calibration sessions, combined with ongoing AI system updates based on human feedback, create a robust ecosystem where technology and human judgment complement each other effectively.
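The layered review described above can be sketched as a triage step that routes statements to people. This example assumes an automated checker has already attached a suspicion score to each statement; the `Flagged` class, the score range, and the 0.5 threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Flagged:
    statement: str
    suspicion: float  # hypothetical score in [0, 1] from an automated checker


def triage(items: list[Flagged], review_threshold: float = 0.5):
    """Split statements into a prioritized human-review queue and a
    lower-risk group reserved for occasional spot checks."""
    needs_review = sorted(
        (i for i in items if i.suspicion >= review_threshold),
        key=lambda i: i.suspicion,
        reverse=True,  # most suspicious first
    )
    spot_check = [i for i in items if i.suspicion < review_threshold]
    return needs_review, spot_check


queue, low_risk = triage([
    Flagged("claim A", 0.2),
    Flagged("claim B", 0.9),
    Flagged("claim C", 0.6),
])
print([i.statement for i in queue])     # ['claim B', 'claim C']
print([i.statement for i in low_risk])  # ['claim A']
```

Keeping the low-scoring group for spot checks, rather than passing it automatically, gives reviewers a way to notice errors the automated filter overlooks.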

Case Studies and Practical Applications

Real-world implementations of AI tools in identifying and correcting factual errors demonstrate their practical value and limitations. Analyzing these case studies provides insights into effective strategies, prevalent challenges, and the comparative effectiveness of different AI solutions in maintaining content accuracy across various industries and contexts.

These case studies highlight how organizations leverage AI for fact-checking, the techniques employed, and the outcomes achieved, offering a comprehensive understanding of AI’s role in enhancing informational integrity in diverse applications.

Successful Factual Error Detection in Real-World Projects

Numerous organizations have adopted AI-driven fact-checking systems to improve the accuracy of published content, especially in journalism, scientific publishing, and legal information dissemination. Notable examples include news agencies utilizing AI to verify sources rapidly, and academic publishers deploying AI tools to detect discrepancies between reported data and original research findings.

For instance, a leading fact-checking organization integrated an AI platform that cross-referenced claims made in news articles with authoritative databases such as government records and scientific repositories. This system flagged potential inaccuracies, allowing human reviewers to focus on questionable content, significantly reducing error detection time and increasing reliability.

Similarly, in the pharmaceutical industry, AI tools analyze clinical trial reports and published research to identify discrepancies or outdated information, thus preventing the dissemination of incorrect data about drug efficacy or safety.

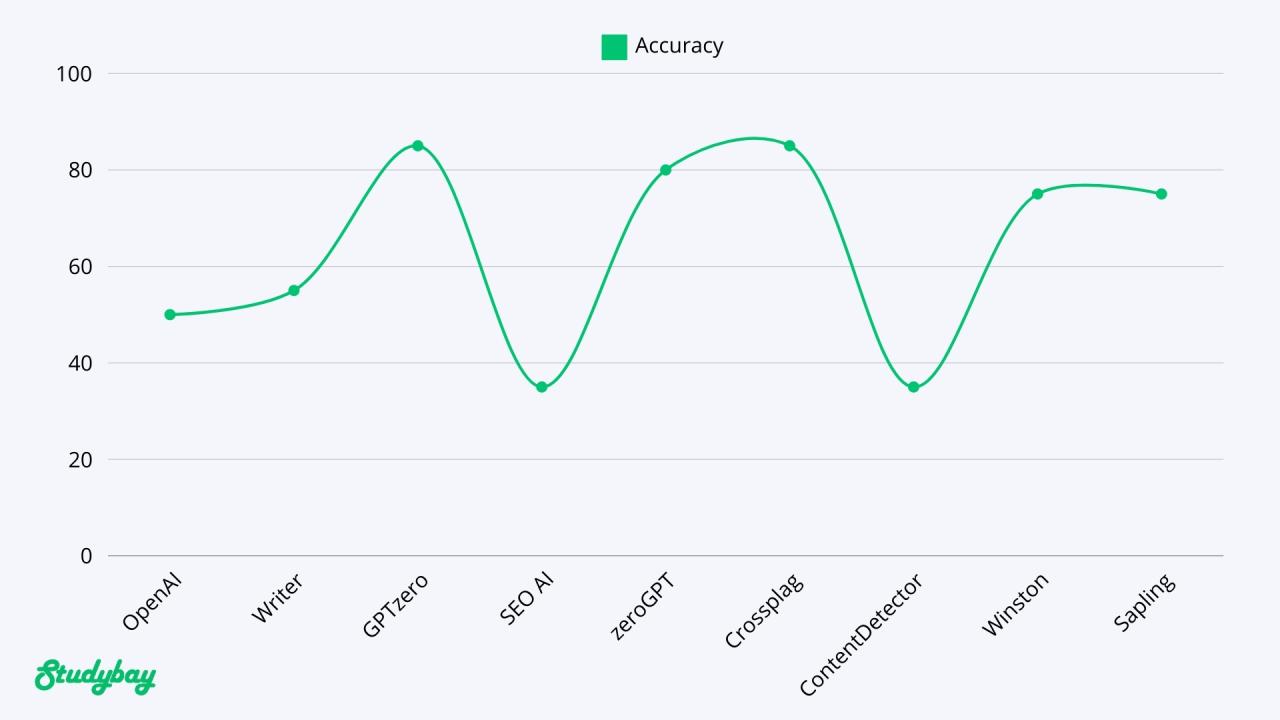

Comparison of AI Tools’ Effectiveness in Identifying Inaccuracies

Different AI tools employ varied algorithms, data sources, and verification techniques. Understanding their comparative effectiveness helps organizations select suitable solutions for their specific needs.

Effectiveness is often measured by precision, recall, and the ability to detect subtle or context-dependent errors.
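Precision is the share of flagged statements that really were errors, and recall is the share of real errors that were flagged. The short sketch below computes both from two sets of statement IDs; the function name and the IDs are illustrative.

```python
def precision_recall(flagged: set, actual_errors: set) -> tuple[float, float]:
    """Compute precision and recall for an error-detection tool.

    flagged: IDs of statements the tool marked as errors.
    actual_errors: IDs of statements human reviewers confirmed as errors.
    """
    true_positives = len(flagged & actual_errors)
    precision = true_positives / len(flagged) if flagged else 0.0
    recall = true_positives / len(actual_errors) if actual_errors else 0.0
    return precision, recall


# The tool flagged four statements; reviewers confirmed three real errors,
# two of which the tool had caught.
precision, recall = precision_recall({1, 2, 3, 4}, {2, 3, 5})
print(f"precision={precision:.2f} recall={recall:.2f}")  # precision=0.50 recall=0.67
```

A tool with high precision but low recall wastes little reviewer time yet misses many errors, so both numbers are needed to compare tools fairly.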

The following table summarizes some of the most prominent AI tools used in factual error detection, their techniques, and observed outcomes:

| AI Tool | Technique | Application Context | Effectiveness | Outcome Summary |
|---|---|---|---|---|
| ClaimBuster | Natural Language Processing (NLP) + Database Cross-Referencing | News Fact-Checking | High precision in verifying political claims | Reduced false claims published; increased public trust |
| Full Fact AI | Machine Learning + Semantic Analysis | Journalism and Media Verification | Moderate recall, especially in complex statements | Improved detection of subtle inaccuracies, though some false negatives persist |
| ClaimCheck | Neural Networks + Data Validation Algorithms | Scientific Literature | High accuracy in verifying data consistency | Enhanced speed of fact-checking, with some limitations on very recent data |
| Google Fact Check Tools | Automated Content Analysis + External Database Linking | General Content Verification | Variable effectiveness depending on data source quality | Effective for well-documented facts; less so for emerging or less-covered topics |

These case studies underscore that while AI tools can significantly streamline the verification process, their effectiveness varies depending on the context, data quality, and complexity of the content. Combining AI with human oversight remains critical for maximizing accuracy.

Future Trends in AI-Driven Fact Validation

The landscape of AI-assisted fact validation is rapidly evolving, driven by technological innovations and an increasing demand for accurate information dissemination. As AI tools become more sophisticated, their capacity to identify, verify, and rectify factual errors will expand significantly. This ongoing progression promises to enhance the reliability of AI-generated content and establish new standards for fact-checking methodologies across various industries.

Emerging technologies and methodologies are poised to shape the future of AI-driven fact validation, emphasizing precision, transparency, and ethical deployment. These advancements will not only improve error detection capabilities but also influence the roles AI systems play in ensuring information integrity while raising important considerations about responsible use and ethical boundaries in deploying these powerful tools.

Emerging Technologies and Methodologies for Improving Factual Accuracy

The quest for higher factual accuracy in AI-generated content is spurring new technologies and approaches. These include:

- Hybrid Human-AI Systems: Combining human expertise with AI automation to leverage the strengths of both. Such systems enable AI models to flag potential errors for human review, ensuring nuanced judgment and context-aware verification.

- Explainable AI (XAI): Developing models that can articulate how they arrive at specific conclusions about factual correctness. Transparency in AI reasoning builds trust and facilitates targeted error correction strategies.

- Enhanced Data Curation and Knowledge Bases: Building comprehensive, regularly updated knowledge repositories that AI systems can consult for fact-verification, reducing reliance on outdated or incorrect data sources.

- Natural Language Processing (NLP) Advancements: Utilizing cutting-edge NLP techniques to better understand context, detect subtle inaccuracies, and discern the intent behind statements, thereby improving factual validation accuracy.

- Integration of Multi-Modal Data: Combining textual data with images, videos, and structured data to cross-validate facts, providing a multi-layered approach to error detection.

Projections on AI Evolution in Error Detection Roles

Projections suggest that AI systems will become increasingly autonomous and precise in error detection over the coming decades. The trajectory indicates a shift from reactive fact-checking—identifying errors after publication—to proactive validation that prevents misinformation from spreading. This evolution will involve:

- Real-Time Verification: AI tools capable of verifying facts instantaneously during content creation, enabling writers and publishers to correct inaccuracies before dissemination.

- Context-Aware Error Detection: Systems that understand nuanced contexts and recognize subtle discrepancies, such as historical inaccuracies or scientific misstatements.

- Adaptive Learning Models: AI that continuously learns from new data, user feedback, and correction patterns to refine its accuracy and reduce false positives/negatives.

- Cross-Platform Validation: AI frameworks that can aggregate and verify information across multiple sources and platforms, providing a holistic and corroborated view of facts.

Anticipated applications include AI systems that assist journalists with fact-checking during live reporting and search engines that prioritize verified information, thereby improving public access to trustworthy content.

Ethical Considerations and Responsibilities in Deploying AI for Fact-Checking

The deployment of AI in fact validation raises critical ethical questions that must be addressed to ensure responsible use. These include:

- Bias and Fairness: Ensuring AI models do not perpetuate biases present in training data, which could lead to unfair or skewed fact verification outcomes. Developers should prioritize diverse and representative datasets.

- Transparency and Accountability: Making AI decision-making processes understandable and establishing accountability frameworks for errors or misjudgments, particularly when AI systems influence public opinion or policy.

- Privacy and Data Security: Protecting sensitive information involved in verification processes, especially when handling classified or personal data, to prevent misuse or breaches.

- Preventing Misuse: Safeguarding AI tools from being exploited for malicious purposes, such as spreading disinformation or manipulating public discourse.

- Maintaining Human Oversight: Recognizing that AI should augment, not replace, human judgment, particularly in complex or high-stakes contexts where ethical implications are profound.

Incorporating ethical principles into AI development and deployment ensures that fact validation tools contribute positively to information ecosystems, fostering trust, fairness, and accountability.

Final Thoughts

In summary, mastering how to detect factual errors with AI tools empowers content creators and reviewers to uphold high standards of accuracy. Combining verification strategies, leveraging AI capabilities, and recognizing the limitations of these tools ensures a balanced approach to fact-checking. As technology continues to evolve, staying informed about emerging trends and best practices will be crucial in maintaining the integrity of AI-generated content.