Exploring how to review abstracts quickly with AI reveals a transformative approach to streamlining research evaluation. As the volume of academic and professional submissions continues to grow, manual review becomes time-consuming and labor-intensive. Integrating artificial intelligence tools can improve efficiency while maintaining accuracy, enabling reviewers to assess large numbers of abstracts swiftly and effectively.

This guide covers techniques for applying AI technologies, selecting suitable tools, setting up systems, and interpreting outputs to optimize the abstract review process. By adopting these methods, organizations and researchers can reduce review time, improve consistency, and reserve human expertise for where it is most needed.

Overview of AI in Abstract Review Processes

Artificial intelligence (AI) has changed many aspects of academic and professional research workflows, including the review and assessment of research abstracts. Machine learning models can filter submissions efficiently, enabling reviewers to focus on the most promising ones. Integrated into abstract review, AI tools shorten the evaluation timeline and apply the same criteria to every submission, which improves consistency across assessments.

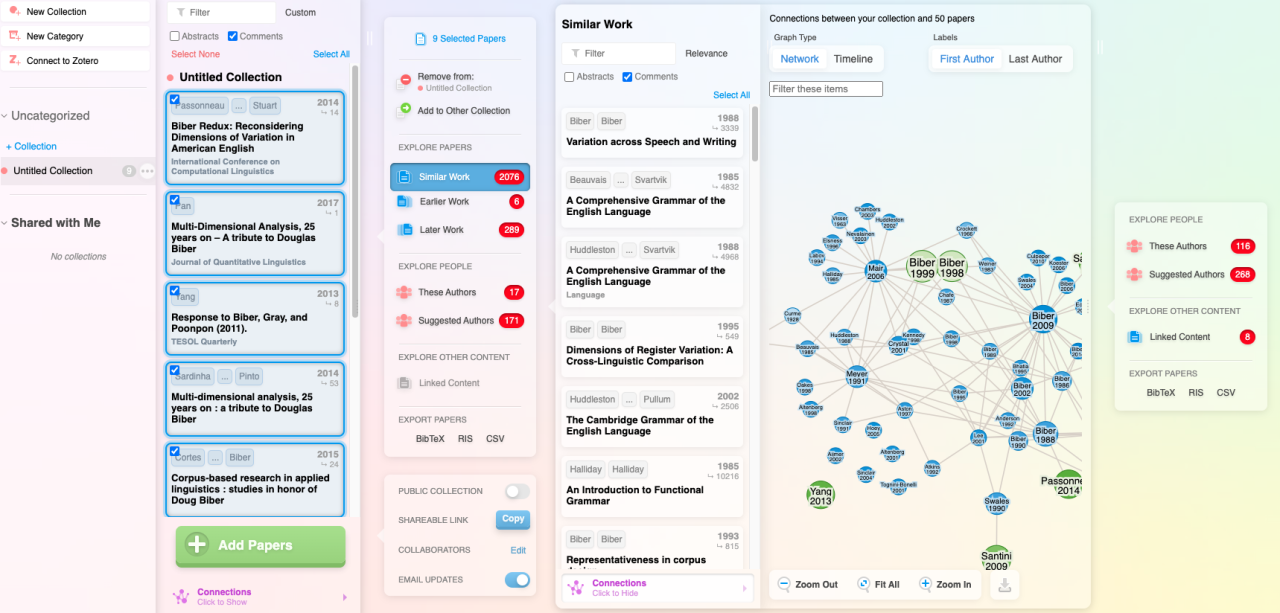

Current AI technologies for abstract analysis include natural language processing (NLP), machine learning classifiers, and semantic analysis. These tools model the context of a text, identify key themes, and estimate how relevant an abstract is to specific criteria or research domains. In academic settings, research platforms such as Elsevier’s SciVal and Clarivate’s EndNote have been adding AI-assisted features, although they are general research tools rather than dedicated submission-screening systems.

Similarly, professional organizations increasingly adopt AI-driven solutions to manage large volumes of abstracts efficiently, ensuring a thorough yet expeditious review process.

AI Technologies in Academic and Professional Abstract Analysis

Various AI technologies have been developed and refined to optimize the abstract review process, making them invaluable for managing the ever-growing volume of research submissions worldwide. These technologies include:

| Technology | Functionality | Examples and Applications |
|---|---|---|
| Natural Language Processing (NLP) | Analyzes textual content to extract meaning, identify keywords, and understand context. | Semantic similarity assessment, topic modeling, and keyword extraction to categorize abstracts efficiently. |
| Machine Learning Classifiers | Learn from labeled data to distinguish relevant abstracts from irrelevant ones based on predefined criteria. | Spam filtering, relevance ranking, and quality scoring in conference submissions and journal reviews. |
| Semantic Analysis Tools | Assess the meaning and relationships within the text to identify core themes and research significance. | Automated tagging of abstracts by research domain, methodology, or impact level. |
| Automated Summarization Algorithms | Generate concise summaries of abstracts to facilitate quick understanding. | Giving reviewers quick overviews, especially in large-scale review processes. |
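To make the summarization row concrete, here is a minimal sketch of frequency-based extractive summarization: each sentence is scored by the average frequency of its non-stopword terms, and the top-scoring sentences are kept in their original order. This classical technique is a stand-in chosen for illustration, not how any particular product works; the stopword list, the naive sentence splitter, and the `summarize` helper are assumptions.

```python
import re
from collections import Counter

# Illustrative stopword list (an assumption; real systems use larger, domain-aware lists).
STOPWORDS = {"the", "a", "an", "of", "and", "or", "in", "on", "to", "for",
             "with", "we", "is", "are", "was", "were", "this", "that", "by", "as"}


def split_sentences(text: str) -> list[str]:
    # Naive splitter: break after '.', '!' or '?' followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]


def tokenize(text: str) -> list[str]:
    # Lowercase alphanumeric tokens with stopwords removed.
    return [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS]


def summarize(abstract: str, max_sentences: int = 2) -> str:
    """Frequency-based extractive summary: keep the highest-scoring sentences."""
    sentences = split_sentences(abstract)
    freq = Counter(tokenize(abstract))

    def score(sentence: str) -> float:
        words = tokenize(sentence)
        # Average rather than total frequency, so long sentences are not favored by default.
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])  # restore original sentence order
    return " ".join(sentences[i] for i in keep)
```

Extractive methods only reuse the author's own sentences, which keeps the summary faithful to the abstract; abstractive models can produce smoother text but need the verification step described later in this guide.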

“Integrating AI tools into abstract review workflows enhances speed, consistency, and objectivity, allowing researchers and reviewers to focus on the substantive quality of research rather than administrative tasks.”

Techniques for Utilizing AI to Speed Up Abstract Evaluation

Employing AI in the abstract review process can substantially reduce the time required for initial screening, and with human validation it can do so without sacrificing accuracy. Systematic methods for inputting abstracts and using AI-generated insights let reviewers focus their efforts on the most promising submissions. This section details practical steps for integrating AI into abstract evaluation workflows efficiently and without compromising quality.

By following structured techniques for input and analysis, conference organizers, journal editors, and research committees can streamline their review procedures. These methods include preparing abstracts for AI processing, using AI-generated summaries or keywords for quick relevance assessments, and organizing comparative workflows that highlight the efficiency gains over traditional manual review.

Step-by-Step Methods for Inputting Abstracts into AI Systems for Quick Initial Screening

Efficiently feeding abstracts into AI tools is crucial for rapid evaluation. The process begins with standardizing the formatting of abstracts to ensure consistency and compatibility across AI platforms. Typically, abstracts are compiled into a structured database or spreadsheet in which each entry includes fields such as title, authors, keywords, and the abstract text itself.

- Preprocess the data by removing extraneous formatting, special characters, and irrelevant metadata to improve AI comprehension.

- Convert abstracts into plain text or compatible digital formats (e.g., CSV, JSON) that the AI system can parse effectively.

- Input abstracts individually or in batches, depending on the system’s capacity, using either API integrations or upload features provided by the AI platform.

- Ensure proper labeling or tagging of abstracts with relevant categories, topics, or keywords to facilitate focused analysis.
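The preprocessing and conversion steps above can be sketched in Python. This is a minimal illustration that assumes abstracts arrive as dictionaries with `id`, `title`, `keywords` (one semicolon-separated string) and `abstract` fields; those field names, the separator, and the `clean_abstract`/`to_json_records` helpers are hypothetical, so adapt them to the schema your AI platform actually expects.

```python
import html
import json
import re
import unicodedata


def clean_abstract(raw: str) -> str:
    """Strip markup remnants and normalize whitespace so the text parses cleanly."""
    text = html.unescape(raw)                   # decode entities such as "&amp;" or "&nbsp;"
    text = re.sub(r"<[^>]+>", " ", text)        # drop HTML-style tags such as <i>...</i>
    text = unicodedata.normalize("NFKC", text)  # fold compatibility characters (ligatures, no-break spaces)
    text = re.sub(r"\s+", " ", text)            # collapse runs of spaces and newlines
    return text.strip()


def to_json_records(rows: list[dict]) -> str:
    """Convert raw rows into a JSON array with a fixed, predictable set of fields."""
    records = [
        {
            "id": row["id"],
            "title": clean_abstract(row.get("title", "")),
            # Hypothetical input convention: keywords arrive as one semicolon-separated string.
            "keywords": [k.strip() for k in row.get("keywords", "").split(";") if k.strip()],
            "abstract": clean_abstract(row.get("abstract", "")),
        }
        for row in rows
    ]
    return json.dumps(records, ensure_ascii=False, indent=2)
```

`ensure_ascii=False` keeps legitimate non-ASCII characters (author names, Greek letters) readable in the output rather than escaping them.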

Once abstracts are uploaded, AI models can analyze the text using Natural Language Processing (NLP) techniques to generate summaries, extract keywords, and assess thematic relevance. This systematic approach allows rapid initial filtering based on predefined criteria or relevance scores.
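As a rough illustration of keyword extraction, the sketch below ranks each abstract's terms by TF-IDF, so terms that are frequent in one abstract but rare across the batch rank highest. TF-IDF is a classical technique swapped in here for clarity; production NLP tools typically use richer models, and the tokenizer, stopword list, and `top_keywords` helper are assumptions.

```python
import math
import re
from collections import Counter

# Illustrative stopword list (an assumption).
STOPWORDS = {"the", "a", "an", "of", "and", "or", "in", "on", "to", "for",
             "with", "we", "is", "are", "this", "that", "by", "our", "from"}


def tokenize(text: str) -> list[str]:
    # Tokens of two or more characters; hyphenated terms such as "x-ray" stay whole.
    return [w for w in re.findall(r"[a-z][a-z0-9-]+", text.lower()) if w not in STOPWORDS]


def top_keywords(abstracts: list[str], k: int = 5) -> list[list[str]]:
    """Return the k highest TF-IDF terms for each abstract in the batch."""
    docs = [tokenize(a) for a in abstracts]
    n = len(docs)
    # Document frequency: how many abstracts contain each term.
    df = Counter(term for doc in docs for term in set(doc))
    results = []
    for doc in docs:
        tf = Counter(doc)
        # Smoothed IDF keeps terms shared by every abstract at a low but positive weight.
        scores = {t: (c / len(doc)) * (math.log((1 + n) / (1 + df[t])) + 1) for t, c in tf.items()}
        # Sort by score, then alphabetically so ties are deterministic.
        results.append(sorted(scores, key=lambda t: (-scores[t], t))[:k])
    return results
```

Because IDF is computed over the batch, terms shared by every submission (such as "models" in a machine learning track) are pushed down, which is usually what a reviewer wants from a keyword list.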

Organizing a Workflow for Leveraging AI-Generated Summaries or Keywords to Assess Relevance

Integrating AI outputs into a coherent workflow makes abstract screening more efficient. The workflow typically involves generating concise summaries or keyword lists that serve as quick-reference indicators of content relevance and quality.

- Run the uploaded abstracts through AI models designed for summarization and keyword extraction, preferably models trained or fine-tuned on domain-specific language for accuracy.

- Review AI-generated summaries and keywords to identify key themes, methodological approaches, and relevance to conference or journal topics.

- Create relevance thresholds based on the presence of specific keywords or the quality of summaries, enabling automated or semi-automated filtering.

- Use these indicators to categorize abstracts into tiers such as ‘high relevance,’ ‘moderate relevance,’ and ‘low relevance,’ facilitating targeted manual review.

- Maintain a feedback loop where reviewers can validate or correct AI outputs, gradually improving model performance over time.
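One way to implement keyword-based relevance thresholds and tiers is sketched below. It assumes relevance is simply the fraction of the venue's topic keywords found among an abstract's extracted keywords, and the cutoffs of 0.6 and 0.3 are hypothetical values to be tuned. Reviewer corrections override the automatic tier, which also leaves a record of disagreements for the feedback loop.

```python
from typing import Optional


def keyword_relevance(abstract_keywords: set[str], topic_keywords: set[str]) -> float:
    """Fraction of the venue's topic keywords that appear among the abstract's keywords."""
    if not topic_keywords:
        return 0.0
    return len(abstract_keywords & topic_keywords) / len(topic_keywords)


def tier(score: float, high: float = 0.6, moderate: float = 0.3) -> str:
    # Hypothetical cutoffs; tune them on abstracts that reviewers have already labeled.
    if score >= high:
        return "high relevance"
    if score >= moderate:
        return "moderate relevance"
    return "low relevance"


def assign_tiers(abstracts: dict[str, list[str]], topic_keywords: set[str],
                 overrides: Optional[dict[str, str]] = None) -> dict[str, str]:
    """Tier each abstract; a reviewer's correction in `overrides` wins over the AI tier."""
    overrides = overrides or {}
    topic = {t.lower() for t in topic_keywords}
    tiers = {}
    for abstract_id, keywords in abstracts.items():
        score = keyword_relevance({k.lower() for k in keywords}, topic)
        tiers[abstract_id] = overrides.get(abstract_id, tier(score))
    return tiers
```

Storing `overrides` separately, rather than editing the AI output in place, makes it easy to count how often reviewers disagree with each tier when deciding whether to retrain or re-tune.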

This process not only speeds up the initial screening but also ensures that subsequent detailed reviews are focused on the most pertinent abstracts, maximizing resource allocation efficiency.

Comparison Table: Traditional Review vs. AI-Assisted Methods

Understanding the tangible benefits of AI integration can be facilitated through a comparative analysis of traditional and AI-assisted review processes. The table below illustrates the differences across critical metrics such as time, accuracy, and effort involved.

| Aspect | Traditional Review | AI-Assisted Review |
|---|---|---|
| Time | Multiple hours to days for large volumes of abstracts, depending on reviewer availability and workload | Reduced to minutes or hours for initial screening, enabling rapid filtering of thousands of submissions |
| Accuracy | Dependent on reviewers’ expertise; potential for subjective bias and inconsistency | Consistent initial screening based on trained models; supplemented by human validation to ensure reliability |
| Effort | High manual effort; repetitive task for reviewers, risking fatigue and oversight | Minimized manual effort; AI handles bulk processing, allowing reviewers to focus on nuanced evaluations |

“AI accelerates the initial screening phase, reducing human workload while maintaining a high standard of relevance detection through consistent processing.”

Features to Look for in AI Tools for Abstract Review

When selecting AI tools designed for abstract review, it is crucial to focus on features that ensure a comprehensive understanding of scientific language, structure, and thematic content. The right AI software can significantly streamline the evaluation process, enabling reviewers to efficiently identify high-quality submissions and maintain consistency across large datasets. Understanding the key functionalities and criteria for these tools facilitates informed decision-making and enhances the overall review workflow.

Effective AI tools should possess capabilities that accurately interpret complex scientific language, extract relevant information, and provide meaningful categorization and scoring. These functionalities help reviewers quickly assess abstracts’ relevance, novelty, and scientific rigor, ultimately supporting better decision-making and reducing manual workload.

Criteria for Selecting AI Software Capable of Understanding Scientific Language and Structure

Choosing the appropriate AI tool involves considering several essential criteria that ensure the software can handle the nuances of scientific texts. The software should be built on advanced natural language processing (NLP) models trained specifically on scientific literature to accurately interpret technical terminology, complex sentence structures, and domain-specific jargon. Additionally, the tool should demonstrate proficiency in recognizing the typical structure of scientific abstracts, including sections such as objectives, methods, results, and conclusions.
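For structured abstracts with explicit headings, recognizing the typical sections can be approximated with a regular expression. The sketch below assumes labels such as "Objective:" or "Methods:" appear in the text; the label list and the `split_sections` helper are hypothetical, any text before the first label is ignored, and unstructured abstracts (or a label word followed by a colon mid-sentence) would need a trained model rather than this heuristic.

```python
import re

# Hypothetical label set; extend it with the headings your venue requires.
SECTION_LABELS = ("Background", "Objective", "Objectives", "Methods",
                  "Results", "Conclusion", "Conclusions")
SECTION_RE = re.compile(r"\b(" + "|".join(SECTION_LABELS) + r")\s*:", re.IGNORECASE)


def split_sections(abstract: str) -> dict[str, str]:
    """Map each detected section label (lowercased) to the text that follows it."""
    matches = list(SECTION_RE.finditer(abstract))
    if not matches:
        return {"unstructured": abstract.strip()}
    sections = {}
    for i, match in enumerate(matches):
        # A section runs until the next label, or to the end of the abstract.
        end = matches[i + 1].start() if i + 1 < len(matches) else len(abstract)
        sections[match.group(1).lower()] = abstract[match.end():end].strip()
    return sections
```

A tool that genuinely understands abstract structure should handle unlabeled abstracts too; a heuristic like this is mainly useful as a quick baseline when comparing candidate tools.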

Compatibility with existing review platforms and ease of integration into workflows are also important. The AI should support seamless data transfer, facilitate customization to specific conference or journal requirements, and offer user-friendly interfaces for reviewers with varying technical expertise. Reliability, scalability, and the ability to update models as scientific language evolves are further key considerations.

Key Functionalities of AI Tools for Abstract Review

Desirable features in AI software for abstract review encompass a variety of functionalities that enhance the speed and accuracy of evaluation. These include:

- Keyword Extraction: Identifies core terms and concepts within abstracts, aiding quick thematic assessment.

- Thematic Categorization: Classifies abstracts into predefined research areas or topics, streamlining the sorting process.

- Relevance Scoring: Provides a consistent, quantitative measure of how pertinent an abstract is to the conference or journal scope, based on content analysis.

- Language Quality Assessment: Evaluates clarity, grammar, and coherence, which are indicators of overall quality.

- Similarity Analysis: Detects overlaps or potential duplication with existing submissions or past literature.

Incorporating these functionalities allows reviewers to focus their efforts on the most promising abstracts, reducing manual review time while maintaining high standards of quality control.
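Similarity analysis can be prototyped with cosine similarity over word counts, as sketched below. This is a lexical stand-in: it catches reworded copies that share vocabulary but not true paraphrases, which is what embedding-based semantic comparison is for. The 0.8 threshold and the helper names are assumptions for illustration.

```python
import math
import re
from collections import Counter
from itertools import combinations


def vectorize(text: str) -> Counter:
    # Bag-of-words term counts; no stemming or stopword removal in this sketch.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def near_duplicates(abstracts: dict[str, str], threshold: float = 0.8) -> list[tuple[str, str, float]]:
    """Return every pair of abstracts whose cosine similarity meets the threshold."""
    vectors = {aid: vectorize(text) for aid, text in abstracts.items()}
    pairs = []
    for a, b in combinations(sorted(vectors), 2):
        sim = cosine(vectors[a], vectors[b])
        if sim >= threshold:
            pairs.append((a, b, round(sim, 3)))
    return pairs
```

Comparing every pair is quadratic in the number of submissions, which is fine for a few thousand abstracts; larger collections usually need an approximate nearest-neighbor index.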

Features and Functionalities Table

| Feature | Description | Example Applications | Integration Options |
|---|---|---|---|
| Keyword Extraction | Automatically identifies and highlights key terms and concepts within abstracts to facilitate thematic analysis. | Filtering abstracts by research keywords such as “machine learning,” “climate change,” or “nanotechnology.” | API integration with review management systems such as EasyChair or Ex Ordo, where the platform supports it, for real-time tagging. |
| Thematic Categorization | Classifies abstracts into predefined research domains using machine learning models trained on scientific datasets. | Sorting submissions into categories such as biomedical, engineering, or social sciences to enable targeted reviewer assignment. | Plug-ins for systems such as ScholarOne, where available, or custom integrations via SDKs for automated categorization. |
| Relevance Scoring | Provides a quantitative score indicating how relevant each abstract is to the conference scope. | Prioritizing abstracts with high relevance scores for detailed review to optimize reviewer time. | Integration via RESTful APIs with review platforms that support custom scoring metrics. |
| Language Quality Assessment | Analyzes the clarity, grammatical correctness, and overall readability of abstracts. | Flagging submissions with language issues for author revision or reviewer attention. | Embedded within manuscript submission portals or as standalone modules compatible with major review tools. |
| Similarity Analysis | Detects potential overlaps or duplicate submissions through semantic comparison. | Identifying plagiarized or highly similar abstracts to maintain originality standards. | Integration with plagiarism detection systems or via custom scripts in review workflows. |

By carefully evaluating these features and functionalities, organizations can select AI tools tailored to their specific review needs, ensuring a more efficient, accurate, and transparent abstract evaluation process.

Setting Up an AI System for Abstract Screening

Implementing an effective AI-driven abstract review system begins with meticulous preparation of datasets and input formats. Proper setup ensures the AI models can process information accurately and deliver reliable screening results. This foundational step is essential for seamless integration into existing review workflows and for maximizing the efficiency gains offered by AI technologies.

Configuring the AI system appropriately involves several critical procedures, including dataset preparation, parameter customization, and the development of standardized input templates. These steps enable the AI to interpret and evaluate abstracts consistently across disciplines and review standards, ultimately leading to faster, more accurate screening processes.

Preparing Datasets and Input Formats for Optimal AI Processing

To leverage AI effectively in abstract screening, the initial task involves curating high-quality datasets. These datasets serve as the training basis for AI models and should encompass a representative sample of abstracts relevant to the specific review domain. Ensuring diversity in the dataset helps the AI learn nuanced distinctions, such as identifying relevant versus irrelevant research and understanding discipline-specific terminology.

When preparing datasets, consider the following guidelines:

- Collect abstracts in structured formats such as CSV, JSON, or XML, which facilitate consistent data ingestion and parsing.

- Ensure each abstract entry includes key metadata fields such as title, authors, keywords, and abstract text to give the AI more context.

- Remove duplicates, irrelevant content, or poorly formatted entries that could impair AI learning and evaluation accuracy.

- Normalize text by standardizing character encodings (preferably UTF-8), uniform punctuation, and consistent use of terminology.
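The dataset guidelines above might be applied to a CSV export roughly as follows. This sketch assumes columns named `title`, `authors`, `keywords` and `abstract`; it normalizes Unicode and quote characters, rejects rows with missing fields, and drops exact duplicates after normalization. The column names and helpers are hypothetical.

```python
import csv
import io
import unicodedata

REQUIRED_FIELDS = ("title", "authors", "keywords", "abstract")  # hypothetical column names


def normalize(text: str) -> str:
    text = unicodedata.normalize("NFC", text)
    # Uniform punctuation: map curly quotes to straight ones.
    text = text.translate(str.maketrans({"\u201c": '"', "\u201d": '"', "\u2018": "'", "\u2019": "'"}))
    return " ".join(text.split())  # collapse all whitespace runs


def curate(csv_text: str) -> tuple[list[dict], list[tuple[str, str]]]:
    """Return (kept rows, rejected rows with a reason)."""
    kept, rejected, seen = [], [], set()
    for raw in csv.DictReader(io.StringIO(csv_text)):
        # Skip the None key DictReader uses for surplus columns; missing columns arrive as None.
        row = {k: normalize(v or "") for k, v in raw.items() if k is not None}
        if any(not row.get(f) for f in REQUIRED_FIELDS):
            rejected.append((row.get("title", ""), "missing field"))
            continue
        key = row["abstract"].lower()  # exact duplicate after normalization
        if key in seen:
            rejected.append((row["title"], "duplicate"))
            continue
        seen.add(key)
        kept.append(row)
    return kept, rejected
```

When reading from disk instead of a string, open the file with `encoding="utf-8"` and `newline=""`, as the `csv` module documentation recommends. Keeping the rejected rows with a reason makes it easy to send them back for correction instead of silently losing them.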

Input formats should be compatible with the chosen AI platform or tool. For optimal processing, use standardized data schemas and ensure that the abstracts are free from formatting errors or extraneous symbols, which can interfere with parsing and analysis.

Organizing Guidelines for Customizing AI Parameters

Customizing AI parameters is pivotal to aligning the system with specific review standards and disciplinary nuances. Proper parameter tuning enhances the AI’s ability to discriminate relevant abstracts and adhere to review criteria, resulting in improved screening precision and efficiency.

Key considerations for customizing AI parameters include:

- Threshold Settings: Adjust relevance score thresholds to balance sensitivity and specificity according to review priorities. For instance, a lower threshold increases recall, capturing more relevant abstracts at the cost of more false positives.

- Feature Selection: Configure which features (e.g., keywords, author affiliations, publication years) the AI emphasizes during evaluation, based on the review’s focus areas.

- Discipline-Specific Tuning: Incorporate domain-specific vocabularies and ontologies to guide the AI in understanding specialized terminology, which improves the accuracy of relevance assessments.

- Training Data Weighting: Assign different weights to various parts of the dataset if certain types of abstracts (e.g., recent studies or peer-reviewed articles) are prioritized.
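The sensitivity/specificity trade-off behind threshold settings can be checked on a small labeled validation set. The sketch below assumes you have pairs of (AI relevance score, human relevance label) and reports, for each candidate threshold, the recall and the number of false positives; the data format and the `sweep_thresholds` helper are hypothetical.

```python
def sweep_thresholds(scored: list[tuple[float, bool]],
                     thresholds: list[float]) -> list[tuple[float, float, int]]:
    """For each threshold, report (threshold, recall, false positives).

    `scored` holds (AI relevance score, human says relevant) pairs from a validation set.
    """
    total_relevant = sum(1 for _, relevant in scored if relevant)
    report = []
    for t in thresholds:
        selected = [relevant for score, relevant in scored if score >= t]
        true_pos = sum(selected)  # True counts as 1
        false_pos = len(selected) - true_pos
        recall = true_pos / total_relevant if total_relevant else 0.0
        report.append((t, recall, false_pos))
    return report


scored = [(0.9, True), (0.7, True), (0.6, False), (0.4, True), (0.3, False)]
for threshold, recall, false_pos in sweep_thresholds(scored, [0.8, 0.5, 0.2]):
    print(f"threshold={threshold:.1f} recall={recall:.2f} false_positives={false_pos}")
```

On this toy data, lowering the threshold from 0.8 to 0.2 raises recall from 0.33 to 1.00 while false positives grow from 0 to 2, which is exactly the trade-off described in the Threshold Settings item.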

Adjusting these parameters should be guided by validation results and iterative testing, ensuring that the AI system remains aligned with review standards and disciplinary expectations.

Creating Efficient Templates for Inputting Abstracts

Developing standardized templates for abstract input streamlines the data entry process, minimizes errors, and ensures consistency across datasets. Well-structured templates facilitate rapid data preparation and feeding into the AI system, which is crucial for maintaining throughput in large-scale review projects.

Effective templates typically include:

- Metadata Fields: Clearly defined sections for title, authors, keywords, publication year, and journal or conference name. Including these fields helps the AI contextualize each abstract.

- Abstract Text: A dedicated, unformatted text area for the main abstract body, ensuring the AI receives the core content without extraneous formatting.

- Standardized Labels or Tags: Use tags to denote relevance, review status, or disciplinary categories, if applicable, which can aid in supervised learning or evaluation calibration.

- Validation Checks: Incorporate built-in validation rules that flag missing information, unusual characters, or inconsistent formatting before submission.
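A standardized template with built-in validation checks could be modeled as a Python dataclass, as sketched below. The field names, the plausible-year range, and what counts as an "unusual character" (control characters and the Unicode replacement character, which usually signals an encoding error) are assumptions chosen for illustration.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

# Control characters (except tab, newline, CR) and U+FFFD, a common sign of mis-decoded text.
UNUSUAL_CHARS = re.compile(r"[\x00-\x08\x0b\x0c\x0e-\x1f\ufffd]")
MARKUP = re.compile(r"<[^>]+>")


@dataclass
class AbstractEntry:
    title: str
    authors: list[str]
    keywords: list[str]
    year: Optional[int]
    venue: str
    abstract: str
    tags: list[str] = field(default_factory=list)  # e.g. relevance or review-status labels

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the entry is ready to submit."""
        problems = []
        for name in ("title", "venue", "abstract"):
            if not getattr(self, name).strip():
                problems.append(f"missing {name}")
        if not self.authors:
            problems.append("missing authors")
        if not self.keywords:
            problems.append("missing keywords")
        if self.year is not None and not 1900 <= self.year <= 2100:  # hypothetical range
            problems.append("implausible year")
        if UNUSUAL_CHARS.search(self.title + self.abstract):
            problems.append("unusual characters")
        if MARKUP.search(self.abstract):
            problems.append("formatting markup in abstract")
        return problems
```

Returning every problem at once, rather than stopping at the first, lets staff fix an entry in a single pass.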

To optimize input efficiency, consider utilizing automated data entry tools such as data import scripts or form-based interfaces that can extract information directly from sources like databases or publication repositories. Training review staff to adhere strictly to the template format ensures uniformity, reduces preprocessing workload, and enhances the AI system’s overall performance.

Techniques for Interpreting AI Output for Rapid Decision-Making

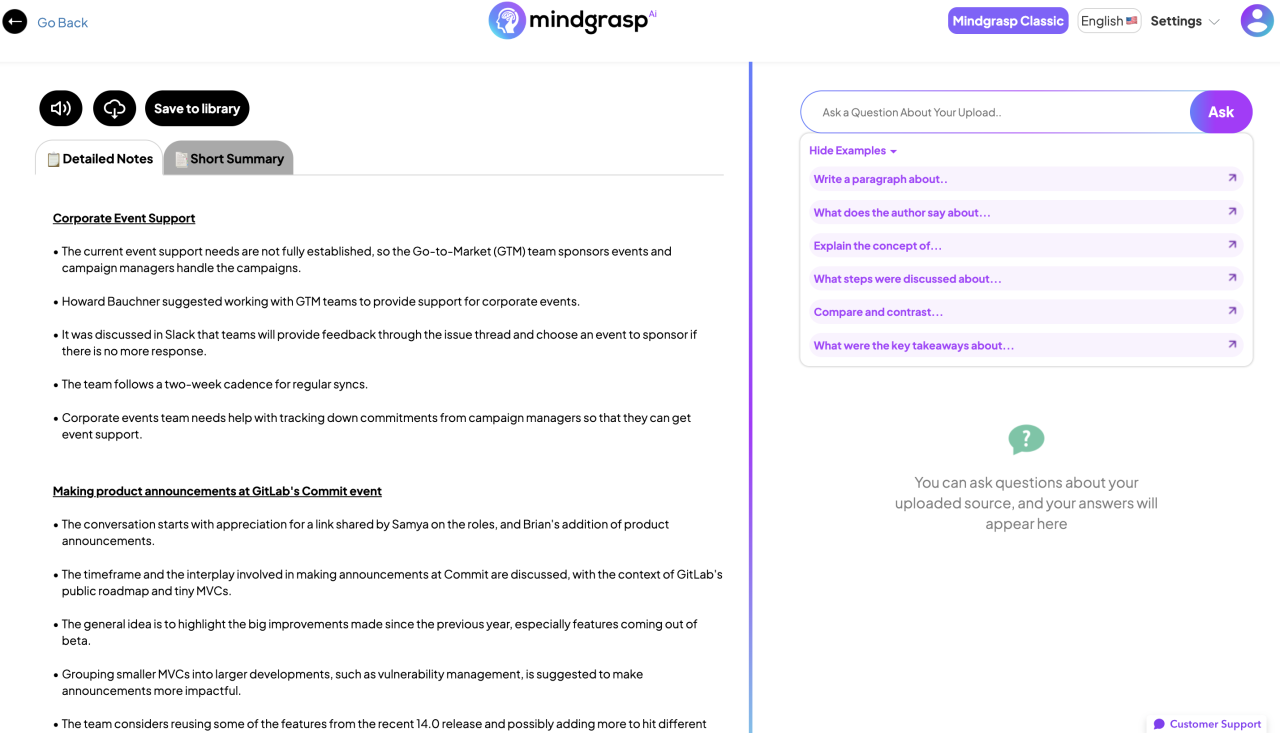

Efficiently interpreting AI-generated feedback is essential for accelerating the abstract review process. By understanding how to read summaries, keyword lists, relevance indicators, and scores, reviewers can make informed decisions quickly while maintaining high selection standards. Mastering these techniques lets reviewers combine AI insights with human judgment, leading to more consistent and objective evaluations.

Interpreting AI outputs requires a systematic approach to each form of feedback. Summaries condense the core content of abstracts, highlighting key themes and findings. Keyword lists show how closely an abstract relates to specific topics or research areas. Relevance indicators and scores offer quantitative measures that support prioritization. Combining these elements lets reviewers classify abstracts efficiently and focus their attention on the most promising submissions.

Analyzing AI-Generated Summaries, Keywords, and Relevance Indicators

AI tools that generate summaries, keyword lists, and relevance scores provide a multi-layered view of each abstract. Reviewers should begin with the summary, which offers a quick overview of the research focus and significance; a summary consistent with the abstract’s main content indicates reliable AI output. Next, check the keyword list for terms that match the review criteria, confirming that the AI has identified the pertinent concepts.

Relevance indicators or scores typically range from low to high, representing the predicted fit of each abstract within the review scope. To interpret these outputs effectively, compare the AI-generated summaries with the original abstracts to verify accuracy. Look for keywords that reflect core research themes, such as methodology, findings, or novel contributions. Use relevance scores as a guiding metric, prioritizing abstracts with higher scores for immediate review.

In cases where summaries or keywords appear inconsistent or incomplete, flag those abstracts for manual review or further AI clarification.
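Part of this verification can be automated. The sketch below flags a summary when fewer than 80% of its content words occur in the original abstract (a crude signal of unsupported content) and lists expected keywords that do not appear in the abstract at all. It is purely lexical, so legitimate paraphrases may also be flagged; the threshold, the stopword list, and the `check_summary` helper are hypothetical.

```python
import re

# Illustrative stopword list (an assumption).
STOPWORDS = {"the", "an", "of", "and", "or", "in", "on", "to", "for",
             "with", "we", "is", "are", "this", "that", "by"}


def content_words(text: str) -> set[str]:
    return {w for w in re.findall(r"[a-z][a-z0-9-]+", text.lower()) if w not in STOPWORDS}


def check_summary(abstract: str, summary: str, keywords: list[str],
                  min_support: float = 0.8) -> list[str]:
    """Return reasons to send an abstract to manual review (empty list = looks consistent)."""
    summary_words = content_words(summary)
    supported = summary_words & content_words(abstract)
    # Share of summary words that the abstract actually contains; an empty summary scores 0.
    support = len(supported) / len(summary_words) if summary_words else 0.0
    flags = []
    if support < min_support:
        flags.append("summary introduces terms not in abstract")
    missing = [k for k in keywords if k.lower() not in abstract.lower()]
    if missing:
        flags.append("keywords not found in abstract: " + ", ".join(missing))
    return flags
```

An empty result means only that nothing obviously contradicts the abstract; it does not confirm the summary captures the key finding, so spot checks by reviewers remain necessary.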

Prioritizing Abstracts Based on AI Scores and Summaries

Prioritization rests on the combined assessment of AI scores, summaries, and keyword relevance. Establish thresholds that classify abstracts into categories such as high, medium, or low priority based on relevance scores. For example, abstracts scoring above 0.8 on a scale from 0 to 1 may automatically qualify as high priority, especially if their summaries align with the review’s focus areas.

Use a structured approach, such as a scoring rubric, to weigh the different AI outputs:

- High relevance scores paired with comprehensive summaries indicating clear research significance.

- Moderate scores with partial relevance, warranting a secondary manual review for potential inclusion.

- Low scores or summaries lacking pertinent information, which can be deprioritized or excluded.

This method ensures consistent decision-making and optimizes reviewer effort by focusing on abstracts most likely to meet the review criteria.
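The rubric can be expressed as a small function. This sketch uses the 0.8 high-priority cutoff from the example above and assumes a hypothetical medium cutoff of 0.6 and a boolean `summary_aligned` flag set by a reviewer or an automated check; high scores whose summary does not align drop to the medium tier for manual review.

```python
def prioritize(score: float, summary_aligned: bool,
               high: float = 0.8, medium: float = 0.6) -> str:
    """Map an AI relevance score (0 to 1) plus a summary check to a priority tier."""
    if score > high and summary_aligned:
        return "high"
    if score >= medium:
        # Includes high scores whose summary did not align: these need a manual look.
        return "medium"
    return "low"


def rank(abstracts: list[dict]) -> list[str]:
    """Order abstract IDs by tier, then by descending score within each tier."""
    order = {"high": 0, "medium": 1, "low": 2}
    ranked = sorted(abstracts,
                    key=lambda a: (order[prioritize(a["score"], a["aligned"])], -a["score"]))
    return [a["id"] for a in ranked]
```

Sorting by tier first and score second keeps the reading order predictable: reviewers see every high-priority abstract before any medium one, regardless of small score differences.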

“Effective interpretation of AI outputs involves verifying that summaries, keywords, and relevance scores agree, enabling rapid prioritization without compromising review quality.”

Organizing AI Feedback for Quick Comparison

A structured framework is essential for rapidly comparing multiple abstracts based on AI feedback. A standardized feedback template or table lets reviewers quickly scan and contrast key metrics such as relevance scores, summary quality, and keyword matches. One way to organize this is a table with the following columns:

| Abstract ID | Summary Quality | Relevance Score | Keyword Match | Prioritized |
|---|---|---|---|---|
| 001 | Clear and concise | 0.92 | Yes | Yes |
| 002 |  | 0.75 | Partially | Potential |
| 003 | Vague overview | 0.55 | No | No |

This visual organization facilitates quick decision-making, allowing reviewers to focus on high-priority abstracts first, compare secondary options, and document their judgments efficiently. Employing color coding or visual markers can further expedite the comparison process, especially when handling large volumes of submissions.
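A table like this can be generated directly from AI output. The sketch assumes each record is a dictionary with hypothetical keys `id`, `summary_quality`, `score`, `keyword_match` and `prioritized`, and emits a Markdown table sorted by relevance score so the strongest candidates appear first.

```python
def feedback_table(rows: list[dict]) -> str:
    """Render AI feedback as a Markdown table, highest relevance score first."""
    lines = [
        "| Abstract ID | Summary Quality | Relevance Score | Keyword Match | Prioritized |",
        "|---|---|---|---|---|",
    ]
    for row in sorted(rows, key=lambda r: r["score"], reverse=True):
        lines.append(
            f"| {row['id']} | {row['summary_quality']} | {row['score']:.2f} "
            f"| {row['keyword_match']} | {row['prioritized']} |"
        )
    return "\n".join(lines)
```

Generating the table rather than filling it by hand keeps it in sync with the latest AI run; color coding can then be added in whatever viewer renders the Markdown.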

Challenges and Limitations of AI in Abstract Review

While AI-powered tools present significant advantages in accelerating and streamlining the abstract review process, they are not without their inherent challenges and limitations. Recognizing these issues is crucial for deploying AI effectively and ensuring the integrity of the review process. Understanding common pitfalls and strategies to address them can enhance the reliability of AI-assisted evaluations and facilitate continuous improvement.

AI systems in abstract review are susceptible to errors stemming from complex language, contextual nuances, and the diversity of scientific disciplines. These limitations can impact decision accuracy, potentially leading to the oversight of valuable research or the misclassification of submissions. As such, it is vital to implement strategies that mitigate these challenges and establish robust performance monitoring protocols.

Misinterpretation of Complex Language and Context

One of the primary pitfalls in AI-based abstract review is the misinterpretation of highly technical or nuanced language. Scientific abstracts often contain domain-specific terminology, abbreviations, and complex sentence structures that can challenge even advanced AI models. For example, an abstract discussing “neuroplasticity” may include subtle references to experimental techniques or statistical analyses that AI might misinterpret without contextual understanding.

Additionally, AI models may struggle with understanding the broader context or the significance of findings within a specific field. This can lead to inaccurate assessments of an abstract’s relevance or quality. For instance, an AI might overlook the importance of a novel methodological approach if it is not explicitly highlighted or if the language used deviates from training data patterns.

Strategies to Mitigate Errors and Enhance Accuracy

To address these challenges, several strategies can be employed to improve AI performance over time. Fine-tuning models with domain-specific corpora ensures better comprehension of specialized terminology and context, reducing misinterpretation risks. Incorporating human-in-the-loop systems allows expert reviewers to validate AI outputs, providing corrections that serve as feedback for ongoing model training.

Regularly updating training datasets with new abstracts, especially from rapidly evolving fields, helps AI stay current with emerging terminology and research trends. Applying advanced natural language processing techniques, such as context-aware transformers, can further enhance understanding of complex language structures. Moreover, setting confidence thresholds for AI decisions can flag uncertain evaluations for human review, maintaining a balance between speed and accuracy.
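Confidence thresholds can be sketched as a routing step: decisions the model is confident about pass through, and the rest go to a human queue. The sketch assumes the model returns a confidence value between 0 and 1 alongside each decision; the 0.75 cutoff and the `route_decisions` helper are hypothetical.

```python
def route_decisions(predictions: list[tuple[str, str, float]],
                    min_confidence: float = 0.75):
    """Split (abstract_id, decision, confidence) triples into auto-accepted and flagged lists."""
    auto, flagged = [], []
    for abstract_id, decision, confidence in predictions:
        if confidence >= min_confidence:
            auto.append((abstract_id, decision))
        else:
            # Low confidence: keep the AI suggestion visible but require a human decision.
            flagged.append((abstract_id, decision, confidence))
    return auto, flagged
```

Raising `min_confidence` sends more abstracts to humans and trades speed for safety; the right value depends on how costly a wrongly excluded abstract is for the venue.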

Documenting and Reviewing AI Performance Metrics

Maintaining detailed records of AI system performance is essential for ongoing improvement and accountability. Metrics such as precision, recall, F1-score, and accuracy provide quantitative insights into how well the AI model is performing in classifying abstracts or identifying relevant content. Tracking these metrics over time reveals trends and helps identify areas needing refinement.

Implementing a structured review process for AI outputs involves comparing automated assessments with human judgments, especially in borderline cases. Documenting instances of errors, false positives, and false negatives informs targeted updates to the model. Visualization tools like dashboards displaying performance metrics can facilitate ongoing monitoring, enabling data-driven decisions to enhance AI effectiveness. Ultimately, a commitment to continuous evaluation and adaptation ensures that AI systems evolve in alignment with the dynamic landscape of scientific research and abstract review standards.
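Precision, recall, F1-score, and accuracy can be computed by comparing AI decisions with human judgments on the same abstracts. The sketch assumes both are stored as dictionaries mapping a hypothetical abstract ID to a boolean "relevant" label, and that every human-labeled ID also has an AI decision; it returns the IDs of false positives and false negatives so they can be documented and used for targeted model updates.

```python
def evaluate(ai_labels: dict[str, bool], human_labels: dict[str, bool]) -> dict:
    """Compare AI relevance decisions against human judgments on the same abstracts."""
    tp = fp = fn = tn = 0
    false_positives, false_negatives = [], []
    for abstract_id, truth in human_labels.items():
        predicted = ai_labels[abstract_id]  # assumes the AI labeled every abstract
        if predicted and truth:
            tp += 1
        elif predicted:
            fp += 1
            false_positives.append(abstract_id)  # AI said relevant, reviewer disagreed
        elif truth:
            fn += 1
            false_negatives.append(abstract_id)  # AI missed a relevant abstract
        else:
            tn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(human_labels) if human_labels else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy,
            "false_positives": false_positives, "false_negatives": false_negatives}
```

Logging these values after each review cycle gives the trend data a performance dashboard needs, and the two error lists point directly at the abstracts worth examining.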

Conclusion

In summary, leveraging AI to review abstracts offers a powerful means to accelerate research evaluation without compromising quality. Combining automated insights with human judgment creates a balanced approach that maximizes efficiency and accuracy. Implementing these strategies can lead to more timely decision-making and foster a more productive research environment in the long run.