Analyzing survey responses with AI makes it practical to work through large volumes of data quickly and consistently. Machine learning techniques can turn raw responses into usable insights, helping organizations make informed decisions sooner. The approach deepens the analysis and also automates steps that were once manual and time-consuming.

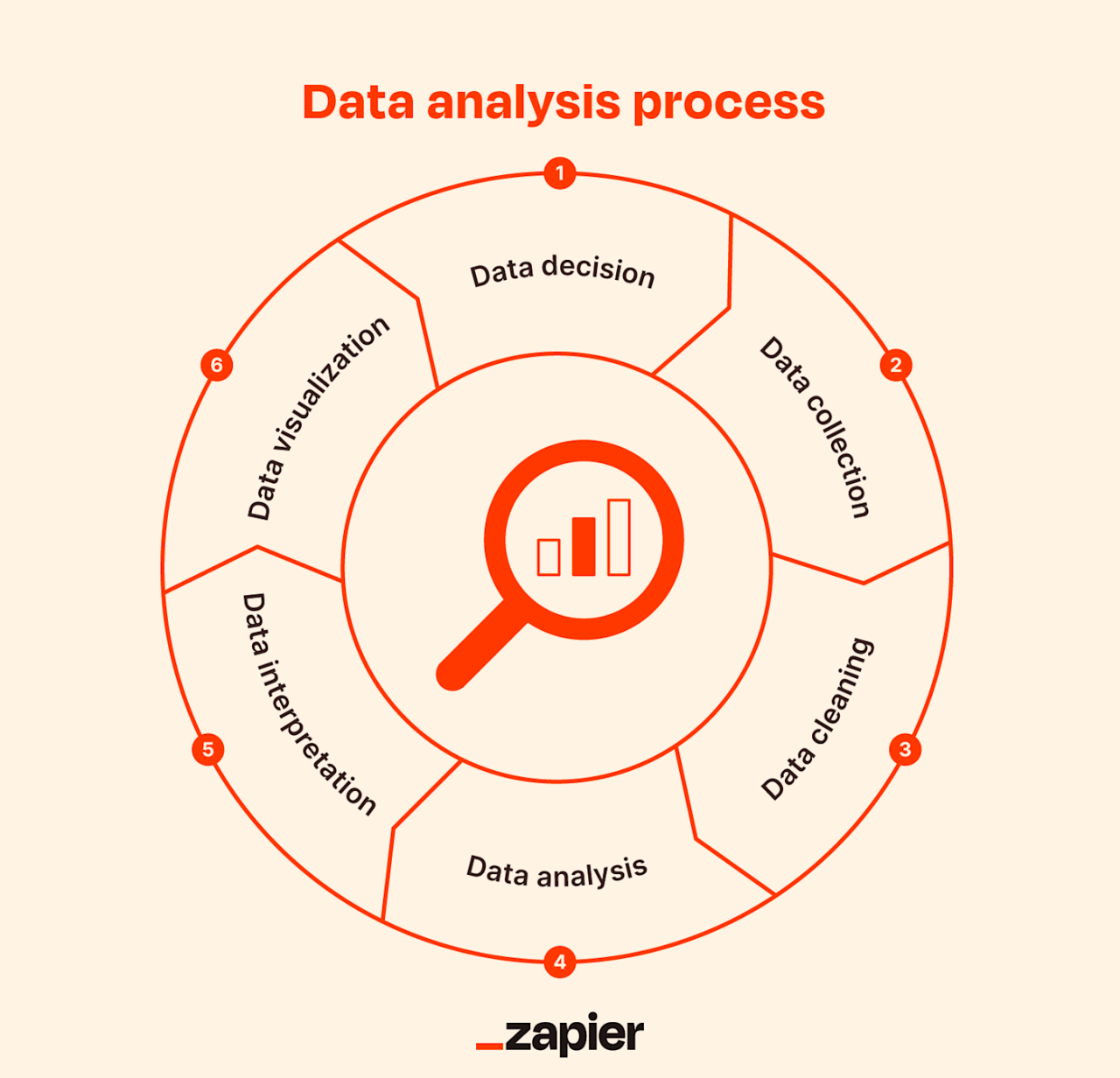

In this comprehensive guide, we explore the fundamental principles of AI-driven survey analysis, including data preparation, response classification, insight extraction, visualization, and ethical considerations. By integrating these techniques, users can maximize the value of survey data while ensuring privacy and ethical standards are maintained throughout the process.

Understanding the Basics of AI-Driven Survey Response Analysis

In the era of big data, leveraging artificial intelligence (AI) to analyze survey responses has become increasingly essential for extracting valuable insights efficiently. AI-driven analysis automates the process of interpreting vast amounts of textual and numerical data, enabling organizations to identify patterns, sentiments, and key themes with greater accuracy and speed. Understanding the fundamental principles behind these technologies is crucial for deploying effective survey analysis strategies that inform decision-making.

At its core, AI-based survey response analysis involves utilizing advanced algorithms and models designed to process and interpret both qualitative and quantitative data. These models can handle complex datasets, transforming raw responses into actionable insights without the need for extensive manual effort. This approach not only accelerates the analysis process but also enhances the consistency and objectivity of the interpretation, providing a comprehensive understanding of respondent feedback.

Fundamental Principles of AI in Survey Data Processing

The application of AI in survey response analysis relies on several key principles that enable accurate and meaningful interpretation of data:

- Data Preprocessing: Raw survey responses often contain noise, inconsistencies, or formatting issues. AI models begin with cleaning and standardizing the data to ensure meaningful analysis. This involves removing irrelevant information, correcting spelling errors, and normalizing numerical responses.

- Feature Extraction: Transforming textual responses into numerical or categorical features is essential for analysis. Techniques such as tokenization, stemming, and embedding convert language data into structured formats that machine learning models can interpret.

- Model Training and Learning: Machine learning models learn patterns from labeled or unlabeled data, adapting to nuances in responses. Supervised learning uses predefined labels (e.g., sentiment categories), while unsupervised learning discovers inherent structures like clusters or themes within responses.

- Interpretability and Explanation: Effective AI models provide insights into how conclusions are reached, ensuring transparency. This is vital for stakeholders to trust automated analysis results and make informed decisions based on them.
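The first two principles can be sketched in a few lines of standard-library Python. This is a minimal illustration, not a production pipeline: the stopword list, the sample responses, and the function names `preprocess` and `bag_of_words` are assumptions made for the example.

```python
import re
from collections import Counter

# Hypothetical stopword list, kept tiny for illustration.
STOPWORDS = {"the", "a", "an", "is", "was", "and", "to", "of", "it", "i"}

def preprocess(text):
    """Lowercase the text, drop punctuation, tokenize, and remove stopwords."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return [t for t in tokens if t not in STOPWORDS]

def bag_of_words(tokens, vocabulary):
    """Count how often each vocabulary term occurs in one response."""
    counts = Counter(tokens)
    return [counts[term] for term in vocabulary]

# Made-up sample responses.
responses = [
    "The checkout was FAST and easy!!",
    "Checkout is slow, support was slow to reply.",
]
tokenized = [preprocess(r) for r in responses]
vocabulary = sorted({t for tokens in tokenized for t in tokens})
vectors = [bag_of_words(tokens, vocabulary) for tokens in tokenized]
```

Each response becomes a fixed-length count vector over the shared vocabulary, which is the kind of structured input a downstream model can consume. Stemming and embeddings would replace or extend the counting step.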

Machine Learning Models Interpreting Textual and Numerical Responses

Machine learning models form the backbone of AI-driven survey analysis by enabling the interpretation of diverse data types. These models analyze textual feedback and numerical ratings to identify underlying sentiments, themes, and trends.

Natural Language Processing (NLP) techniques allow models to understand human language, extracting sentiments, emotions, and key topics from textual responses. For example, sentiment analysis algorithms evaluate whether open-ended comments are positive, negative, or neutral, providing a quantitative measure of respondent satisfaction.

Numerical responses, such as rating scales or Likert items, are processed using statistical and regression models to detect correlations, trends, and outliers. These models help quantify the strength of sentiments or preferences expressed, facilitating comparative analysis across different respondent segments.

Common machine learning approaches include:

- Sentiment Analysis: Classifies responses based on emotional tone, often using NLP techniques like word embeddings and supervised classifiers.

- Topic Modeling: Identifies dominant themes and topics in textual responses through algorithms like Latent Dirichlet Allocation (LDA).

- Clustering: Groups similar responses together to uncover patterns or distinct respondent segments without prior labeling.

- Regression Analysis: Predicts numerical responses or ratings based on respondent features, revealing factors influencing specific outcomes.
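As a small illustration of the regression item, the sketch below fits a straight line by ordinary least squares to relate a numeric respondent feature to a rating. The data (support wait time versus a 1-5 satisfaction score) and the name `fit_line` are invented for the example.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Hypothetical data: support wait time in minutes vs. satisfaction (1-5).
wait_minutes = [2, 5, 10, 15, 20]
satisfaction = [5, 4, 4, 2, 1]
slope, intercept = fit_line(wait_minutes, satisfaction)
```

A negative slope here would suggest that longer waits go with lower ratings. With real survey data, a single-feature fit like this is only a first look and says nothing about causation.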

Common AI Techniques for Large-Scale Survey Response Analysis

Analyzing large volumes of survey responses requires robust AI techniques capable of handling high data throughput while maintaining accuracy. These methods are designed to automate and streamline the extraction of insights from extensive datasets.

Deep learning models (multi-layer neural networks) excel at capturing complex patterns in textual data, supporting nuanced sentiment and thematic analysis at scale.

Among the most commonly employed techniques are:

- Natural Language Processing (NLP): Encompasses a range of algorithms for text tokenization, part-of-speech tagging, named entity recognition, and semantic understanding. These techniques are fundamental for converting unstructured responses into analyzable formats.

- Sentiment Analysis Algorithms: Use supervised classifiers trained on labeled datasets to automatically categorize responses by sentiment, enabling rapid analysis of open-ended feedback.

- Topic Modeling Methods: Such as LDA, which automatically discovers prevalent themes across large response datasets without manual coding, saving significant time and effort.

- Clustering Algorithms: Like k-means or hierarchical clustering, which group responses based on similarity, helping identify respondent segments or common concerns.

- Automated Text Summarization: Summarizes lengthy response sets, highlighting key points and trends, which facilitates quick review and interpretation.

These AI techniques enable organizations to process tens of thousands of responses efficiently, so that insights from respondent feedback arrive in time to inform strategic decisions.
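To make the clustering item concrete, here is a minimal k-means sketch in plain Python. It assumes each respondent is represented by two numeric ratings and that the starting centroids are supplied by the caller. Both are simplifications for illustration; text responses would first need to be converted to vectors.

```python
import math

def kmeans(points, initial_centroids, max_iter=100):
    """Plain k-means: alternate assignment and centroid update until stable."""
    centroids = list(initial_centroids)
    assignments = None
    for _ in range(max_iter):
        # Assign every point to its nearest centroid (Euclidean distance).
        new_assignments = [
            min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        if new_assignments == assignments:
            break
        assignments = new_assignments
        # Move each centroid to the mean of its members.
        for c in range(len(centroids)):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return assignments, centroids

# Hypothetical (satisfaction, ease-of-use) ratings per respondent.
ratings = [(1, 1), (1, 2), (2, 1), (5, 5), (4, 5), (5, 4)]
labels, centers = kmeans(ratings, initial_centroids=[ratings[0], ratings[3]])
```

On this toy data the respondents split into a low-rating group and a high-rating group. Real datasets usually call for several random initializations and a check on the number of clusters.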

Preparing Survey Data for AI Analysis

Effective AI-driven analysis of survey responses begins with meticulous preparation of the raw data. This process involves cleaning, organizing, and formatting the data to ensure accuracy, consistency, and compatibility with analytical tools. Proper preparation not only enhances the quality of insights derived but also streamlines the overall analysis workflow, making it easier to detect patterns, trends, and sentiments within large datasets.

Preparing survey data entails a series of structured steps to transform unstructured or semi-structured responses into a clean, analyzable format. This foundation enables AI algorithms to process data efficiently, whether for quantitative analysis or for interpreting open-ended responses. The following sections outline the essential steps and best practices for organizing survey data for AI-based analysis.

Cleaning and Organizing Raw Survey Data

Clean data forms the backbone of reliable AI analysis. The initial step involves identifying and rectifying inconsistencies, inaccuracies, and incomplete responses. This process ensures that the dataset accurately reflects the surveyed population and reduces bias introduced by errors or outliers.

- Removing duplicates: Detect and eliminate repeated responses that may skew results, especially in online surveys where multiple submissions can occur.

- Addressing missing data: Decide on a strategy for handling incomplete responses, such as imputation, exclusion, or flagging for further review.

- Standardizing formats: Convert all data into uniform formats—dates, numerical values, and categorical responses—so that AI models can interpret them correctly.

- Filtering out irrelevant data: Remove responses that do not pertain to the survey objectives or contain irrelevant information.

After cleaning, organizing involves structuring the data into a logical framework that supports analysis. This may include categorizing responses, creating identifiers for respondents, and tagging data points with relevant metadata, facilitating efficient AI processing.
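The cleaning steps above might look like the following sketch. The field names, the two accepted date formats, and the choice to flag (rather than impute or drop) missing scores are assumptions for illustration.

```python
from datetime import datetime

# Made-up raw export with a duplicate, mixed date formats, and a missing score.
raw_rows = [
    {"respondent_id": "r1", "submitted": "2024-03-01", "score": "4"},
    {"respondent_id": "r1", "submitted": "2024-03-01", "score": "4"},  # duplicate
    {"respondent_id": "r2", "submitted": "01/03/2024", "score": " 5 "},
    {"respondent_id": "r3", "submitted": "2024-03-02", "score": ""},   # missing
]

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")  # assumed input formats

def parse_date(value):
    """Return an ISO date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    return None

def clean(rows):
    seen = set()
    cleaned = []
    for row in rows:
        key = (row["respondent_id"], row["submitted"], row["score"])
        if key in seen:  # drop exact duplicates
            continue
        seen.add(key)
        score_text = row["score"].strip()
        cleaned.append({
            "respondent_id": row["respondent_id"],
            "submitted": parse_date(row["submitted"]),
            "score": int(score_text) if score_text else None,
            "needs_review": not score_text,  # flag missing values for review
        })
    return cleaned

cleaned_rows = clean(raw_rows)
```

Whether to impute, exclude, or flag incomplete responses depends on the survey. Flagging is used here only because it loses no information.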

Coding Open-Ended Responses into Analyzable Formats

Open-ended responses offer rich qualitative insights but require transformation into structured data to be usable by AI models. Coding involves assigning codes or labels to textual data, enabling quantitative or semi-quantitative analysis while preserving nuanced meaning.

- Develop a coding scheme: Establish categories or themes based on the response content, such as sentiment, topics, or specific keywords.

- Manual coding: Human coders review responses and assign relevant codes, ensuring contextually accurate categorization, essential for complex or nuanced responses.

- Automated coding: Utilize Natural Language Processing (NLP) tools to identify themes, hashtags, or sentiment indicators, increasing efficiency for large datasets.

- Creating codebooks: Document definitions and examples for each code to ensure consistency across coders and datasets.

Structured coding allows open-ended responses to be summarized in formats compatible with statistical or machine learning algorithms, such as binary indicators, ordinal scales, or categorical variables.
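A very simple form of automated coding is keyword matching against a codebook, which yields the binary indicators mentioned above. The codebook contents and the name `code_response` are hypothetical. A real codebook would come from the coding scheme developed for the survey.

```python
import re

# Hypothetical codebook: each code maps to keywords that signal it.
CODEBOOK = {
    "pricing": {"price", "cost", "expensive", "cheap"},
    "support": {"support", "agent", "helpdesk"},
    "usability": {"easy", "confusing", "intuitive", "navigation"},
}

def code_response(text):
    """Return a 0/1 indicator per code, based on keyword presence."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    return {code: int(bool(tokens & keywords)) for code, keywords in CODEBOOK.items()}

coded = code_response("Support was great, but the price is too expensive.")
```

Keyword matching misses paraphrases and negation, so it is best treated as a first pass that human coders or a trained model then refine.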

Formatting Data into Tables for Efficient AI Ingestion

Proper tabular formatting is critical for seamless data ingestion by AI tools. Structuring data into tables with clear, consistent columns enhances readability and facilitates automated processing workflows.

Typically, tables should include up to four key columns that capture essential aspects of survey responses:

- Respondent ID: Unique identifiers for each participant to maintain response traceability.

- Survey Question or Variable: The specific question or attribute being measured, such as ‘Satisfaction Rating’ or ‘Open-Ended Feedback.’

- Response Value: The answer provided, formatted as numeric scores, categorical labels, or coded responses.

- Metadata or Additional Context: Supplementary information such as response timestamp, demographic data, or response quality indicators.

For example, a table for customer satisfaction survey responses might include columns for Respondent ID, Satisfaction Score (1-5), Feedback Comments (coded as positive, neutral, negative), and Response Date. This structured format allows AI to swiftly analyze sentiment trends, identify key themes, and generate actionable insights.

Ensuring consistent data entry, using standardized formats, and maintaining clear column definitions are vital for maximizing AI analysis accuracy and efficiency.
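One way to realize the four-column layout is a "long" table with one row per respondent and question, written and read with Python's `csv` module. The column names and sample values below are assumptions for the example.

```python
import csv
import io

# Four columns: respondent, question, value, and one metadata field.
FIELDS = ["respondent_id", "question", "response_value", "response_date"]

rows = [
    {"respondent_id": "r1", "question": "satisfaction_score",
     "response_value": "4", "response_date": "2024-03-01"},
    {"respondent_id": "r1", "question": "feedback_sentiment",
     "response_value": "positive", "response_date": "2024-03-01"},
    {"respondent_id": "r2", "question": "satisfaction_score",
     "response_value": "2", "response_date": "2024-03-02"},
]

# Write to an in-memory buffer; a real pipeline would use a file path.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=FIELDS)
writer.writeheader()
writer.writerows(rows)

# Read the table back and pull out one variable.
buffer.seek(0)
loaded = list(csv.DictReader(buffer))
scores = [int(r["response_value"]) for r in loaded
          if r["question"] == "satisfaction_score"]
```

The long format keeps the schema stable when questions are added, at the cost of storing every value as text until it is filtered and converted.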

Categorizing and Classifying Responses with AI

Effective categorization and classification of survey responses are vital for extracting meaningful insights from large datasets. AI-driven techniques enable automated, accurate, and scalable analysis, reducing manual effort and increasing consistency. Leveraging machine learning models trained specifically for response categorization allows organizations to sort data into relevant themes, sentiments, or topics swiftly. This process enhances the interpretability of survey results and supports more informed decision-making.

In this section, we explore how AI models can be trained for response categorization, outline procedures for labeling responses to improve classification accuracy, and present a sample table of response categories.

Training AI Models for Response Categorization

Training AI models to categorize survey responses involves several key steps that ensure the models can accurately distinguish between different response types. The process begins with selecting an appropriate machine learning algorithm for text classification, commonly support vector machines (SVM), random forests, or deep learning models such as transformers.

The training process includes:

- Data Collection: Gathering a representative sample of responses that cover the range of possible answers.

- Feature Extraction: Transforming textual responses into numerical vectors using techniques like TF-IDF, word embeddings, or transformer-based embeddings such as BERT.

- Model Training: Feeding labeled responses into the algorithm to learn the patterns associated with each category. During training, the model adjusts its parameters to minimize misclassification.

- Model Evaluation: Validating the model’s performance on a separate dataset to ensure it generalizes well to unseen responses.

- Deployment and Monitoring: Implementing the trained model within an analysis pipeline and continuously monitoring its accuracy, updating it with new data as needed to maintain high performance.

This approach ensures that the AI system adapts effectively to the nuances and diversity inherent in survey responses, providing a robust foundation for accurate classification.
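The training and evaluation steps can be sketched with a small multinomial Naive Bayes classifier written from scratch. Note the substitution: Naive Bayes is used here instead of the SVM, random forest, or transformer models named above only because it fits in a few dependency-free lines. The labeled examples are invented.

```python
import math
import re
from collections import Counter, defaultdict

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocabulary = {w for c in self.word_counts.values() for w in c}
        return self

    def predict(self, text):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, doc_count in self.class_counts.items():
            score = math.log(doc_count / total_docs)  # log prior
            total_words = sum(self.word_counts[label].values())
            for word in tokenize(text):
                if word not in self.vocabulary:
                    continue  # ignore words never seen in training
                count = self.word_counts[label][word] + 1
                score += math.log(count / (total_words + len(self.vocabulary)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label

# Hypothetical labeled responses, split into training and held-out sets.
train = [
    ("great service and friendly staff", "positive"),
    ("love the fast delivery", "positive"),
    ("terrible support and slow delivery", "negative"),
    ("the app is slow and buggy", "negative"),
]
held_out = [
    ("friendly staff, fast delivery", "positive"),
    ("slow and buggy app", "negative"),
]

model = NaiveBayes().fit([t for t, _ in train], [y for _, y in train])
accuracy = sum(model.predict(t) == y for t, y in held_out) / len(held_out)
```

Four training examples are far too few for a real model. The point is the shape of the workflow: fit on labeled data, then measure accuracy on responses the model has not seen.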

Labeling Responses to Enhance Classification Accuracy

Accurate labeling of responses is crucial for training effective AI classification models. Labels serve as the ground truth, guiding the AI in recognizing patterns associated with specific categories. The labeling process can be manual, semi-automated, or crowdsourced, depending on the dataset size and complexity.

Key procedures include:

- Establishing Clear Label Definitions: Defining explicit criteria for each response category to ensure consistency among annotators.

- Creating a Labeling Guide: Providing detailed instructions, examples, and edge cases to standardize the labeling process.

- Annotation Process: Assigning responses to human labelers or using annotation tools that facilitate efficient labeling while maintaining quality control.

- Quality Assurance: Implementing validation steps such as double annotation, consensus meetings, or inter-annotator agreement metrics, like Cohen’s Kappa, to minimize errors.

- Iterative Refinement: Updating labels and refining categories based on initial model performance and feedback, ensuring the labels accurately reflect the intended classifications.

Proper labeling enhances the model’s learning process, leading to increased accuracy and reliability in categorization results.
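Cohen's Kappa, mentioned under quality assurance, compares the observed agreement between two annotators with the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e). The sketch below computes it for two hypothetical annotators. The label lists are made up.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items."""
    n = len(labels_a)
    # Observed agreement: share of items with identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement from each annotator's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    categories = set(labels_a) | set(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in categories) / (n * n)
    if expected == 1:  # both annotators used a single identical label
        return 1.0
    return (observed - expected) / (1 - expected)

annotator_a = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg"]
annotator_b = ["pos", "pos", "neg", "pos", "pos", "neg", "neg", "neg"]
kappa = cohens_kappa(annotator_a, annotator_b)
```

Here the annotators agree on 6 of 8 items (0.75) while chance agreement is 0.5, giving a kappa of 0.5. Low values are usually a cue to tighten the label definitions or the labeling guide.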

Sample Response Categories and Examples

Below is a sample table illustrating typical response categories used in survey analysis, accompanied by example responses that exemplify each category. These examples demonstrate how responses are grouped, aiding in the development of classification models.

| Category | Example Responses |
|---|---|
| Positive Feedback | |
| Negative Feedback | |
| Suggestions for Improvement | |
| Neutral Responses | |

This structured approach to response categorization facilitates accurate training of AI models, enabling faster and more precise analysis of large-scale survey data. Proper labels and clear category definitions are fundamental to developing an effective AI-driven response classification system, ultimately leading to actionable insights and improved decision-making.

Visualizing Survey Data Using AI-Generated Charts and Graphs

Effective visualization plays a crucial role in transforming raw survey responses into clear, actionable insights. Utilizing AI tools to generate charts and graphs streamlines this process, enabling analysts to quickly interpret complex datasets. Visual representations not only facilitate better understanding for stakeholders but also enhance decision-making by illustrating trends, patterns, and correlations that may otherwise remain hidden within raw data.

As AI-driven visualization continues to evolve, it offers increasingly sophisticated and customized ways to present survey findings in an engaging and accessible manner.

Organizing survey data appropriately before visualization ensures that insights are accurately represented and easily interpretable. Proper data preparation involves structuring responses into multi-column formats, where each column corresponds to a specific question or response category. This organization simplifies the process of creating visualizations, as AI algorithms can readily access and analyze these standardized data tables. Additionally, clear data structuring minimizes errors and inconsistencies, resulting in more reliable and meaningful visual outputs.

Creating Visual Representations of Survey Insights

To craft impactful visualizations, it is essential to select the appropriate chart or graph type based on the nature of the data and the insights being communicated. Common choices include bar charts for comparing categories, pie charts for illustrating proportions, line graphs for tracking trends over time, and heatmaps for identifying patterns across multiple variables. AI tools can automate the selection process by analyzing data characteristics and recommending the most effective visualization formats.

When generating charts and graphs with AI, it is important to customize visual parameters to enhance clarity. This includes adjusting color schemes for better contrast, labeling axes and data points clearly, and ensuring that visual scales accurately reflect data ranges. Additionally, AI can help identify outliers or anomalies in the data, which can then be highlighted within visualizations to draw attention to specific insights. Incorporating interactive elements, where possible, allows users to explore data layers and gain deeper understanding.
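As a dependency-free illustration of a bar chart for comparing categories, the sketch below counts responses per category and renders labeled, proportionally scaled bars as text. The function name `text_bar_chart` and the sample sentiments are assumptions. A plotting library would normally produce the final graphic.

```python
from collections import Counter

def text_bar_chart(values, width=20):
    """Render category counts as labeled text bars, largest first."""
    counts = Counter(values)
    peak = max(counts.values())
    lines = []
    for label, count in sorted(counts.items(), key=lambda kv: (-kv[1], kv[0])):
        # Scale each bar relative to the largest category.
        bar = "#" * max(1, round(count / peak * width))
        lines.append(f"{label:<10} {bar} {count}")
    return lines

# Hypothetical coded sentiments from ten responses.
sentiments = ["positive"] * 6 + ["neutral"] * 3 + ["negative"] * 1
chart = text_bar_chart(sentiments)
print("\n".join(chart))
```

Sorting by count and printing the raw number next to each bar mirrors the advice above about clear labels and scales that reflect the data range.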

Designing Responsive HTML Tables and Infographics

Responsive HTML tables serve as foundational elements for displaying survey data in a structured manner, especially when detailed information needs to be presented alongside visualizations. These tables should be designed to adapt seamlessly across various devices, ensuring accessibility and readability. Strategies include utilizing flexible layouts, such as percentage-based widths, and leveraging media queries to optimize display on different screen sizes.

Infographics combine visual elements with concise textual information to communicate survey results effectively. Designing engaging infographics involves selecting relevant data points, visual themes, and icons that align with the survey’s context. It is vital to maintain a balance between visual appeal and informational clarity, avoiding clutter while emphasizing key insights. AI-powered tools can assist in automatically generating infographics based on datasets, ensuring consistency and professionalism in presentation.

Organizing Data Before Visualization into Multi-Column Formats

Proper data organization is fundamental for producing meaningful visualizations. Structuring responses into multi-column formats involves categorizing data into distinct fields such as respondent demographics, response categories, and response counts. This format facilitates efficient data analysis by AI algorithms, enabling rapid aggregation, filtering, and comparison of responses.

Before visualization, it is recommended to clean and normalize data to eliminate inconsistencies and duplicates. Multi-column data structures support this process by clearly delineating different data dimensions. For example, responses to a satisfaction survey can be organized into columns labeled “Respondent ID,” “Age,” “Region,” “Satisfaction Level,” and “Comments.” Such organization allows AI tools to easily generate insights, such as identifying regional differences in satisfaction or age-related response trends, which can then be visualized through tailored charts and graphs for maximum clarity.
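With the columns from the example above, a regional comparison reduces to a group-and-average step. The records and the helper name `mean_by` below are invented for illustration.

```python
from collections import defaultdict

# Hypothetical records using the column layout described above.
records = [
    {"respondent_id": "r1", "age": 34, "region": "North", "satisfaction": 4, "comments": "Quick delivery"},
    {"respondent_id": "r2", "age": 52, "region": "North", "satisfaction": 5, "comments": ""},
    {"respondent_id": "r3", "age": 27, "region": "South", "satisfaction": 2, "comments": "Late order"},
    {"respondent_id": "r4", "age": 45, "region": "South", "satisfaction": 3, "comments": ""},
]

def mean_by(rows, group_key, value_key):
    """Average value_key within each distinct value of group_key."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_key]].append(row[value_key])
    return {group: sum(vals) / len(vals) for group, vals in groups.items()}

by_region = mean_by(records, "region", "satisfaction")
```

The resulting mapping of region to mean satisfaction is exactly the shape a bar chart needs, which is why consistent columns pay off at the visualization stage.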

Automating Reporting and Summarizing Responses

In large-scale surveys, manual analysis and reporting can be time-consuming and prone to oversight. Automated reporting leverages AI’s capabilities to efficiently generate concise summaries and comprehensive reports from extensive response datasets, enabling organizations to quickly identify key insights and trends. This process not only accelerates decision-making but also enhances consistency and accuracy in report generation.

Using AI-driven tools for summarizing responses involves sophisticated natural language processing (NLP) algorithms that can interpret, distill, and present essential information from vast amounts of textual data. These automated summaries provide stakeholders with clear, actionable insights without the need to sift through individual responses, making the entire analysis workflow more efficient and scalable.

Generating Summaries of Large Response Sets Automatically

Efficiently summarizing large survey datasets requires structured procedures that AI systems can execute seamlessly. The typical steps involve data preprocessing, response clustering, key phrase extraction, and sentiment analysis. These steps enable AI to identify dominant themes, common sentiments, and notable outliers within the responses, providing a high-level overview of the data.

For example, AI algorithms can process thousands of open-ended responses to extract frequently mentioned keywords and phrases, which are then aggregated into a summarized report. This process often involves the use of advanced NLP techniques such as topic modeling and semantic analysis, which help to reveal underlying patterns that might not be immediately apparent through manual review.
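A frequency count over words and two-word phrases is the simplest version of the extraction step described above. It is a stand-in for illustration, not topic modeling or semantic analysis. The stopword list and sample responses are assumptions.

```python
import re
from collections import Counter

# Hypothetical stopword list for the example.
STOPWORDS = {"the", "was", "too", "but", "a", "is", "and"}

def top_phrases(responses, n=3):
    """Count single words and adjacent word pairs, ignoring stopwords."""
    counts = Counter()
    for text in responses:
        tokens = [t for t in re.findall(r"[a-z]+", text.lower())
                  if t not in STOPWORDS]
        counts.update(tokens)
        # Pairs are formed after stopword removal, so they may skip a word.
        counts.update(" ".join(pair) for pair in zip(tokens, tokens[1:]))
    return counts.most_common(n)

responses = [
    "The delivery time was too long",
    "Delivery time keeps getting worse",
    "Great prices but slow delivery",
]
top = top_phrases(responses)
```

Even this crude count surfaces "delivery" and "delivery time" as the dominant concern in the toy data, which is the kind of signal a summary report would lead with.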

Producing Concise Reports Highlighting Key Trends

AI can generate succinct, well-structured reports that emphasize the most significant insights from survey data. These reports typically include visual summaries like charts and graphs, as well as textual highlights that capture trends, sentiment shifts, or emerging themes. The goal is to deliver a clear narrative that guides decision-makers to understand critical findings rapidly.

For instance, an AI-generated report may feature a summary paragraph indicating a major increase in positive sentiment toward a new product feature, supported by a bar chart showing sentiment distribution over time. Key phrases such as “improved user satisfaction” or “concerns about usability” can be extracted and presented as bullet points or side notes for quick reference.

HTML Report Templates with Table Summaries and Blockquote Extracts

Below are example sections that can be integrated into automated reports to effectively communicate insights:

| Summary Section | Content |
|---|---|
| Key Findings | AI-generated summaries often include the top three to five key insights derived from response data, such as predominant sentiments, recurring themes, or notable shifts compared to previous surveys. |
| Statistical Overview | Tables presenting response distributions, average ratings, or sentiment scores provide quantitative context that complements textual summaries, enabling comprehensive understanding at a glance. |
| Highlighted Quotes | Selected respondent comments are displayed within blockquotes to illustrate specific points or sentiments, adding qualitative depth to the report. |

Effective automated reporting synthesizes quantitative data and qualitative insights, providing stakeholders with a comprehensive yet concise overview that facilitates data-driven decisions.
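The three report sections can be assembled into an HTML fragment with a small template function. The sketch below is one possible layout, with hypothetical findings, statistics, and quotes. All text is escaped before insertion because respondent comments are untrusted input.

```python
from html import escape

def render_report(findings, stats, quotes):
    """Build an HTML fragment with findings, a stats table, and quotes."""
    parts = ["<section>", "<h2>Key Findings</h2>", "<ul>"]
    parts += [f"<li>{escape(item)}</li>" for item in findings]
    parts += ["</ul>", "<h2>Statistical Overview</h2>", "<table>",
              "<tr><th>Metric</th><th>Value</th></tr>"]
    parts += [f"<tr><td>{escape(name)}</td><td>{escape(str(value))}</td></tr>"
              for name, value in stats.items()]
    parts += ["</table>", "<h2>Highlighted Quotes</h2>"]
    parts += [f"<blockquote>{escape(q)}</blockquote>" for q in quotes]
    parts.append("</section>")
    return "\n".join(parts)

# Hypothetical inputs produced by earlier analysis steps.
report_html = render_report(
    findings=["Positive sentiment rose after the new feature launch"],
    stats={"Responses": 1200, "Average rating": 4.1},
    quotes=["Fast & friendly support"],
)
```

Keeping the template separate from the analysis makes it easy to regenerate the same report as new responses arrive.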

Ensuring Data Privacy and Ethical Use in AI Analysis

Maintaining respondent confidentiality and adhering to ethical standards are fundamental when employing AI for survey response analysis. As organizations increasingly leverage AI to derive insights from sensitive data, implementing best practices for privacy protection and ethical responsibility ensures trustworthiness, compliance with regulations, and respect for respondents’ rights. This section explores essential techniques and steps to safeguard data privacy and promote ethical AI practices throughout the survey analysis process.

AI-driven survey analysis involves processing vast amounts of personal and potentially sensitive information. Without proper safeguards, there is a risk of exposing confidential data or misusing information, which can lead to legal repercussions and damage an organization’s reputation. Therefore, establishing clear protocols and adhering to best practices helps ensure that AI tools are used responsibly, ethically, and in compliance with data protection standards such as GDPR or CCPA.

Best Practices for Maintaining Respondent Confidentiality During AI Processing

Ensuring the confidentiality of survey participants involves implementing a combination of technical measures and organizational policies. These practices are crucial for protecting data from unauthorized access or breaches during AI analysis:

- Restrict Access to Data: Limit data access to authorized personnel only, employing role-based permissions and secure authentication protocols.

- Secure Data Storage: Use encrypted storage solutions for raw and processed data to prevent unauthorized retrieval or tampering.

- Employ Secure Data Transfer: When transmitting data for analysis, utilize encrypted channels such as TLS to safeguard information in transit.

- Implement Audit Trails: Maintain logs of data access and processing activities to enhance accountability and facilitate breach detection.

- Regular Security Training: Educate team members on data protection standards and potential security risks associated with AI tools.

Methods for Anonymizing Sensitive Data Before Analysis

Anonymization involves modifying data to prevent the identification of individual respondents while preserving the dataset’s analytical value. Implementing effective anonymization techniques is essential for protecting privacy.

Common methods include:

| Technique | Description | Application Examples |
|---|---|---|
| Data Masking | Obscuring identifiable information by replacing it with fictitious or masked values. | Replacing real names with pseudonyms in response datasets. |
| Aggregation | Combining individual responses into grouped data to prevent identification. | Reporting age ranges instead of exact ages or aggregating responses by geographic regions. |
| Data Suppression | Removing specific data points or fields that could lead to identification. | Omitting IP addresses or detailed demographic data from analysis outputs. |
| Perturbation | Adding statistical noise to data to mask individual responses while maintaining overall data trends. | Applying slight variations to income or expenditure figures in surveys. |

Combining these methods ensures that sensitive respondent identifiers are adequately protected without compromising the integrity of the analysis.
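The four techniques in the table can be combined in a single pass over each record, as in the sketch below. The salt value, field names, band width, and noise scale are all hypothetical. Note that a salted hash is a form of pseudonymization, so the salt must be protected or re-identification becomes possible.

```python
import hashlib
import random

SALT = "replace-with-a-secret-salt"  # hypothetical; store securely in practice

def pseudonym(name):
    """Masking: replace a name with a salted-hash pseudonym."""
    digest = hashlib.sha256((SALT + name).encode("utf-8")).hexdigest()
    return "resp_" + digest[:8]

def age_band(age, width=10):
    """Aggregation: report an age range instead of the exact age."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"

def perturb(value, scale, rng):
    """Perturbation: add zero-mean Gaussian noise to a numeric value."""
    return value + rng.gauss(0, scale)

def anonymize(record, rng):
    return {
        "respondent": pseudonym(record["name"]),
        "age_band": age_band(record["age"]),
        "income": round(perturb(record["income"], scale=500, rng=rng)),
        # Suppression: ip_address is intentionally not copied over.
    }

rng = random.Random(0)  # seeded only to make the example repeatable
raw = {"name": "Jane Doe", "age": 37, "income": 52000, "ip_address": "203.0.113.7"}
safe = anonymize(raw, rng)
```

The noise scale trades privacy against accuracy: larger values hide individuals better but distort averages computed on small groups.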

Steps to Verify the Ethical Application of AI Tools in Survey Analysis

Applying AI ethically requires deliberate verification processes to prevent misuse and ensure alignment with ethical standards. The following steps are recommended to uphold responsible AI practices:

- Establish Ethical Guidelines and Policies: Develop clear policies that define acceptable AI usage, focusing on privacy, fairness, and transparency.

- Conduct Ethical Impact Assessments: Evaluate how AI models and analysis methods may affect respondent privacy, data bias, and overall fairness before deployment.

- Perform Bias and Fairness Checks: Regularly audit AI algorithms to identify and mitigate biases that could lead to unfair or discriminatory outcomes.

- Obtain Informed Consent: Ensure that survey participants are fully aware of how their data will be used, including AI-driven analysis processes, and obtain explicit consent.

- Implement Transparency Measures: Clearly communicate the AI methods utilized, the purpose of data analysis, and the measures in place to protect privacy to stakeholders and respondents.

- Monitor and Review AI Outcomes: Continuously monitor outputs for ethical compliance, and establish protocols for addressing potential ethical concerns or unintended consequences.
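One simple bias check is to compare a classifier's accuracy across respondent groups and look at the gap. The audit records below (group, true label, predicted label) are fabricated for illustration, and per-group accuracy is only one of several fairness measures an audit might use.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Share of correct predictions within each respondent group."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, true_label, predicted in records:
        totals[group] += 1
        hits[group] += int(true_label == predicted)
    return {group: hits[group] / totals[group] for group in totals}

# Hypothetical audit sample: (age group, human label, model prediction).
audit = [
    ("18-34", "pos", "pos"), ("18-34", "neg", "neg"),
    ("18-34", "pos", "pos"), ("18-34", "neg", "neg"),
    ("55+", "pos", "neg"), ("55+", "neg", "neg"),
    ("55+", "pos", "pos"), ("55+", "neg", "pos"),
]
rates = accuracy_by_group(audit)
gap = max(rates.values()) - min(rates.values())
```

A large gap, as in this toy sample, would be a reason to examine the training data for under-represented groups before relying on the model's output.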

“Ethical AI in survey analysis is not a one-time effort but an ongoing commitment to respect privacy, promote fairness, and uphold trust.”

Comparing AI Tools for Survey Response Analysis

Choosing the appropriate artificial intelligence platform for survey response analysis depends on several factors, including the size and complexity of the survey data, desired features, and budget constraints. With a multitude of AI tools available, understanding their core capabilities and limitations is essential for making informed decisions that align with your analytical needs.

This section provides a structured comparison of popular AI solutions, criteria for selecting the most suitable platform based on specific survey requirements, and an overview of each tool’s advantages and limitations.

Popular AI Platforms for Survey Response Analysis

Below is a comparison table highlighting some of the leading AI tools used in survey response analysis, focusing on key features relevant for different survey scenarios:

| Platform | Key Features | Ease of Use | Customization Options | Integration Capabilities | Pricing |
|---|---|---|---|---|---|
| IBM Watson Studio | Advanced NLP, data visualization, model training, automation tools | Moderate – requires some technical knowledge | High – customizable models and workflows | Extensive integrations with cloud and on-premise systems | Subscription-based, enterprise pricing varies |
| Google Cloud AI Platform | Scalable machine learning, NLP, AutoML, pre-trained models | Moderate – suitable for users with cloud experience | High – tailored models and APIs | Seamless integration with Google Cloud services | Pay-as-you-go, scalable |
| Microsoft Azure Cognitive Services | Natural language processing, sentiment analysis, translation | Easy to moderate – user-friendly interfaces | Moderate – customizable via APIs and SDKs | Strong integration with other Azure services and external systems | Subscription-based, tiered pricing |
| MonkeyLearn | Text analysis, classification, sentiment analysis, easy-to-use interface | High – designed for non-technical users | Moderate – custom classifiers and workflows | API integrations, Google Sheets, Slack, and more | Subscription plans, free tier available |
| RapidMiner | Data mining, machine learning, automation, visual workflows | Moderate – visual interface simplifies processes | High – extensive options for customization | Connects with various data sources and scripting tools | Subscription-based with free tier |

Criteria for Selecting AI Solutions Based on Survey Size and Complexity

Choosing an AI platform should be driven by the specific needs of your survey project, including data volume, response complexity, and desired analysis depth. Consider the following criteria to determine the most appropriate solution:

- Survey Size: For small to medium surveys with a few hundred responses, user-friendly tools like MonkeyLearn or RapidMiner may suffice. In contrast, large-scale surveys with thousands or millions of responses benefit from scalable cloud solutions like Google Cloud AI or IBM Watson.

- Data Complexity: Simple sentiment or keyword analysis can be handled efficiently by platforms with pre-trained models. Complex tasks such as multi-label classification, nuanced sentiment detection, or custom modeling require platforms with advanced customization options like IBM Watson Studio or Azure Cognitive Services.

- Integration Needs: Consider whether the platform can seamlessly connect with your existing data management systems, survey tools, or dashboards.

- Technical Expertise: Evaluate the available skillset within your team. Non-technical users may prefer intuitive, drag-and-drop interfaces, whereas technical teams might opt for platforms offering extensive API access and customization capabilities.

- Budget Constraints: Balance the platform’s features with your budget, noting that enterprise solutions tend to be more expensive but offer greater flexibility and scalability.

Advantages and Limitations of Each Tool

Understanding the strengths and weaknesses of each AI platform helps in aligning them with your survey analysis goals:

IBM Watson Studio:

- Advantages:
  - Robust NLP and machine learning capabilities suitable for complex analyses
  - Highly customizable workflows and models
  - Strong enterprise support and integration options
- Limitations:
  - Requires technical expertise to maximize features
  - Higher cost for advanced functionalities

Google Cloud AI Platform:

- Advantages:
  - Scalable and flexible, ideal for large datasets
  - Access to Google’s advanced AI models and AutoML tools
  - Excellent integration with other Google services
- Limitations:
  - Can be complex for users unfamiliar with cloud platforms
  - Costs can escalate with scale and usage

Microsoft Azure Cognitive Services:

- Advantages:
  - Simple API-based approach suitable for quick deployment
  - Good for integrating sentiment analysis and language understanding
  - Extensive documentation and support
- Limitations:
  - Less customizable compared to platforms like Watson
  - Pricing can become significant with high-volume analysis

MonkeyLearn:

- Advantages:
  - User-friendly interface designed for non-technical users
  - Quick setup for common text analysis tasks
  - Affordable pricing models with free options
- Limitations:
  - Less flexibility for highly specialized or complex analyses
  - Limited customization compared to enterprise platforms

RapidMiner:

- Advantages:
  - Visual workflows simplify complex data processes
  - Supports extensive data sources and analysis types
  - Suitable for both beginners and experienced data scientists
- Limitations:
  - Steeper learning curve for advanced features
  - Cost may be prohibitive for small organizations

End of Discussion

In conclusion, harnessing AI for survey response analysis offers a powerful toolkit for extracting actionable insights from large datasets. By understanding the available methods and tools, organizations can improve their decision-making processes and achieve more precise and meaningful results. Embracing these technologies paves the way for smarter, faster, and more ethical survey analysis practices in the future.