Automating data analysis with AI offers a transformative approach to handling complex datasets, enabling businesses and analysts to derive insights faster and more consistently than manual methods allow. By integrating artificial intelligence into data workflows, organizations can reduce manual effort, minimize errors, and accelerate decision-making.

This comprehensive overview covers essential steps from setting up AI-driven systems and preprocessing data to implementing algorithms, automating workflows, and visualizing results. Understanding these components empowers users to leverage AI effectively for smarter data analysis.

Overview of Automating Data Analysis with AI

Automating data analysis with artificial intelligence (AI) has revolutionized the way organizations handle vast amounts of information. By leveraging AI, businesses can streamline their data workflows, reduce manual effort, and extract actionable insights more rapidly and accurately. This process involves integrating advanced algorithms that can process, interpret, and visualize data with minimal human intervention, leading to increased efficiency and improved decision-making capabilities.

The fundamental concept behind data automation using AI is to use machine learning models and intelligent systems that learn from data patterns, identify anomalies, predict trends, and generate reports automatically. This accelerates analysis and can improve precision by reducing the errors common in manual processes, although models can also inherit biases from the data they are trained on. The typical workflow for integrating AI into data analysis involves data collection, preprocessing, model training, automation deployment, and continuous monitoring, so that the system can adapt as new data arrives and patterns evolve.

Fundamental Concepts of Data Automation Using AI

At its core, data automation with AI relies on the deployment of algorithms capable of learning from datasets through supervised, unsupervised, or reinforcement learning techniques. These models are trained to recognize complex patterns, classify data points, forecast future outcomes, and automate repetitive tasks such as data cleaning, categorization, and report generation. The integration of natural language processing (NLP) enables AI to interpret unstructured data like text and speech, further broadening automation capabilities.

Typical Workflow in AI-Driven Data Analysis

The workflow of integrating AI into data analysis is structured and iterative, typically involving these key stages:

- Data Collection: Gathering relevant data from various sources such as databases, APIs, or sensor inputs.

- Data Preprocessing: Cleaning, transforming, and normalizing raw data to ensure quality and consistency for analysis.

- Model Development: Selecting appropriate AI models, training them with labeled or unlabeled data, and validating their performance.

- Automation Deployment: Implementing trained models within automated pipelines to perform ongoing analysis tasks without manual intervention.

- Monitoring and Maintenance: Continuously evaluating model performance, updating with new data, and adjusting algorithms as needed to maintain accuracy and relevance.

Benefits of Automating Data Analysis with AI

Automating data analysis through AI offers several significant advantages:

- Enhanced Efficiency: AI systems can process large datasets faster than manual methods, significantly reducing turnaround times for generating insights.

- Improved Accuracy: Automated models minimize human errors and ensure consistent application of analytical procedures, leading to more reliable results.

- Cost Savings: Reduced need for extensive manual labor lowers operational costs associated with data processing and analysis.

- Scalability: AI-driven systems can easily scale to accommodate increasing data volumes without proportional increases in resource allocation.

- Real-Time Insights: Automation enables near-instant data processing, facilitating timely decision-making in dynamic environments such as finance or healthcare.

Key AI Tools for Data Automation

Numerous AI tools facilitate data automation by offering specialized functions for data handling, modeling, and visualization. The table below outlines several widely used tools, their core features, and typical use cases:

| Tool Name | Features | Use Cases |
|---|---|---|
| RapidMiner | End-to-end data science platform with visual workflows, automated machine learning, and integration capabilities. | Data preprocessing, predictive modeling, and deploying machine learning models in business analytics. |
| Google Cloud AutoML (now part of Vertex AI) | Automates model training with minimal coding; supports vision, NLP, and structured data tasks on scalable cloud infrastructure. | Custom model development for image recognition, text classification, and structured data analysis in enterprise environments. |
| DataRobot | Automated machine learning platform with model deployment, monitoring, and interpretability tools. | Rapid prototyping of predictive models, fraud detection, customer segmentation, and operational analytics. |
| KNIME Analytics Platform | Open-source data analytics, reporting, and integration platform with extensive extension support for AI workflows. | Data mining, workflow automation, and collaborative analytics across different data sources. |
| Azure Machine Learning | Comprehensive cloud-based platform with automated ML, experiment tracking, and deployment options. | Developing and operationalizing AI solutions for business applications, such as predictive maintenance and sales forecasting. |

Setting Up AI-Driven Data Automation Systems

Implementing an effective AI-driven data automation system requires meticulous preparation of data sources, careful selection of models aligned with data characteristics and analysis objectives, and thoughtful configuration to ensure scalability and robustness. This process lays the foundation for accurate, efficient, and scalable automated insights that can significantly enhance decision-making processes across various domains.

Establishing a reliable and efficient automation pipeline involves multiple coordinated steps. From data collection and cleaning to selecting suitable AI models and system configuration, each stage plays a pivotal role in ensuring the system operates seamlessly under real-world conditions. By adhering to best practices, organizations can maximize the potential of AI-driven data analysis while maintaining flexibility for future expansion and adaptation.

Preparing Data Sources for AI Processing

The quality and structure of data sources are critical for effective AI processing. Proper preparation involves several key steps to ensure data is accurate, consistent, and ready for analysis:

- Data Collection: Gather data from diverse sources such as databases, cloud storage, APIs, or IoT devices. Ensure data is comprehensive and relevant to the analysis goals.

- Data Cleaning: Remove duplicates, correct errors, and handle missing values using techniques like imputation or filtering. Consistent data improves model accuracy.

- Data Transformation: Normalize or standardize data to bring features to comparable scales. Convert categorical data into numerical formats using one-hot encoding or label encoding.

- Data Integration: Combine data from multiple sources ensuring alignment in formats, units, and time frames. Data integration creates a unified dataset suitable for analysis.

- Data Validation: Verify data integrity through statistical summaries and visualizations to detect anomalies or inconsistencies prior to processing.
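As an illustration, the cleaning, transformation, integration, and validation steps above might look like the following pandas sketch. It assumes two hypothetical source tables, `orders` and `customers`, that share a `customer_id` key. The column names, the median imputation, and the validation rules are illustrative choices, not prescriptions.

```python
import pandas as pd

def prepare_orders(orders: pd.DataFrame, customers: pd.DataFrame) -> pd.DataFrame:
    """Clean, integrate, and validate two hypothetical source tables."""
    # Data cleaning: drop exact duplicate rows, then fill missing amounts with the median.
    orders = orders.drop_duplicates().copy()
    orders["amount"] = orders["amount"].fillna(orders["amount"].median())

    # Data transformation: parse date strings into a consistent datetime type.
    orders["order_date"] = pd.to_datetime(orders["order_date"])

    # Data integration: left-join customer attributes; validate="many_to_one"
    # raises an error if customer_id is not unique in the customers table.
    merged = orders.merge(customers, on="customer_id", how="left", validate="many_to_one")

    # Data validation: fail fast on obvious integrity problems.
    if merged["amount"].lt(0).any():
        raise ValueError("negative order amounts found")
    if merged["region"].isna().any():
        raise ValueError("orders reference unknown customers")
    return merged

# Hypothetical example data: rows 0 and 1 are duplicates, and one amount is missing.
orders = pd.DataFrame({
    "customer_id": [1, 1, 2, 2],
    "order_date": ["2024-01-05", "2024-01-05", "2024-01-07", "2024-02-01"],
    "amount": [100.0, 100.0, None, 40.0],
})
customers = pd.DataFrame({"customer_id": [1, 2], "region": ["North", "South"]})
clean = prepare_orders(orders, customers)
print(clean)
```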

Selecting Appropriate AI Models Based on Data Type and Goals

Matching AI models to data types and analysis objectives is essential for obtaining meaningful insights. The selection process considers the nature of the data, complexity of the task, and desired outcomes:

- Supervised Learning Models: Ideal for labeled datasets where the goal is prediction or classification. Examples include regression models for numerical output and classification algorithms like Random Forest or Support Vector Machines.

- Unsupervised Learning Models: Suitable for unlabeled data to uncover hidden patterns, clusters, or anomalies. Techniques include K-Means clustering, Principal Component Analysis (PCA), and Autoencoders.

- Semi-supervised and Reinforcement Learning: Applied in cases where limited labeled data is available or dynamic decision environments exist, such as recommendation systems or game-playing AI.

- Model Complexity and Interpretability: Balance the complexity of the model with the need for transparency. For instance, linear models offer interpretability, while deep learning models excel at capturing complex patterns but require more resources.

Recommendation: Conduct pilot testing with multiple models to evaluate performance metrics such as accuracy, precision, recall, or F1-score, ensuring the chosen model aligns with specific analysis goals and data properties.
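A pilot comparison like this can be automated with scikit-learn's cross-validation utilities. The sketch below uses a synthetic binary classification dataset as a stand-in for real labeled data. It ranks three candidate models by mean 5-fold F1 score. The choice of models, metric, and fold count are assumptions to adapt to your own goals.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.svm import SVC

# Synthetic stand-in for a labeled business dataset (assumption: binary target).
X, y = make_classification(n_samples=500, n_features=20, random_state=0)

candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "random_forest": RandomForestClassifier(n_estimators=200, random_state=0),
    "svm": SVC(),
}

# Mean 5-fold cross-validated F1 score for each candidate; higher is better.
scores = {
    name: cross_val_score(model, X, y, cv=5, scoring="f1").mean()
    for name, model in candidates.items()
}
best = max(scores, key=scores.get)

for name, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: mean F1 = {score:.3f}")
print("selected:", best)
```

Swapping `scoring="f1"` for `"precision"`, `"recall"`, or `"accuracy"` changes the selection criterion. Pick whichever metric reflects the cost of errors in your application.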

Stages from Data Collection to Automated Insights

The process from initial data collection to generating automated insights involves several interconnected stages. The table below summarizes them in sequence, with each stage feeding the next:

| Stage | Description |
|---|---|
| Data Collection | Gather raw data from various sources such as databases, sensors, or third-party APIs. |
| Data Cleaning & Validation | Remove errors, handle missing data, and verify data integrity to ensure quality. |
| Data Transformation & Integration | Normalize, encode, and combine datasets into a unified format suitable for analysis. |
| Model Selection & Training | Choose appropriate AI models, train them on prepared data, and validate performance. |
| Deployment & Automation | Implement models within automated pipelines, integrating with data workflows for real-time or batch processing. |
| Insight Generation & Reporting | Analyze model outputs to generate actionable insights through dashboards or alerts. |

Best Practices for Configuring AI Systems for Scalability and Robustness

Designing AI systems for scalability and robustness ensures they can handle increasing data volumes and evolving business needs without compromising performance. Key practices include:

- Modular Architecture: Develop systems with modular components allowing independent updates and maintenance, facilitating scalability.

- Automated Monitoring: Implement continuous monitoring of system performance and data quality to detect anomalies early and trigger alerts for adjustments.

- Resource Optimization: Use distributed processing frameworks such as Apache Spark, together with container orchestration platforms such as Kubernetes, to manage large-scale data processing efficiently.

- Model Retraining & Updates: Establish regular retraining schedules to incorporate new data, ensuring models remain accurate and relevant.

- Redundancy & Fault Tolerance: Design systems with backup processes and failover mechanisms to minimize downtime and data loss.

- Security & Compliance: Incorporate data security measures and adhere to regulatory standards to maintain system integrity and trustworthiness.

Data Preprocessing for Automated AI Analysis

Effective data preprocessing is a foundational step in automating AI-driven data analysis. Raw data, often sourced from diverse systems, can include inconsistencies, missing values, and noise that hinder accurate modeling. Preparing data through systematic cleaning and transformation ensures that AI models receive high-quality inputs, leading to more reliable insights and improved performance. Proper preprocessing not only enhances model accuracy but also reduces computational overhead and facilitates smoother automation workflows.

In this section, we explore essential techniques for cleaning and transforming raw datasets, methods for feature engineering to boost model effectiveness, and a structured guide to preparing datasets for seamless automation. These practices are vital for establishing a robust data pipeline that supports continuous and efficient AI analysis.

Cleaning and Transforming Raw Data for AI Consumption

To optimize raw data for AI analysis, it is crucial to address inconsistencies, inaccuracies, and irrelevant information. Data cleaning involves identifying and correcting errors, handling missing values, and removing duplicates. Data transformation processes convert data into formats suitable for modeling, such as normalization, encoding categorical variables, and scaling numerical features. These steps ensure that the data aligns with the assumptions of different algorithms and reduces the likelihood of bias or skewed results.
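In Python, one common way to bundle these cleaning and transformation steps is a scikit-learn `ColumnTransformer`. The sketch below applies median imputation and standard scaling to numeric columns and one-hot encoding to a categorical column. The small DataFrame and its column names are hypothetical.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical raw data with a missing numeric value and a categorical column.
raw = pd.DataFrame({
    "age": [34, np.nan, 52, 23],
    "income": [48000, 61000, 75000, 39000],
    "segment": ["retail", "enterprise", "retail", "smb"],
})

numeric = Pipeline([
    ("impute", SimpleImputer(strategy="median")),  # fill gaps with the column median
    ("scale", StandardScaler()),                   # rescale to zero mean, unit variance
])
# Categories unseen during fit are encoded as all zeros instead of raising an error.
categorical = OneHotEncoder(handle_unknown="ignore")

preprocess = ColumnTransformer([
    ("num", numeric, ["age", "income"]),
    ("cat", categorical, ["segment"]),
])

X = preprocess.fit_transform(raw)
print(X.shape)  # (4, 5): 2 scaled numeric columns + 3 one-hot columns
```

Because the fitted `preprocess` object stores the learned medians, means, and categories, the same transformation can be reapplied to new batches with `preprocess.transform(...)`. This is what makes it suitable for an automated pipeline.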

Techniques for Feature Engineering to Improve Model Performance

Feature engineering enhances the predictive power of models by creating new features or modifying existing ones to better capture underlying patterns. Techniques include creating interaction terms, binning continuous variables, extracting date or time components, and applying dimensionality reduction methods such as Principal Component Analysis (PCA). Well-crafted features can significantly improve model accuracy, interpretability, and robustness, making them indispensable in automated data analysis workflows.
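Several of these techniques fit in a few lines of pandas. The sketch below uses a hypothetical transactions table to extract date and time components, build an interaction term (price × quantity), and bin a continuous variable. The bin edges and labels are arbitrary choices for illustration.

```python
import pandas as pd

# Hypothetical transactions table; column names are illustrative.
df = pd.DataFrame({
    "timestamp": pd.to_datetime(["2024-03-01 09:15", "2024-03-02 18:40", "2024-03-04 12:05"]),
    "price": [20.0, 35.0, 12.5],
    "quantity": [3, 1, 8],
})

# Date/time components: day of week (Monday=0) and hour of day.
df["day_of_week"] = df["timestamp"].dt.dayofweek
df["hour"] = df["timestamp"].dt.hour

# Interaction term: revenue combines two raw features into one.
df["revenue"] = df["price"] * df["quantity"]

# Binning: map a continuous variable onto labeled ranges (0, 2], (2, 5], (5, inf).
df["quantity_band"] = pd.cut(
    df["quantity"], bins=[0, 2, 5, float("inf")], labels=["low", "medium", "high"]
)

print(df[["day_of_week", "hour", "revenue", "quantity_band"]])
```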

Step-by-Step Guide for Preparing Datasets Before Automation

- Data Collection: Gather raw data from all relevant sources ensuring completeness and relevance.

- Data Inspection: Explore the dataset to identify missing values, outliers, and inconsistencies.

- Data Cleaning: Remove duplicates, correct errors, and handle missing data through imputation or removal.

- Data Transformation: Normalize or scale numerical data, encode categorical variables (e.g., one-hot encoding), and convert data types as needed.

- Feature Engineering: Create new features, select relevant features, and reduce dimensionality to improve model inputs.

- Data Partitioning: Split the dataset into training, validation, and testing subsets to enable robust model evaluation.

- Validation and Finalization: Verify the integrity of the preprocessed data before integrating it into automated workflows.
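The data partitioning step can be done with two calls to scikit-learn's `train_test_split`. The sketch below produces a roughly 70/15/15 train/validation/test split on placeholder data. The proportions and the use of stratification are assumptions; stratifying keeps class proportions similar across the subsets.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Placeholder features and binary labels standing in for a prepared dataset.
rng = np.random.default_rng(42)
X = rng.normal(size=(1000, 5))
y = rng.integers(0, 2, size=1000)

# First hold out 30% of rows, then split that holdout evenly into validation and test.
X_train, X_tmp, y_train, y_tmp = train_test_split(
    X, y, test_size=0.30, stratify=y, random_state=42
)
X_val, X_test, y_val, y_test = train_test_split(
    X_tmp, y_tmp, test_size=0.50, stratify=y_tmp, random_state=42
)

print(len(X_train), len(X_val), len(X_test))
```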

Common Preprocessing Techniques and Their Purposes

| Technique | Purpose |
|---|---|
| Missing Value Imputation | Fills in or estimates missing data points to maintain dataset completeness and avoid the bias that can result from simply dropping incomplete records. |
| Data Normalization/Scaling | Standardizes data ranges to improve model convergence and performance, especially for algorithms sensitive to data scale. |
| Outlier Detection and Removal | Identifies anomalies that may skew analysis, allowing for correction or exclusion to enhance model robustness. |
| Encoding Categorical Variables | Converts non-numeric data into numerical formats, facilitating algorithm compatibility and effective learning. |
| Dimensionality Reduction | Reduces feature space complexity, improves computational efficiency, and mitigates multicollinearity issues. |
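Outlier detection can be done in many ways. One widely used rule of thumb, shown here as an example rather than the only option, is Tukey's IQR fences: flag values more than 1.5 interquartile ranges below the first quartile or above the third. The sample series below is made up.

```python
import pandas as pd

def iqr_outlier_mask(s: pd.Series, k: float = 1.5) -> pd.Series:
    """Return True where a value lies outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, q3 = s.quantile(0.25), s.quantile(0.75)
    iqr = q3 - q1
    return (s < q1 - k * iqr) | (s > q3 + k * iqr)

values = pd.Series([10, 12, 11, 13, 12, 95, 11, 10])
mask = iqr_outlier_mask(values)

print(values[mask].tolist())  # [95]
cleaned = values[~mask]       # exclude flagged points (or review them instead)
```

Whether to drop, cap, or manually review flagged points depends on the domain. A sudden spike might be a data-entry error or a genuine event worth investigating.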

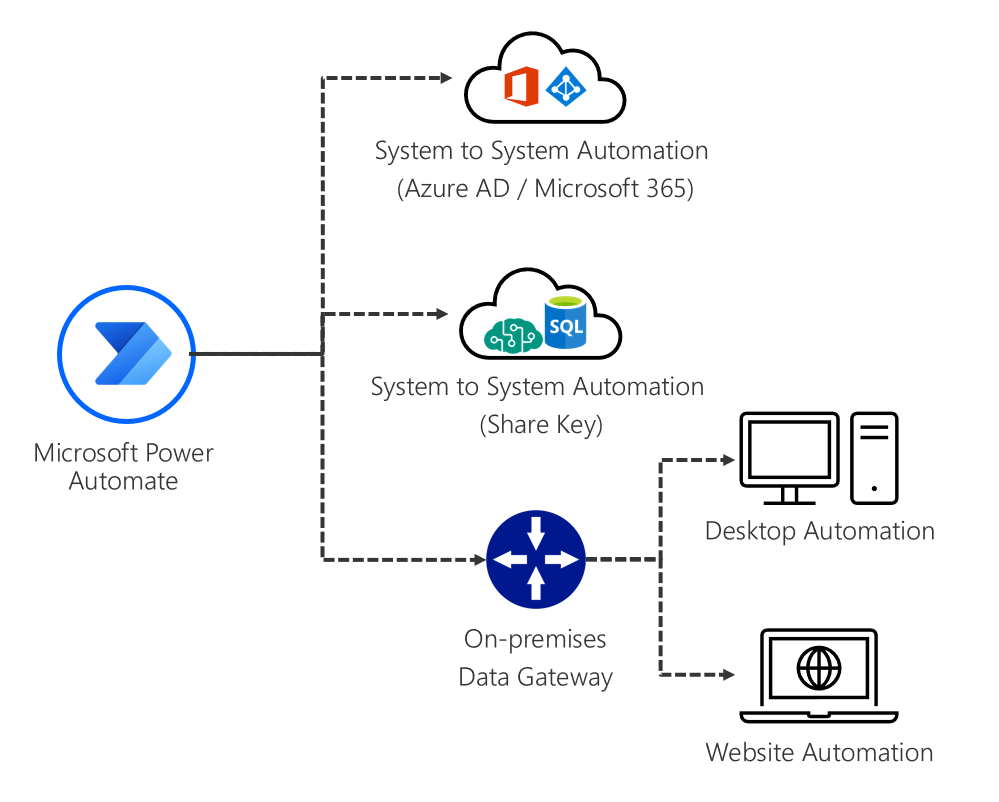

Implementing AI Algorithms for Data Insights

Transforming raw data into actionable insights hinges upon the effective implementation of AI algorithms tailored to specific analysis tasks. Selecting the appropriate algorithm, training it efficiently, validating its performance, and seamlessly integrating it into existing systems are critical steps in establishing a robust automated data analysis pipeline. This process not only enhances decision-making but also streamlines workflows, enabling organizations to derive maximum value from their data assets.

In this section, we explore popular algorithms suited to diverse data analysis objectives, outline best practices for training and validating these models within automated systems, and provide guidance on integrating them into operational environments. Understanding these components helps make AI-driven data analysis a reliable and scalable part of your data infrastructure.

Popular AI Algorithms for Data Analysis Tasks

Different data analysis tasks require algorithms optimized for classification, regression, clustering, or anomaly detection. Recognizing the strengths of each algorithm facilitates better model selection aligned with specific project goals.

- Decision Trees: Suitable for classification and regression tasks with interpretability as a key benefit. They handle both numerical and categorical data effectively.

- Random Forests: An ensemble of decision trees that improves accuracy and reduces overfitting, ideal for complex classification and regression problems such as credit scoring or customer segmentation.

- Support Vector Machines (SVM): Effective for high-dimensional spaces and small to medium-sized datasets, often used in text classification and image recognition.

- Neural Networks: Highly adaptable for complex pattern recognition tasks, including image processing, natural language understanding, and time-series forecasting.

- K-Means Clustering: A straightforward method for unsupervised clustering, useful for market segmentation and customer behavior analysis.

- Principal Component Analysis (PCA): A dimensionality reduction technique that simplifies data visualization and preprocessing before applying other algorithms.
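To show how two of these algorithms combine, the sketch below standardizes a synthetic feature matrix and reduces it to two principal components with PCA. It then segments the result with K-Means. The data from `make_blobs` stands in for customer features, and choosing three clusters is an assumption made because the synthetic data has three groups.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.decomposition import PCA
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic "customer" features with three underlying groups (illustrative assumption).
X, _ = make_blobs(n_samples=300, n_features=6, centers=3, random_state=0)

# Standardize, then project onto the first two principal components.
X_2d = make_pipeline(StandardScaler(), PCA(n_components=2)).fit_transform(X)

# Cluster the reduced data into three segments.
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)
labels = kmeans.fit_predict(X_2d)

print(X_2d.shape, sorted(set(labels)))
```

With real data the number of clusters is usually unknown. Comparing a few values of `n_clusters` with a metric such as the silhouette score is a common way to choose one.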

Training, Validating, and Optimizing Models in an Automated Pipeline

Implementing machine learning models within an automated system requires careful processes to ensure robustness, accuracy, and efficiency. Proper training, validation, and optimization are essential to prevent overfitting and underfitting and to adapt models to evolving data patterns.

- Data Partitioning: Divide datasets into training, validation, and testing subsets to evaluate model performance objectively. Automated tools can manage this process to ensure balanced splits.

- Model Training: Utilize scalable frameworks like TensorFlow, PyTorch, or scikit-learn to train models on large datasets. Automate hyperparameter tuning using grid search, random search, or Bayesian optimization methods.

- Model Validation: Apply cross-validation techniques to assess model stability across different data segments. Automate validation metrics collection, including accuracy, precision, recall, F1 score, or RMSE, depending on the task.

- Model Optimization: Fine-tune models by adjusting hyperparameters and employing feature selection methods. Use automated workflows to iteratively improve model performance without manual intervention.
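The sketch below brings training, cross-validated hyperparameter tuning, and a final held-out check together using scikit-learn's `GridSearchCV`. The synthetic dataset, the random forest model, and the parameter grid are illustrative assumptions. Random or Bayesian search would slot in the same way with a different search object.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import classification_report
from sklearn.model_selection import GridSearchCV, train_test_split

X, y = make_classification(n_samples=600, n_features=15, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1)

# Every combination in the grid is scored with 5-fold cross-validation on the training set.
param_grid = {"n_estimators": [50, 150], "max_depth": [None, 5, 10]}
search = GridSearchCV(
    RandomForestClassifier(random_state=1),
    param_grid,
    cv=5,
    scoring="f1",
)
search.fit(X_train, y_train)

print("best params:", search.best_params_)
print("cv F1:", round(search.best_score_, 3))

# Final check on held-out data that the search never saw.
print(classification_report(y_test, search.predict(X_test)))
```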

Integrating Machine Learning Models into Existing Systems

Seamless integration ensures that AI models enhance operational workflows without disrupting existing infrastructure. This involves deploying trained models in a manner that supports real-time or batch processing, monitored continuously for performance and accuracy.

- Model Deployment: Package models for production, for example as REST API services or embedded components, using tools like Docker, Kubernetes, or managed cloud services. Ensure compatibility with existing data pipelines.

- Automation Pipelines: Use workflow orchestration tools like Apache Airflow or Luigi to automate data ingestion, model inference, and result storage. This reduces manual efforts and increases reliability.

- Monitoring and Maintenance: Implement monitoring dashboards to track model accuracy, latency, and other key performance indicators. Automate alerts for drifts or performance degradation to trigger retraining cycles.

- Feedback Loops: Incorporate feedback from end-users or system outputs to continuously refine models. Automated retraining ensures models remain relevant as data distributions evolve over time.
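One simple way to automate the drift alerts mentioned above is to compare each incoming feature batch with the data the model was trained on. The sketch below uses a two-sample Kolmogorov-Smirnov test from SciPy. It assumes a numeric feature and a significance level of 0.01, both illustrative. In practice teams often combine several drift checks and require drift to persist across batches before retraining.

```python
import numpy as np
from scipy.stats import ks_2samp

def feature_drifted(reference, current, alpha=0.01):
    """Return True if a two-sample KS test rejects 'same distribution' at level alpha."""
    result = ks_2samp(reference, current)
    return bool(result.pvalue < alpha)

rng = np.random.default_rng(0)
training_values = rng.normal(loc=0.0, scale=1.0, size=2000)  # data the model was trained on
stable_batch = rng.normal(loc=0.0, scale=1.0, size=500)      # same distribution
shifted_batch = rng.normal(loc=0.8, scale=1.0, size=500)     # mean has moved

for name, batch in [("stable", stable_batch), ("shifted", shifted_batch)]:
    if feature_drifted(training_values, batch):
        print(f"{name}: drift detected, flag model for retraining")
    else:
        print(f"{name}: no significant drift")
```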

Structured Comparison of AI Algorithms

Understanding the relative strengths and optimal use cases of various algorithms supports informed decision-making during model selection.

| Algorithm | Strengths | Ideal Use Cases |
|---|---|---|
| Decision Trees | Easy to interpret, handles categorical data, fast training | Basic classification, rule-based systems, feature importance analysis |
| Random Forests | High accuracy, reduces overfitting, handles large datasets | Credit scoring, fraud detection, customer segmentation |
| SVM | Effective in high-dimensional spaces, robust with small datasets | Text classification, image recognition, bioinformatics |
| Neural Networks | Powerful for complex pattern recognition, adaptable to various data types | Natural language processing, computer vision, time series forecasting |
| K-Means | Simple, fast, easy to interpret | Market segmentation, customer behavior clustering, image compression |
| PCA | Reduces dimensionality, improves visualization, speeds up processing | Data visualization, noise reduction, preprocessing for other models |

Workflow Automation and Scheduling

Efficient automation of data analysis workflows is essential for maintaining timely, accurate, and scalable insights in modern data environments. Automating data ingestion, processing, and reporting tasks ensures continuous operation with minimal manual intervention, reducing errors and increasing productivity. Proper scheduling and workflow orchestration are vital components that enable organizations to implement robust, end-to-end automated data analysis systems.

By establishing well-structured workflows and employing intelligent scheduling methods, data teams can optimize resource utilization, ensure data freshness, and maintain high levels of operational reliability. Integrating automation tools with AI-driven analysis capabilities results in faster decision-making processes, empowering organizations to respond swiftly to emerging trends or anomalies detected within their data streams.

Methods for Automating Data Ingestion, Processing, and Reporting

Automation of data workflows involves deploying a combination of tools and techniques that facilitate seamless data flow from collection to reporting. These methods are critical for maintaining data integrity, timeliness, and consistency across various stages of analysis:

- Scheduled Data Extraction: Using cron jobs, task schedulers, or workflow orchestration platforms like Apache Airflow to trigger regular data pulls from sources such as databases, APIs, or flat files.

- ETL Pipelines: Designing automated Extract, Transform, Load (ETL) pipelines that clean, transform, and load data into analytical environments without manual intervention.

- Streaming Data Processing: Implementing real-time data ingestion and processing with tools like Apache Kafka or AWS Kinesis to handle high-velocity data streams efficiently.

- Automated Reporting: Utilizing BI tools and scripting to generate dashboards, summaries, and alerts automatically based on updated data, ensuring stakeholders receive timely insights.

These methods collectively facilitate a continuous and reliable data analysis pipeline that adapts to dynamic data environments and business needs.

Procedural List for Setting Up Scheduled Automation Tasks

Establishing scheduled automation requires a systematic approach to ensure all components operate harmoniously. The following steps outline a typical setup process:

- Identify Workflow Components: Map out data sources, processing steps, and desired outputs to understand the entire automation scope.

- Select Automation Tools: Choose suitable scheduling and orchestration tools such as Apache Airflow or Prefect, or cloud-native options such as Amazon EventBridge schedules that trigger AWS Lambda functions.

- Design Workflow DAGs or Pipelines: Create Directed Acyclic Graphs (DAGs) or pipeline scripts that define task dependencies, order, and data flow.

- Configure Scheduling Parameters: Set recurrence intervals (hourly, daily, weekly), time windows, and trigger conditions aligned with data availability and business requirements.

- Implement Error Handling and Notifications: Incorporate retry mechanisms, alerts, and logging to detect and respond to failures promptly.

- Test the Automation Setup: Run the workflows in a staging environment to validate timing, data accuracy, and error handling processes.

- Deploy and Monitor: Launch the scheduled tasks in production, continuously monitor performance, and adjust parameters as needed for optimal operation.
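Orchestrators such as Apache Airflow and Prefect provide retry settings for tasks. The plain-Python sketch below only illustrates the underlying idea behind the error-handling step: retry a failing task with exponential backoff, log each failure, and re-raise once retries are exhausted so the scheduler can mark the run as failed and alert someone. The function name `run_with_retries` and the flaky extraction task are hypothetical.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")

def run_with_retries(task, max_attempts=3, base_delay=1.0):
    """Call task(); on failure wait base_delay * 2**(attempt - 1) seconds and try again."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as exc:
            log.warning("attempt %d/%d failed: %s", attempt, max_attempts, exc)
            if attempt == max_attempts:
                # Out of retries: re-raise so the scheduler records a failure
                # and its notification mechanism can fire.
                raise
            time.sleep(base_delay * 2 ** (attempt - 1))

# Hypothetical extraction step that fails twice before succeeding.
calls = {"n": 0}

def flaky_extract():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return ["row1", "row2"]

rows = run_with_retries(flaky_extract, base_delay=0.01)
print(rows)
```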

Example of an End-to-End Automation Workflow Diagram

An effective workflow diagram illustrates the entire data automation pipeline from raw data collection to final reporting. In a typical scenario, the diagram includes:

“Data sources such as databases and APIs feed into an automated ingestion process, which triggers scheduled ETL pipelines. The transformed data is stored in a data warehouse, where AI models perform analysis and generate insights. Finally, automated reports and dashboards are produced and delivered via email or BI tools to stakeholders. Monitoring and logging components oversee the process, ensuring smooth operation and error detection.”

This visualization helps teams understand each component’s role, dependencies, and timing, facilitating better management and troubleshooting of the automation system.

Best Practices for Monitoring Automated Workflows

Maintaining the integrity and performance of automated data workflows requires rigorous monitoring practices. The following best practices are recommended:

- Implement Comprehensive Logging: Capture detailed logs at each step to facilitate troubleshooting and audit trails.

- Set Alerts and Notifications: Configure real-time alerts for failures, delays, or anomalies detected in data quality or process execution, enabling swift corrective actions.

- Regular Performance Reviews: Periodically evaluate workflow execution times, error rates, and resource utilization to identify bottlenecks or inefficiencies.

- Establish Redundancy and Failover Mechanisms: Design workflows with fallback options to ensure continued operation during component failures.

- Use Visualization Dashboards: Leverage dashboards to provide at-a-glance status updates, trends, and exception reports for ongoing oversight.

- Maintain Documentation and Version Control: Keep detailed documentation of automation configurations and maintain version control to manage updates and rollbacks effectively.

Adhering to these practices ensures high system availability, data consistency, and the ability to promptly address issues, thereby safeguarding the reliability of automated data analysis processes.
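As a small example of step-level logging and alerting, the standard-library sketch below wraps a pipeline step. It logs the step's outcome and duration and emits a warning when the step exceeds a time budget. The `monitored` decorator and the five-second budget are hypothetical. A real deployment would route the warning to an alerting channel rather than only writing it to the log.

```python
import functools
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("pipeline.monitor")

def monitored(step_name, max_seconds):
    """Log the outcome and duration of a pipeline step; warn if it exceeds max_seconds."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                result = func(*args, **kwargs)
            except Exception:
                # log.exception records the traceback for later troubleshooting.
                log.exception("step=%s status=failed", step_name)
                raise
            elapsed = time.perf_counter() - start
            log.info("step=%s status=ok seconds=%.3f", step_name, elapsed)
            if elapsed > max_seconds:
                # Hook point for an alert (e.g. email or chat webhook); here it only logs.
                log.warning("step=%s exceeded %.1fs budget", step_name, max_seconds)
            return result
        return wrapper
    return decorator

@monitored("transform", max_seconds=5.0)
def transform(rows):
    return [r.upper() for r in rows]

print(transform(["a", "b"]))
```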

Visualization and Reporting with Automated Data Analysis

Effective visualization and reporting are vital components of automated data analysis systems powered by AI. They transform complex insights into accessible formats, enabling stakeholders to make informed decisions swiftly. Automated visualization tools facilitate real-time monitoring and dynamic reporting, which are essential in today’s fast-paced business environments.

This section explores techniques for generating real-time dashboards, designing interactive visualizations, customizing reports to meet diverse stakeholder needs, and presenting key analysis results through clear, structured layouts.

Real-Time Dashboards and Visual Reports from AI-Driven Insights

Real-time dashboards aggregate AI-generated data insights into intuitive visual formats, allowing users to monitor KPIs and operational metrics continuously. These dashboards update automatically as new data flows in, providing a live snapshot of business performance and enabling prompt responses to emerging trends.

Techniques for creating such dashboards include connecting AI platforms to BI tools such as Tableau or Power BI, or building custom HTML/JavaScript interfaces. APIs and WebSockets can stream new data to the dashboard as it arrives, while AI models flag anomalies and highlight key insights automatically, making visual reports more relevant and timely.
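Anomaly flags for a dashboard do not always require a trained model. The sketch below uses a simple statistical rule: a rolling z-score that marks points more than three standard deviations from the mean of the previous seven values. It can feed the same kind of highlighting. The window, threshold, and daily order counts are made-up illustrations.

```python
import pandas as pd

def flag_anomalies(series: pd.Series, window: int = 7, threshold: float = 3.0) -> pd.Series:
    """Mark points more than `threshold` rolling standard deviations from the rolling mean.

    The baseline uses only the previous `window` points (via shift(1)), so a spike
    does not inflate the statistics it is compared against.
    """
    baseline = series.shift(1).rolling(window)
    z = (series - baseline.mean()) / baseline.std()
    return z.abs() > threshold  # NaN z-scores (not enough history) compare as False

daily_orders = pd.Series([120, 118, 125, 122, 119, 121, 124, 123, 240, 122])
flags = flag_anomalies(daily_orders)
print(daily_orders[flags])  # only the 240 spike at index 8
```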

Designing Interactive Visualizations in HTML and Other Formats

Interactive visualizations empower users to explore data dynamically, drill down into details, and customize views according to their specific interests. Designing such visualizations involves utilizing HTML5, JavaScript, and libraries like D3.js, Chart.js, or Plotly to create engaging charts, maps, and graphs.

Effective interactive visualization design considers user experience, employing features like tooltips, filtering options, zooming, and toggling data layers. For instance, an AI-powered sales dashboard might allow sales managers to filter data by region, product category, or time frame, enabling tailored insights that support strategic decision-making.

Customizing Reports to Suit Stakeholder Needs

Automated reporting systems should be adaptable to various stakeholder requirements, providing tailored content, formats, and levels of detail. Customization can be achieved through configurable templates, user-specific dashboards, or report generation modules that select relevant KPIs and insights based on user roles.

For example, executive summaries might focus on high-level metrics and strategic insights, while operational teams receive detailed breakdowns and technical data. Incorporating options for exporting reports in formats such as PDF, Excel, or HTML ensures accessibility and ease of sharing across teams.

Sample HTML Table Layout for Presenting Key Analysis Results

| Analysis Metric | Value | Change | Remarks |
|---|---|---|---|
| Total Sales | $2,450,000 | +8.5% | Compared to previous quarter |
| Customer Retention Rate | 92% | +1.2% | Quarter-over-quarter improvement |
| New Product Adoption | 1,200 units | +15% | Since launch in January |
| Operational Efficiency Score | 87/100 | +3 points | Based on AI model assessments |

This layout presents key insights at a glance. Color coding or highlighting can be added to emphasize significant changes or notable metrics, helping decision-makers absorb the results quickly.
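A report like this can be generated automatically rather than written by hand. The pandas sketch below builds the sample metrics into a DataFrame and renders role-specific HTML tables with `DataFrame.to_html`. The values are copied from the sample table. The role names, the column choices per role, and the `kpi-table` CSS class are hypothetical. In production, the values would come from the analysis step.

```python
import pandas as pd

# Values from the sample table; an automated pipeline would fill these from model output.
results = pd.DataFrame({
    "Analysis Metric": ["Total Sales", "Customer Retention Rate",
                        "New Product Adoption", "Operational Efficiency Score"],
    "Value": ["$2,450,000", "92%", "1,200 units", "87/100"],
    "Change": ["+8.5%", "+1.2%", "+15%", "+3 points"],
    "Remarks": ["Compared to previous quarter", "Quarter-over-quarter improvement",
                "Since launch in January", "Based on AI model assessments"],
})

# Hypothetical role-based views: executives see a compact summary, operations see everything.
ROLE_COLUMNS = {
    "executive": ["Analysis Metric", "Value", "Change"],
    "operations": list(results.columns),
}

def render_report(role: str) -> str:
    """Return an HTML table containing only the columns configured for the given role."""
    return results[ROLE_COLUMNS[role]].to_html(index=False, classes="kpi-table", border=0)

html = render_report("executive")
print(html)
```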

Challenges and Solutions in Automating Data Analysis

Automating data analysis with AI offers significant efficiencies and insights, yet it also presents a range of challenges that organizations must navigate. Understanding these obstacles and implementing effective strategies is essential for successful automation. This section explores common issues encountered during the deployment of AI-driven data analysis systems and offers practical solutions that help ensure reliable, secure, and accurate outcomes. Because automation ties together technology, processes, and data governance, a weakness in any one of these areas can undermine the whole system.

Key obstacles include data quality issues, security vulnerabilities, validation of AI-generated insights, and operational troubleshooting. Addressing these challenges proactively helps organizations maximize the benefits of AI while minimizing risks.

Common Obstacles in Automating Data Analysis

The deployment of automated data analysis systems often encounters technical, organizational, and ethical hurdles. These include inconsistent data quality, limited interpretability of AI models, resistance to change within teams, and the risk of biased or incorrect insights. In addition, integration with existing IT infrastructure can be complex, especially when legacy systems are involved.

Strategies for Ensuring Data Privacy and Security

Safeguarding sensitive data during automation is paramount. Organizations should adopt robust encryption protocols both at rest and in transit, enforce strict access controls, and regularly audit data handling processes. Implementing privacy-preserving techniques such as differential privacy and federated learning can also mitigate risks, especially when dealing with personally identifiable information or confidential data. Establishing clear compliance frameworks aligned with relevant regulations (e.g., GDPR, HIPAA) further strengthens data security.
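To make differential privacy more concrete, the sketch below implements its simplest building block, the Laplace mechanism, for a counting query. Adding or removing one person changes a count by at most 1 (sensitivity 1), so noise drawn from Laplace(0, 1/ε) gives ε-differential privacy for that single query. The `dp_count` helper, the age data, and ε = 0.5 are illustrative assumptions. Production systems should rely on a vetted differential-privacy library and track the privacy budget across queries.

```python
import numpy as np

def dp_count(values, predicate, epsilon, rng=None):
    """Differentially private count using the Laplace mechanism.

    A counting query has sensitivity 1, so Laplace noise with scale 1/epsilon
    is added to the true count. Smaller epsilon means more noise and more privacy.
    """
    if rng is None:
        rng = np.random.default_rng()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + rng.laplace(loc=0.0, scale=1.0 / epsilon)

ages = [34, 45, 29, 61, 52, 38, 70, 44]  # hypothetical records; true count of age >= 50 is 3
noisy = dp_count(ages, lambda a: a >= 50, epsilon=0.5, rng=np.random.default_rng(7))
print(f"noisy count of people aged 50+: {noisy:.2f}")
```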

Methods for Validating AI-Generated Insights

Validating insights generated by AI systems is critical to prevent errors and ensure decision-making accuracy. This involves cross-verifying AI outputs with traditional analytical methods, conducting sensitivity analyses, and using control datasets to benchmark model performance. Incorporating domain expertise in review processes helps interpret AI findings correctly. Additionally, deploying continuous monitoring tools can detect anomalies or deviations in real time, prompting manual review when necessary.
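The cross-verification and benchmarking steps can be sketched as a comparison between a model's predictions and a naive baseline on held-out data. In this hypothetical example, the baseline always predicts the mean of the training targets, error is measured as mean absolute error (MAE), and the 10% improvement threshold is an arbitrary assumption; results that fail the check are flagged for manual review rather than discarded.

```python
from statistics import mean


def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    return mean(abs(a - p) for a, p in zip(actual, predicted))


def validate_against_baseline(train_targets, holdout_targets, model_predictions,
                              min_improvement=0.10):
    """Benchmark a model against a naive baseline on held-out data.

    The baseline always predicts the mean of the training targets. Returns
    (model_mae, baseline_mae, needs_review), where needs_review is True when
    the model fails to beat the baseline error by at least min_improvement.
    """
    baseline_value = mean(train_targets)
    baseline_predictions = [baseline_value] * len(holdout_targets)
    model_mae = mean_absolute_error(holdout_targets, model_predictions)
    baseline_mae = mean_absolute_error(holdout_targets, baseline_predictions)
    needs_review = model_mae > baseline_mae * (1 - min_improvement)
    return model_mae, baseline_mae, needs_review
```

For instance, with training targets `[10, 12, 11, 13]`, holdout targets `[12, 14, 10]`, and model predictions `[12.5, 13.5, 10.5]`, the model's MAE is 0.5 against a baseline MAE of 1.5, so no review is flagged.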

Troubleshooting Tips for Common Automation Issues

Successful automation depends on timely troubleshooting of technical and process-related issues. Here are essential steps to address typical problems:

- Identify the problem scope: Determine whether the issue affects data ingestion, processing, model performance, or reporting.

- Check data integrity: Verify data quality, completeness, and consistency before analysis begins.

- Review system logs: Analyze error logs and system alerts to pinpoint failures or bottlenecks.

- Test system components: Isolate and test individual modules to ensure proper functioning.

- Update and retrain models: Regularly retrain AI algorithms with fresh data to maintain accuracy and relevance.

- Consult documentation and vendor support: Use available resources for troubleshooting guides and technical assistance.

- Implement fallback procedures: Have manual processes or alternative workflows ready to ensure continuity during system downtime.
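Two of these steps, checking data integrity and implementing fallback procedures, can be sketched in a few lines of Python. The example assumes records arrive as a list of dictionaries; `check_integrity` and `run_with_fallback` are hypothetical helpers, and the checks shown (missing values, duplicate keys, mixed types per field) are only a starting point. Failures are written to a log, which also supports the log-review step.

```python
import logging
from collections import Counter

logger = logging.getLogger("analysis_pipeline")


def check_integrity(records, required_fields, key_field):
    """Report missing values, duplicate keys, and mixed types in dict records."""
    missing = [
        (i, name)
        for i, record in enumerate(records)
        for name in required_fields
        if record.get(name) in (None, "")
    ]
    key_counts = Counter(record.get(key_field) for record in records)
    duplicate_keys = [k for k, n in key_counts.items() if k is not None and n > 1]
    # A field is inconsistent if its non-empty values have more than one type.
    inconsistent = []
    for name in required_fields:
        types = {
            type(record[name]).__name__
            for record in records
            if record.get(name) not in (None, "")
        }
        if len(types) > 1:
            inconsistent.append(name)
    return {
        "missing": missing,
        "duplicate_keys": duplicate_keys,
        "inconsistent_types": inconsistent,
    }


def run_with_fallback(step, fallback, *args):
    """Run an automated step; if it raises, log the error and run the fallback."""
    try:
        return step(*args)
    except Exception:
        step_name = getattr(step, "__name__", repr(step))
        logger.exception("Automated step %s failed; switching to fallback", step_name)
        return fallback(*args)


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    sample = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": None}, {"id": 2, "amount": "5"}]
    print(check_integrity(sample, ["id", "amount"], key_field="id"))
```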

Addressing these challenges systematically enhances the robustness and reliability of automated data analysis systems, enabling organizations to derive valuable insights with confidence.

Future Trends in AI-Driven Data Analysis Automation

As the landscape of data analysis continues to evolve rapidly, emerging AI technologies are playing a pivotal role in shaping the future of automated data workflows. These advancements promise to enhance efficiency, accuracy, and the depth of insights achievable through automated systems. Understanding these trends enables organizations to stay ahead of the curve and leverage innovative tools for strategic decision-making.

In this segment, we explore the cutting-edge AI innovations that are set to redefine data analysis automation, analyze their potential impacts on existing workflows, and provide a forecast of emerging practices and technologies that will further accelerate this transformation.

Emerging AI Technologies Enhancing Automation Capabilities

Recent breakthroughs in AI, including advancements in machine learning, deep learning, natural language processing (NLP), and reinforcement learning, are significantly expanding the scope and sophistication of automated data analysis. These technologies enable systems to interpret unstructured data, generate deeper insights, and adapt dynamically to evolving datasets.

Generative AI models such as GPT-4 and its successors are increasingly capable of synthesizing complex patterns and proposing predictive insights, making it possible to automate not just analysis but also parts of hypothesis generation and scenario testing.

Additionally, developments in explainable AI (XAI) are addressing transparency concerns, allowing users to understand how automated insights are derived. This fosters trust and facilitates broader adoption within enterprise environments.
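Permutation importance is one widely used model-agnostic explainability technique; the text above does not prescribe a specific XAI method, so this is only one possible illustration. The sketch below shuffles one feature at a time and measures how much the model's error grows. `predict` stands in for any trained model, and all names are illustrative.

```python
import random
from statistics import mean


def permutation_importance(predict, rows, targets, n_features, n_repeats=5, seed=0):
    """Estimate how much a model relies on each feature.

    For each feature column, shuffle its values across rows, re-score the
    model, and record the increase in mean absolute error. The average
    increase over n_repeats shuffles is that feature's importance.
    """
    rng = random.Random(seed)

    def mae(data):
        return mean(abs(predict(row) - target) for row, target in zip(data, targets))

    base_error = mae(rows)
    importances = []
    for j in range(n_features):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            permuted = [tuple(row[:j]) + (value,) + tuple(row[j + 1:])
                        for row, value in zip(rows, column)]
            increases.append(mae(permuted) - base_error)
        importances.append(mean(increases))
    return importances


if __name__ == "__main__":
    # Toy "model" that depends only on the first feature.
    def model(row):
        return 3 * row[0]

    data = [(i, i % 3) for i in range(20)]
    labels = [3 * i for i in range(20)]
    print(permutation_importance(model, data, labels, n_features=2))
```

A feature whose shuffling barely changes the error contributes little to the model's predictions, which gives analysts a concrete way to question an automated insight.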

Impact of Advancements on Data Analysis Workflows

As AI technologies become more advanced, data analysis workflows will experience increased automation at every stage—from data collection and preprocessing to insight generation and reporting. This shift reduces manual effort, accelerates decision-making processes, and minimizes human error.

Moreover, real-time data processing enabled by AI accelerates the ability to respond to dynamic market conditions, detect anomalies promptly, and refine models continuously through active learning. These improvements foster more agile and resilient data ecosystems, supporting strategic initiatives with timely and accurate insights.
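Prompt anomaly detection on streaming data can be illustrated with a rolling z-score, a simple statistical stand-in for the more sophisticated AI models the paragraph describes. The class name, window size, threshold of 3 standard deviations, and minimum history length are all assumptions chosen for this sketch.

```python
from collections import deque
from statistics import mean, pstdev


class RollingZScoreDetector:
    """Flag values that deviate strongly from a sliding window of recent values."""

    def __init__(self, window_size=30, threshold=3.0, min_history=10):
        self.history = deque(maxlen=window_size)
        self.threshold = threshold
        self.min_history = min_history

    def update(self, value):
        """Return True if value is anomalous relative to the window, then store it."""
        is_anomaly = False
        if len(self.history) >= self.min_history:
            recent = list(self.history)
            mu = mean(recent)
            sigma = pstdev(recent)
            # Skip the test when the window has no spread (sigma == 0).
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        self.history.append(value)
        return is_anomaly


if __name__ == "__main__":
    detector = RollingZScoreDetector(window_size=20, min_history=5)
    stream = [10, 11, 10, 9, 10, 11, 10, 9, 50]  # illustrative readings
    print([detector.update(x) for x in stream])
```

In this sketch anomalous values are still appended to the window, so a sustained shift gradually becomes the new baseline; excluding flagged values instead would keep the baseline stable but risks ignoring genuine changes in the data.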

Potential Innovations and Best Practices

Emerging innovations in AI-driven data analysis are poised to introduce new capabilities and methodologies. Organizations adopting these trends can benefit from increased competitiveness and operational efficiency.

- Integration of Edge AI: Deploying AI models on edge devices to facilitate real-time analysis directly at data sources, reducing latency and bandwidth dependency.

- Hybrid Human-AI Collaboration: Combining human expertise with AI automation to enhance accuracy, interpretability, and contextual relevance of insights.

- Automated Data Governance: Utilizing AI to ensure data quality, compliance, and security through continuous monitoring and automated policy enforcement.

- Self-Optimizing Systems: Developing AI models that can self-tune and adapt based on evolving data patterns without extensive manual reprogramming.

- Advanced Synthetic Data Generation: Creating high-fidelity synthetic datasets to augment training processes and address data scarcity or privacy concerns.
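Automated data governance can be illustrated with a rule-based policy check. The sketch assumes two hypothetical policies, a field allow-list and e-mail redaction, applied to dictionary records; real governance tooling would load policies from configuration and cover many more rules, and the regular expression here is deliberately simplistic.

```python
import re
from dataclasses import dataclass, field

# Simplistic e-mail pattern for illustration; it will not catch every format.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass
class PolicyReport:
    redactions: int = 0
    violations: list = field(default_factory=list)


def enforce_policies(records, allowed_fields):
    """Apply two illustrative governance rules to a list of dict records.

    1. Drop any field not on the allow-list and record it as a violation.
    2. Redact e-mail addresses found in string values.
    Returns (clean_records, report).
    """
    report = PolicyReport()
    clean = []
    for i, record in enumerate(records):
        out = {}
        for key, value in record.items():
            if key not in allowed_fields:
                report.violations.append((i, key))
                continue
            if isinstance(value, str):
                value, count = EMAIL_PATTERN.subn("[REDACTED]", value)
                report.redactions += count
            out[key] = value
        clean.append(out)
    return clean, report
```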

Comparative Table of Traditional vs. AI-Automated Data Analysis Approaches

| Aspect | Traditional Data Analysis | AI-Automated Data Analysis |
|---|---|---|
| Data Processing | Manual data cleaning and preprocessing, often time-consuming and error-prone. | Automated preprocessing using AI algorithms that adapt to data variations and improve over time. |
| Insight Generation | Manual interpretation based on predefined models or heuristics, limited scalability. | AI-driven pattern recognition and predictive modeling enable scalable and deeper insights. |
| Speed | Slower, dependent on human resources and manual steps. | Rapid, often real-time, analysis powered by AI algorithms processing large datasets in parallel. |
| Accuracy | Variable, influenced by human expertise and potential biases. | Potentially high when models are validated and monitored; continuous learning and refinement can reduce errors, but models can also reproduce biases present in their training data. |
| Adaptability | Limited flexibility; requires manual updates for new data types or patterns. | High adaptability through autonomous model retraining and dynamic workflow adjustments. |
| Transparency | High, as analyses are based on explicit, human-understood methods. | Varies; efforts in explainable AI are improving transparency but are still developing. |

Conclusion

In summary, automating data analysis with AI offers significant advantages in efficiency, precision, and scalability. Staying updated with emerging trends and best practices ensures that organizations can maximize the potential of AI-powered insights, paving the way for innovative and data-driven success.