Discovering similarities between research papers is a vital aspect of academic integrity and innovative scholarship. Leveraging artificial intelligence offers a cutting-edge approach to efficiently and accurately identify overlapping ideas and content across vast datasets. As manual comparisons become increasingly impractical due to the exponential growth of research publications, AI-driven techniques emerge as essential tools to streamline this process and unveil meaningful connections.

This overview explores the role of artificial intelligence in detecting textual and conceptual overlaps, highlighting key methods such as natural language processing and machine learning. It also delves into the practical steps for implementing these technologies, evaluating their effectiveness, and addressing ethical considerations to ensure fair and unbiased research assessments.

Introduction to AI in detecting similarities in research papers

Artificial Intelligence (AI) has revolutionized the way we analyze and interpret vast amounts of textual data, particularly in the academic and research domains. Its capacity to identify overlaps, similarities, and conceptual connections across numerous research documents has become an invaluable tool for researchers, publishers, and academic institutions. By leveraging sophisticated algorithms, AI facilitates the efficient detection of duplicated content, plagiarized sections, and closely related studies, thus enhancing the integrity and originality of scholarly work.

Manual detection of similarities in research papers is often labor-intensive, time-consuming, and susceptible to human error. The sheer volume of published research makes it impractical to rely solely on human judgment, especially when it comes to identifying nuanced conceptual overlaps or paraphrased content. AI offers comprehensive solutions to these challenges through advanced computational techniques that analyze both linguistic features and underlying ideas present within documents.

This technological approach not only accelerates the review process but also improves accuracy in recognizing subtle similarities that may evade traditional manual methods.

Common AI Techniques for Similarity Detection

To effectively identify textual and conceptual overlaps in research papers, several AI techniques are employed, each contributing unique strengths to the process. Understanding these methods helps in appreciating how AI systems perform complex similarity assessments with high precision.

| Technique | Description | Application in Similarity Detection |
|---|---|---|
| Natural Language Processing (NLP) | Enables computers to understand, interpret, and generate human language by analyzing syntax, semantics, and context. | NLP algorithms extract key phrases, identify paraphrasing, and evaluate semantic content to find overlaps. |
| Vector Space Models | Represent textual data as mathematical vectors, capturing the contextual meaning of words and phrases. | Compare document vectors to quantify similarity scores, assisting in identifying related research works. |
| Machine Learning Algorithms | Use training data to develop models that can classify or predict similarity based on learned patterns. | Supervised models can be trained on labeled examples of similar and dissimilar papers, improving detection accuracy over time. |
| Deep Learning Techniques | Employ neural networks such as transformers that understand complex language structures and contextual nuances. | Models like BERT or RoBERTa analyze entire documents for deep semantic similarity, even when phrased differently. |

Effective similarity detection combines linguistic understanding with pattern recognition, enabling AI systems to surpass traditional matching approaches and uncover nuanced overlaps across research literature.

Key AI Techniques for Similarity Detection

Accurately detecting similarities in research papers is crucial for avoiding duplication, ensuring originality, and facilitating efficient literature reviews. AI techniques leverage advanced computational methods to analyze textual content deeply, capturing nuanced semantic relationships beyond mere surface-level comparisons. These methods encompass a variety of approaches that transform textual data into mathematical representations, enabling precise similarity assessments.

Understanding the core AI techniques—such as semantic analysis, vector space models, and neural embeddings—is essential for selecting appropriate tools for research paper comparison. Additionally, evaluating the effectiveness of algorithms like cosine similarity, Jaccard index, and transformer-based models helps in optimizing the detection process. Each method offers specific strengths and faces particular limitations, making it important to understand their operational mechanisms and suitable application scenarios.

Semantic Analysis, Vector Space Models, and Neural Embeddings

Semantic analysis involves extracting meaning from textual data by understanding the context, concepts, and relationships within research papers. Techniques such as natural language processing (NLP) enable AI systems to interpret the intent and thematic content, which are crucial for meaningful similarity detection. Vector space models (VSM) convert text into vectors within a multidimensional space, where proximity indicates semantic similarity. These models include methods like term frequency-inverse document frequency (TF-IDF) and Latent Semantic Analysis (LSA).

Neural embeddings, such as word2vec, GloVe, and more advanced transformer-based embeddings like BERT, encode words, sentences, or entire documents into dense vectors capturing contextual meaning. These embeddings preserve semantic relationships more effectively than traditional models, allowing for nuanced comparisons even when terminology differs. For instance, embeddings can recognize that “AI” and “artificial intelligence” refer to similar concepts despite lexical differences.
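
To make the vector space idea concrete, here is a minimal sketch of TF-IDF weighting using only the Python standard library. The whitespace tokenizer, the unsmoothed `log(N / df)` weighting, and the three toy abstracts are assumptions chosen for illustration; libraries typically apply smoothing and normalization on top of this.

```python
import math
from collections import Counter

def tfidf_vectors(documents):
    """Return one sparse TF-IDF vector (dict: term -> weight) per document."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: the number of documents that contain each term.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        vectors.append({
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors

# Toy abstracts (illustrative only).
abstracts = [
    "neural networks for image classification",
    "deep neural networks for image recognition",
    "soil erosion in coastal wetlands",
]
vectors = tfidf_vectors(abstracts)
```

Terms shared by several documents, such as "neural", receive lower weights than terms unique to one document, such as "soil". This is why TF-IDF emphasizes the distinctive vocabulary of each paper.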

| Method | Description | Advantages | Limitations |
|---|---|---|---|
| Cosine Similarity | Measures the cosine of the angle between two vectors, indicating their directional similarity within a vector space. | Efficient and easy to compute; effective with high-dimensional embeddings; widely used in text similarity tasks. | Insensitive to vector magnitude; can be less effective with sparse vectors or very short texts. |
| Jaccard Index | Calculates the similarity as the size of the intersection divided by the size of the union of two sets, often applied to token sets. | Simple to implement; effective for comparing sets of keywords or features. | Does not consider word order or semantic relationships; less effective for dense textual data. |
| Transformer-based Models (e.g., BERT) | Utilize deep neural networks to generate contextualized embeddings that capture complex language patterns and semantics. | Highly accurate; considers context and polysemy; excels at capturing nuanced semantic similarities. | Computationally intensive; fine-tuning requires labeled training data and substantial compute resources. |
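
The first two measures in the table can be sketched in a few lines of standard-library Python. The function names, the dictionary-based sparse vectors, and the two toy titles are assumptions made for this example. Raw token counts stand in for the TF-IDF or embedding vectors a real system would compare.

```python
import math
from collections import Counter

def cosine_similarity(vec_a, vec_b):
    """Cosine of the angle between two sparse vectors stored as dicts."""
    dot = sum(weight * vec_b.get(term, 0.0) for term, weight in vec_a.items())
    norm_a = math.sqrt(sum(w * w for w in vec_a.values()))
    norm_b = math.sqrt(sum(w * w for w in vec_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention used here for empty vectors
    return dot / (norm_a * norm_b)

def jaccard_index(tokens_a, tokens_b):
    """Size of the intersection divided by the size of the union of two token sets."""
    set_a, set_b = set(tokens_a), set(tokens_b)
    if not set_a and not set_b:
        return 0.0  # convention used here for two empty sets
    return len(set_a & set_b) / len(set_a | set_b)

# Two toy titles that use nearly the same words in a different order.
text_a = "graph neural networks for molecule property prediction".split()
text_b = "molecule property prediction with graph neural networks".split()

jaccard = jaccard_index(text_a, text_b)                      # 6 shared / 8 total terms
cosine = cosine_similarity(Counter(text_a), Counter(text_b))  # 6 / 7
```

Both scores are high even though the word order differs, which illustrates the limitation noted in the table: neither measure accounts for word order, and neither would recognize a synonym as a match.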

Data Preprocessing for Effective Similarity Detection

Enhancing the accuracy of similarity detection in research papers relies heavily on meticulous data preprocessing. This step ensures that the raw textual data is transformed into a format that AI algorithms can efficiently analyze, reducing noise and highlighting meaningful patterns. Proper preprocessing is fundamental to achieving reliable and consistent results, particularly when dealing with diverse document formats and writing styles inherent in research literature.

Effective preprocessing involves cleaning the textual data, standardizing formats, and extracting relevant sections that carry the most significant information for similarity assessment. This process not only improves computational efficiency but also enhances the quality of insights derived from AI techniques, enabling more precise identification of overlaps, similarities, and potential instances of plagiarism or related work.

Procedures for Cleaning and Preparing Research Papers

Preparing research papers for similarity analysis requires a systematic approach to clean and normalize the data. The following procedures are essential:

- Tokenization: Breaking down the text into smaller units such as words or phrases, which facilitates detailed analysis of language patterns and helps in identifying commonalities between documents.

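- Stemming and Lemmatization: Reducing words to a common base form to unify different morphological variants, thereby improving matching accuracy across varied expressions. Stemming strips suffixes (e.g., ‘running’ to ‘run’), while lemmatization uses vocabulary and grammar to map irregular forms as well (e.g., ‘ran’ to ‘run’).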

- Normalization: Converting all text to a consistent format by converting to lowercase, removing punctuation, special characters, and stopwords, which are common words with little semantic value, such as ‘the’, ‘is’, or ‘and’.

- Removing Noise: Eliminating irrelevant content like references, author names, and formatting artifacts that do not contribute to the core scientific content.
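
The procedures above can be sketched as a small standard-library pipeline. The stopword list, the suffix list, and the bracketed-citation pattern are deliberately tiny assumptions for illustration. A production system would more likely rely on an established NLP library for stemming or lemmatization.

```python
import re

# Small illustrative stopword list; real lists are much longer.
STOPWORDS = {"the", "is", "are", "and", "a", "an", "of", "in", "to", "for", "on", "with"}
SUFFIXES = ("ing", "ed", "es", "s")

def crude_stem(word):
    """Strip one common suffix; a stand-in for a real stemmer or lemmatizer."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def preprocess(text):
    """Remove citation markers, normalize, tokenize, drop stopwords, and stem."""
    text = re.sub(r"\[\d+(?:\s*,\s*\d+)*\]", " ", text)  # noise such as "[3, 7]"
    tokens = re.findall(r"[a-z0-9]+", text.lower())       # lowercase, no punctuation
    return [crude_stem(token) for token in tokens if token not in STOPWORDS]

tokens = preprocess(
    "The models are trained on labeled pairs [3, 7], and the training is repeated."
)
# tokens == ['model', 'train', 'label', 'pair', 'train', 'repeat']
```

Note that "trained" and "training" collapse to the same token, which is the effect stemming is meant to achieve before documents are compared.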

Extracting Relevant Sections for Analysis

Research papers contain various sections, each with different levels of relevance for similarity detection. Focusing on specific parts such as abstracts, introductions, and conclusions often yields the most meaningful comparison points, as these sections summarize the core contributions and findings of the work.

Extraction involves identifying and isolating these sections while discarding less pertinent parts like references, appendices, and footnotes. This targeted approach enhances the efficiency and effectiveness of AI-based similarity detection by concentrating on the most informative segments of the documents.

Below are the steps to prepare datasets for AI processing:

- Identify the structural components of research papers, such as headers and section titles, to locate abstracts, introductions, and conclusions.

- Use natural language processing (NLP) tools or custom scripts to extract these sections automatically, ensuring consistency across large datasets.

- Convert the extracted sections into plain text, removing formatting artifacts that may interfere with analysis.

- Organize the cleaned sections into a structured dataset, labeling each segment with metadata such as paper ID, section type, and publication year.

- Apply tokenization, stemming, and normalization techniques to each section to prepare the data for AI algorithms.
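
As a rough illustration of the extraction steps, the sketch below locates sections in a plain-text paper by matching heading lines and returns labeled records. The heading names, the optional numeric prefix, the record fields, and the sample paper are all assumptions. Real papers vary widely in structure, and PDF or XML sources need a dedicated parser first.

```python
import re

# Assumed heading names, optionally preceded by a section number such as "2." or "3".
HEADING = re.compile(
    r"^(?:\d+\.?[ \t]+)?"
    r"(abstract|introduction|methods|results|discussion|conclusions?|references)"
    r"[ \t]*$",
    re.IGNORECASE | re.MULTILINE,
)
KEEP = {"abstract", "introduction", "conclusion"}

def extract_sections(paper_id, year, text):
    """Return one labeled record per kept section found in a plain-text paper."""
    matches = list(HEADING.finditer(text))
    records = []
    for i, match in enumerate(matches):
        name = match.group(1).lower()
        if name == "conclusions":
            name = "conclusion"
        # A section runs from the end of its heading to the start of the next heading.
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        body = " ".join(text[match.end():end].split())  # collapse whitespace
        if name in KEEP and body:
            records.append(
                {"paper_id": paper_id, "year": year, "section": name, "text": body}
            )
    return records

sample = """Abstract
We compare two embedding models.

1. Introduction
Similarity detection matters.

2. Methods
We use cosine similarity.

3. Conclusion
Embeddings help.

References
[1] Placeholder entry.
"""
records = extract_sections("paper-001", 2024, sample)
```

Each record can then be passed through the cleaning steps described earlier. The methods section and the reference list are recognized as boundaries but are not kept.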

Implementing AI Models to Identify Similarities

Transforming theoretical approaches into practical applications necessitates a systematic implementation of AI models tailored for research paper similarity detection. This process involves designing effective workflows that facilitate the training, validation, and deployment of machine learning algorithms, enabling accurate and efficient comparison of research documents. Leveraging pre-trained models like BERT and SciBERT enhances the semantic understanding capabilities, capturing nuanced similarities that traditional methods might overlook.

Implementing such models requires a blend of data preparation, model configuration, and evaluation steps to ensure optimal performance. The integration of these models allows institutions, researchers, and publishers to automate plagiarism checks, literature reviews, and content recommendation systems, streamlining workflows and elevating the quality of research management.

Designing Workflows to Train Machine Learning Models on Research Paper Datasets

Developing a robust workflow for training AI models begins with establishing a clear pipeline that manages data ingestion, preprocessing, model training, validation, and deployment. The workflow typically comprises the following key stages:

- Data Collection: Gather a diverse and representative dataset of research papers, including abstracts, full texts, and metadata. Sources may include open access repositories, institutional databases, or proprietary collections.

- Data Preprocessing: Clean and normalize textual data by removing noise, tokenizing, and converting text to lowercase. Create labeled pairs indicating whether documents are similar or dissimilar based on predefined criteria.

- Feature Extraction: Utilize tokenization and encoding techniques compatible with transformer models, such as WordPiece tokenization used in BERT.

- Model Selection and Configuration: Choose appropriate AI architectures, typically transformer-based models, and configure hyperparameters like learning rate, batch size, and number of epochs.

- Training and Validation: Split data into training, validation, and test sets. Monitor model performance on the validation set using metrics like accuracy, precision, recall, and F1 score, computed by thresholding the predicted cosine similarity scores, and adjust hyperparameters as needed.

- Deployment: Integrate the trained model into a system capable of real-time or batch similarity assessment, providing actionable outputs for end-users.

Implementing this workflow ensures a structured approach to developing reliable AI models capable of discerning subtle similarities across diverse research domains.
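
The data-splitting step of this workflow might look like the following sketch. The 80/10/10 proportions, the fixed seed, and the tuple layout of a labeled pair are assumptions, not requirements.

```python
import random

def split_pairs(pairs, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle labeled pairs and split them into train, validation, and test lists."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test

# Toy labeled pairs: (paper_a_id, paper_b_id, label), where label 1 means similar.
pairs = [(f"paper-{i}", f"paper-{i + 1}", i % 2) for i in range(20)]
train, val, test = split_pairs(pairs)
```

One design point to keep in mind: if the same paper appears in pairs on both sides of the split, test results can look better than they should, so splitting by paper rather than by pair may be preferable for some datasets.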

Using Pre-Trained Models Like BERT or SciBERT for Semantic Comparison

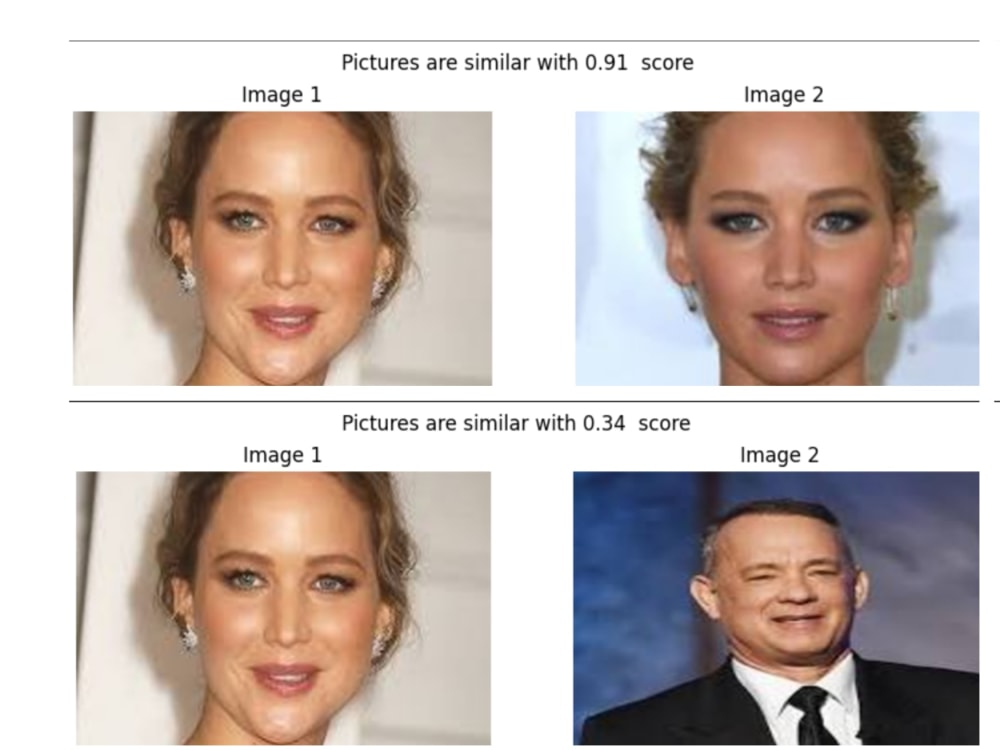

Pre-trained language models such as BERT and SciBERT serve as powerful foundations for semantic similarity detection, owing to their extensive training on large corpora and capacity to understand contextual nuances. SciBERT, specifically tailored for scientific literature, enhances the interpretative accuracy when comparing research papers in technical fields.

These models encode textual input into dense vector representations—embeddings—that capture semantic meanings. Comparing these embeddings through similarity metrics like cosine similarity enables the identification of closely related documents, even when phrased differently.

Applying pre-trained models often involves fine-tuning on domain-specific datasets to improve relevance and accuracy for particular scientific disciplines. Compared with training a model from scratch, this transfer learning approach reduces training time and resource requirements while retaining the semantic understanding gained during pre-training.

For example, encoding abstracts with SciBERT produces embeddings that, when compared, reveal high similarity scores for related studies, facilitating tasks such as literature review automation and plagiarism detection.

Sample Pseudocode for Model Training and Inference

Below is a structured outline, written as a PyTorch and Hugging Face Transformers sketch, of fine-tuning a transformer model like SciBERT for research paper similarity detection. The toy batches are a placeholder for a real dataset of labeled paper pairs:

    import torch
    import torch.nn.functional as F
    from transformers import AutoModel, AutoTokenizer

    # Load pre-trained SciBERT model and tokenizer
    MODEL_NAME = "allenai/scibert_scivocab_uncased"
    tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
    model = AutoModel.from_pretrained(MODEL_NAME)

    def embed(texts):
        """Tokenize a list of texts and mean-pool token embeddings, ignoring padding."""
        inputs = tokenizer(texts, padding=True, truncation=True,
                           max_length=512, return_tensors="pt")
        hidden = model(**inputs).last_hidden_state
        mask = inputs["attention_mask"].unsqueeze(-1).float()
        return (hidden * mask).sum(dim=1) / mask.sum(dim=1)

    # Prepare dataset of paper pairs with similarity labels.
    # Toy stand-in for a real 'research_paper_pairs' dataset:
    # each batch is (texts_a, texts_b, labels), with 1 for similar, 0 for dissimilar.
    train_batches = [
        (["abstract of paper A1", "abstract of paper A2"],
         ["abstract of paper B1", "abstract of paper B2"],
         [1, 0]),
    ]

    # Define training parameters
    NUM_EPOCHS = 3
    optimizer = torch.optim.AdamW(model.parameters(), lr=2e-5)
    # One possible contrastive-style loss on cosine similarity; expects targets +1 / -1
    loss_function = torch.nn.CosineEmbeddingLoss(margin=0.5)

    # Training loop
    model.train()
    for epoch in range(NUM_EPOCHS):
        for texts_a, texts_b, labels in train_batches:
            # Encode papers
            embeddings_a = embed(texts_a)
            embeddings_b = embed(texts_b)
            # Map labels 0/1 to -1/+1 and calculate loss
            targets = torch.tensor(labels, dtype=torch.float) * 2 - 1
            loss = loss_function(embeddings_a, embeddings_b, targets)
            # Backpropagation and optimization
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

    # Inference on a new paper pair
    def compute_similarity(paper_text1, paper_text2):
        model.eval()
        with torch.no_grad():
            embedding1 = embed([paper_text1])
            embedding2 = embed([paper_text2])
        return F.cosine_similarity(embedding1, embedding2).item()

This sketch demonstrates the core steps involved in leveraging pre-trained models for semantic similarity tasks: data preparation, model fine-tuning, and inference. Inputs longer than the model's 512-token limit are truncated, so full papers would need to be compared section by section or chunk by chunk.

Evaluating AI-based similarity detection

Assessing the performance of AI models in detecting similarities within research papers is crucial to ensure their reliability, effectiveness, and applicability in real-world scenarios. Proper evaluation not only helps in refining the models but also provides confidence to researchers and institutions relying on these tools for academic integrity, plagiarism detection, and literature review processes. Establishing well-defined metrics and validation methods enables a comprehensive understanding of the AI system’s strengths and limitations in similarity identification tasks.

In this section, the focus is on establishing evaluation criteria that measure the accuracy, precision, and recall of AI models used for similarity detection. Additionally, it discusses methods for validating AI outputs through manual reviews and benchmarking against trusted datasets. A comparative table illustrates the significance, advantages, and limitations of various evaluation metrics, providing a clear framework for assessing model performance effectively.

Criteria for Assessing Accuracy, Precision, and Recall

Effective evaluation of AI models relies on well-defined criteria that reflect their ability to correctly identify similar and dissimilar research papers. Accuracy, precision, and recall are fundamental metrics used in this context, each offering unique insights into the model’s performance.

- Accuracy: Represents the proportion of correctly identified pairs—both similar and dissimilar—out of the total pairs assessed. It provides a general overview of the model’s correctness but can be misleading if the dataset is imbalanced.

- Precision: Measures the proportion of pairs classified as similar that are genuinely similar. High precision indicates that when the AI model labels a pair as similar, it is highly reliable, minimizing false positives.

- Recall: Indicates the proportion of actual similar pairs that the model correctly detects. High recall ensures that most true similarities are captured, reducing false negatives.

Combining these metrics gives a comprehensive view of the model’s ability to discern true similarities accurately and efficiently, which is vital in research environments where precision and completeness are equally important.
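
The three metrics can be computed directly from the counts of true and false positives and negatives. The sketch below uses a made-up set of ten labeled pairs (1 for similar, 0 for dissimilar); the function name and the convention of returning 0.0 when a denominator is zero are assumptions.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, and recall for binary similar/dissimilar labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

# Imbalanced toy data: 3 similar pairs, 7 dissimilar pairs.
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]

model_metrics = classification_metrics(y_true, y_pred)
# accuracy 0.8, precision 2/3, recall 2/3

# A trivial baseline that never predicts "similar".
baseline_metrics = classification_metrics(y_true, [0] * len(y_true))
# accuracy 0.7, precision 0.0, recall 0.0
```

The baseline reaches 70% accuracy without finding a single similar pair, which is the imbalance problem described above and the reason accuracy should be read alongside precision and recall.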

Validating AI Outputs Through Manual Review and Benchmark Datasets

While quantitative metrics provide measurable insights, validating AI outputs often requires qualitative assessment to ensure contextual relevance and accuracy. Manual review involves domain experts examining a subset of the model’s predicted similar pairs to verify correctness, especially in nuanced cases where automated metrics may fall short.

In addition, benchmarking against established datasets enhances validation processes by comparing AI performance with predefined ground truths. Datasets such as the PAN Plagiarism Corpus or similar collections of research papers with annotated similarities serve as reliable standards. These benchmarks enable developers to identify model weaknesses, adjust parameters, and improve overall performance.

“A balanced approach that combines quantitative evaluation with expert manual review ensures the robustness and reliability of AI-based similarity detection systems.”

Regular validation against benchmark datasets and expert scrutiny helps maintain high standards, prevents overfitting to specific data patterns, and supports continuous improvement of AI models in research paper analysis.

Comparison of Evaluation Metrics and Their Significance

| Metric | Description | Significance | Advantages | Limitations |
|---|---|---|---|---|
| Accuracy | Proportion of correctly classified pairs (both similar and dissimilar). | Provides overall correctness of the model. | Simple to calculate and interpret. | Can be misleading in imbalanced datasets where one class dominates. |
| Precision | Proportion of true positive similar pairs out of all pairs classified as similar. | Assesses the reliability of positive similarity predictions. | Reduces false positives, crucial when false alarms are costly. | May overlook many true similar pairs if recall is low. |
| Recall | Proportion of actual similar pairs correctly identified by the model. | Measures the model’s ability to detect most true similarities. | Important in contexts where missing true similarities is critical. | Can lead to higher false positive rates if precision drops. |

Choosing appropriate evaluation metrics depends on the specific objectives of the similarity detection task. For instance, in academic integrity applications, high recall might be prioritized to ensure no plagiarized work goes unnoticed, whereas in curated literature reviews, high precision might be more desirable to prevent false identifications.

Practical Applications and Tools

In the realm of academic research, the ability to efficiently identify similarities between research papers is crucial for maintaining originality, avoiding unintentional plagiarism, and streamlining literature reviews. To facilitate these objectives, a variety of AI-powered tools and platforms have emerged, offering researchers sophisticated capabilities for similarity detection. Integrating these tools into existing research workflows enhances their effectiveness, ensures consistency, and accelerates the process of scholarly evaluation.

Understanding the practical applications of AI in this context helps researchers and institutions leverage cutting-edge technology to improve quality assurance, detect overlaps in scientific publications, and promote academic integrity. The following sections detail some of the most prominent AI tools available today, the procedures for seamless integration into research environments, and essential steps for deploying AI models in real-world scenarios.

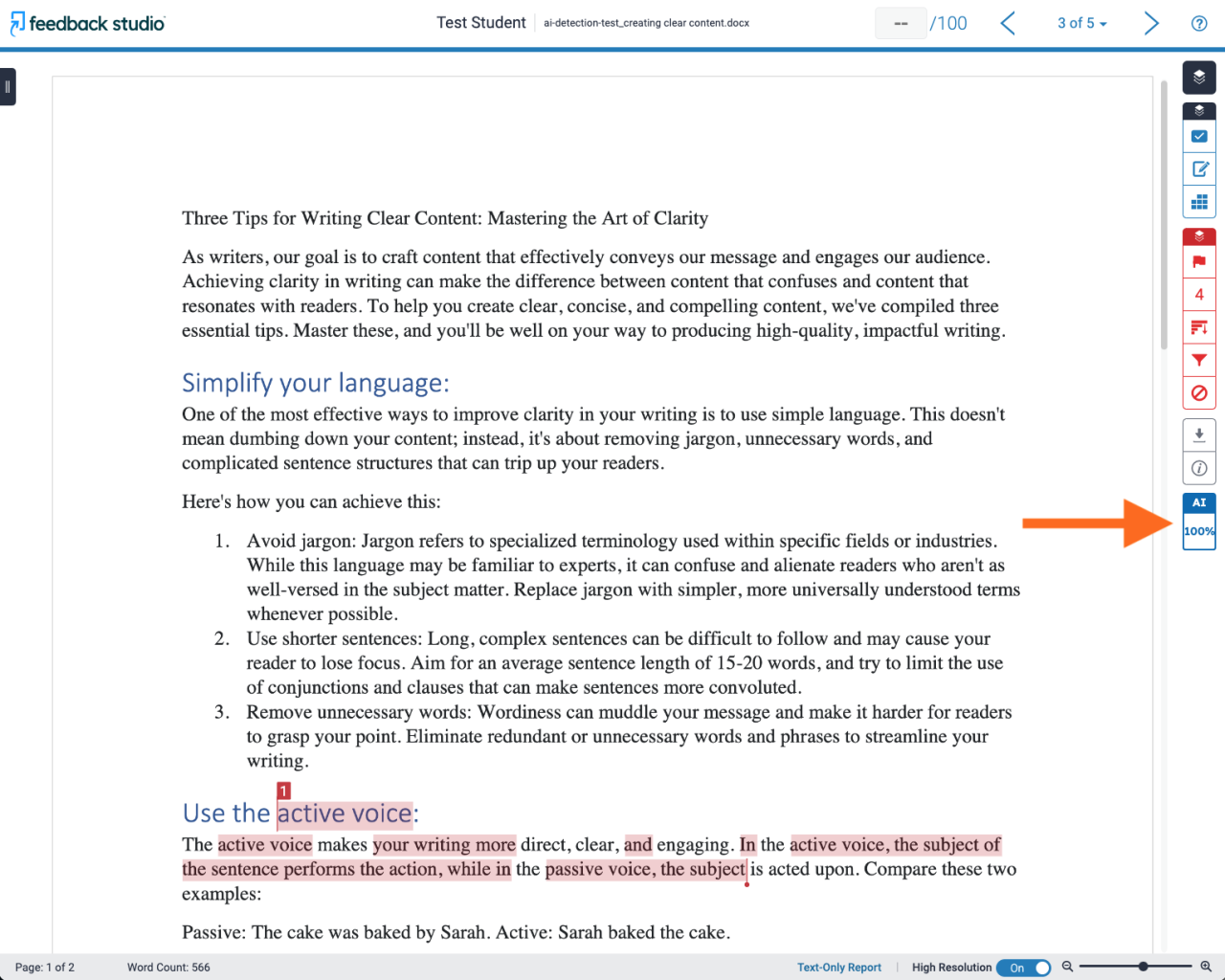

Existing AI-powered Tools and Platforms for Similarity Detection

Several advanced platforms utilize artificial intelligence to facilitate the detection of similarities in research papers, each tailored to meet specific needs of academic institutions, publishers, and individual researchers. These tools combine natural language processing, machine learning algorithms, and large-scale databases to deliver accurate and scalable solutions.

- Turnitin: Widely used in educational institutions, Turnitin leverages AI to compare submitted manuscripts against extensive repositories of academic papers, internet sources, and previous submissions. Its AI-driven algorithms identify potential overlaps and provide detailed similarity reports.

- iThenticate: Primarily aimed at publishers and researchers, iThenticate utilizes sophisticated AI models to scrutinize scholarly manuscripts, detecting similarities with a vast database of published literature across disciplines. It offers customizable similarity thresholds and detailed analysis tools.

- CrossRef Similarity Check: Powered by iThenticate, this platform is integrated into the publishing workflow. It allows publishers to verify the originality of manuscripts before publication, utilizing advanced AI algorithms for precise similarity detection.

- Grammarly: While primarily known for grammar checking, Grammarly also offers a plagiarism checker that compares text against web pages and published works, which can be useful for preliminary screening.

- Custom AI Solutions: Several institutions develop proprietary AI models tailored to their specific research domains and datasets. These often involve training deep learning models like BERT, RoBERTa, or SciBERT on relevant corpora for optimized similarity detection.

These tools are typically accessible via web-based interfaces or API integrations, enabling seamless incorporation into existing research workflows or plagiarism detection systems.

Procedures for Integrating AI Models into Research Workflows or Plagiarism Detection Systems

Effective integration of AI-based similarity detection tools requires strategic planning and systematic implementation. The goal is to embed these solutions within research processes to automate checks, streamline reviews, and enhance accuracy.

- Assess Requirements and Compatibility: Identify the specific needs of your research environment, such as the scope, scale, and desired accuracy. Verify compatibility with existing systems, whether they are manuscript submission platforms, learning management systems, or custom research databases.

- Choose Appropriate AI Tools or Develop Custom Models: Select from available tools or develop bespoke models based on your domain-specific datasets. Consider factors like API availability, scalability, and support for your institution’s infrastructure.

- Prepare and Preprocess Data: Standardize document formats, remove irrelevant information, and organize datasets for training or comparison. Proper data preprocessing enhances model performance and detection accuracy.

- Integrate via API or Embedded Modules: Utilize APIs, SDKs, or plugin modules to connect AI tools with your existing workflows. For custom models, embed inference scripts into research management systems or plagiarism detection pipelines.

- Test and Validate: Conduct pilot tests using a representative sample of research papers. Fine-tune thresholds and parameters based on initial results to optimize sensitivity and specificity.

- Automate and Monitor: Enable automated checks during manuscript submission or research review stages. Continuously monitor performance, update models with new data, and refine detection parameters as needed.
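
The threshold tuning mentioned in the testing step could be approached as in the sketch below, which picks the similarity cutoff with the best F1 score on a labeled pilot sample. The scores, labels, and candidate thresholds are invented for illustration, and F1 is only one possible selection criterion.

```python
def f1_at_threshold(scores, labels, threshold):
    """F1 score when pairs scoring at or above the threshold are flagged as similar."""
    predicted = [1 if score >= threshold else 0 for score in scores]
    tp = sum(1 for p, y in zip(predicted, labels) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(predicted, labels) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(predicted, labels) if p == 0 and y == 1)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def best_threshold(scores, labels, candidates):
    """Return the candidate threshold with the highest F1 on the pilot sample."""
    return max(candidates, key=lambda t: f1_at_threshold(scores, labels, t))

# Hypothetical pilot sample: model similarity scores and expert labels (1 = similar).
pilot_scores = [0.95, 0.88, 0.81, 0.74, 0.62, 0.55, 0.41, 0.30]
pilot_labels = [1, 1, 1, 0, 1, 0, 0, 0]
candidates = [0.5, 0.6, 0.7, 0.8, 0.9]

threshold = best_threshold(pilot_scores, pilot_labels, candidates)  # 0.6 for this sample
```

A team that prioritizes recall, for example in integrity checks, might instead choose the highest threshold that still meets a minimum recall target.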

Steps to Deploy AI Models in Real-World Scenarios

Deploying AI models for similarity detection in practical environments involves a series of systematic steps to ensure reliability, scalability, and ease of use. Below are essential steps to facilitate a successful deployment:

- Define Deployment Objectives: Clarify whether the focus is on pre-publication plagiarism checks, literature review assistance, or ongoing research monitoring. Objectives influence model selection and system architecture.

- Prepare Infrastructure and Resources: Ensure access to necessary computational resources, such as cloud services or dedicated servers, capable of handling large-scale document processing.

- Implement Data Security and Privacy Measures: Establish protocols to secure sensitive research data, comply with institutional policies, and address privacy concerns, especially when handling unpublished manuscripts.

- Integrate with Existing Platforms: Connect AI models with current research management systems or repositories through APIs, plugins, or custom interfaces. Ensure seamless data flow and user accessibility.

- Train and Calibrate the Models: Fine-tune models using domain-specific datasets, and calibrate similarity thresholds to balance false positives and negatives effectively.

- Conduct Pilot Runs and Gather Feedback: Deploy initial versions in controlled environments, gather user feedback, and identify areas for improvement.

- Scale Deployment and Maintain: Expand the deployment to full-scale systems, implement routine updates, and establish monitoring dashboards for ongoing performance evaluation.

Successful deployment hinges on continuous evaluation and adaptation of AI models to evolving research landscapes and data sources, ensuring sustained accuracy and utility in academic settings.
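
As a simplified picture of a batch check at submission time, the sketch below scores a new manuscript against a small corpus and returns the entries above a threshold. Bag-of-words cosine similarity stands in for whatever model is actually deployed, and the corpus, paper IDs, and 0.5 threshold are hypothetical.

```python
import math
import re
from collections import Counter

def bag_of_words(text):
    """Lowercase the text and count alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(
        sum(c * c for c in b.values())
    )
    return dot / norm if norm else 0.0

def screen_submission(submission, corpus, threshold=0.5):
    """Return (paper_id, score) for corpus entries at or above the threshold, highest first."""
    query = bag_of_words(submission)
    scored = [(pid, cosine(query, bag_of_words(text))) for pid, text in corpus.items()]
    flagged = [(pid, score) for pid, score in scored if score >= threshold]
    return sorted(flagged, key=lambda item: item[1], reverse=True)

# Hypothetical corpus of previously published abstracts.
corpus = {
    "paper-A": "Transformer embeddings improve plagiarism detection in scientific abstracts",
    "paper-B": "Sediment transport in river deltas under seasonal flooding",
}
flagged = screen_submission(
    "Plagiarism detection in scientific abstracts using transformer embeddings", corpus
)
# flagged == [("paper-A", 0.875)]
```

In a real deployment the flagged list would feed a report for human review rather than trigger an automatic decision.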

Ethical considerations and limitations

The integration of AI techniques in the detection of similarities within research papers presents significant ethical challenges and inherent limitations. As AI models become more sophisticated in analyzing complex textual data, it is crucial to address potential biases, privacy issues, and the overall fairness of the systems employed. Recognizing and mitigating these concerns ensures that AI tools serve the research community responsibly and equitably, fostering trust and integrity in scholarly assessments.

AI models, when trained on datasets that lack diversity or contain biased information, risk perpetuating existing prejudices. These biases can skew similarity detection results, unfairly favoring certain research outputs over others, and may inadvertently marginalize minority perspectives or underrepresented fields. Such biases could influence critical decisions like grant allocations, publication acceptances, or academic evaluations, thereby impacting the fairness of research assessment processes.

Privacy concerns emerge prominently when handling sensitive research data, particularly in fields involving proprietary information, confidential methodologies, or unpublished results. Unauthorized access or inadequate data protection measures can lead to breaches, compromising research integrity and individual privacy. It is imperative to implement strict data governance policies and anonymization techniques to safeguard information throughout the similarity detection process.

To address these ethical considerations, best practices include adopting transparent AI models whose decision-making processes can be audited, continuously monitoring for bias, and involving human oversight to validate AI outputs. Maintaining a balanced dataset that reflects diverse research themes and methodologies helps reduce bias. Additionally, employing robust data encryption, anonymization, and secure storage practices ensures privacy is upheld. Establishing clear guidelines and accountability frameworks guides developers and users in deploying AI tools ethically, ultimately promoting fairness and trustworthiness in research evaluations.
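
One way to approach the anonymization practice mentioned above is to replace identifying metadata with keyed hashes before records enter the similarity pipeline. The sketch below is an assumption-laden illustration: the field names, the 16-character truncation, and the example record are hypothetical. Keyed hashing is pseudonymization rather than full anonymization, since anyone holding the key can re-link the values.

```python
import hashlib
import hmac

def pseudonymize(value, secret_key):
    """Replace a value with a truncated HMAC-SHA256 digest (stable for a given key)."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]

def strip_identifiers(record, secret_key, sensitive_fields=("author", "email")):
    """Return a copy of the record with sensitive fields pseudonymized."""
    cleaned = dict(record)
    for field in sensitive_fields:
        if field in cleaned:
            cleaned[field] = pseudonymize(cleaned[field], secret_key)
    return cleaned

# Hypothetical manuscript record and key; store the real key outside the dataset.
SECRET_KEY = b"replace-with-a-securely-stored-key"
record = {
    "paper_id": "paper-001",
    "author": "Jane Doe",
    "email": "jane@example.org",
    "abstract": "We study similarity detection.",
}
cleaned = strip_identifiers(record, SECRET_KEY)
```

Because the same input always maps to the same pseudonym under a given key, records from one author can still be grouped for analysis without exposing the name itself.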

Conclusion

In conclusion, the integration of AI in similarity detection significantly enhances the accuracy and efficiency of research analysis. By adopting these advanced methods, researchers and institutions can uphold academic integrity, foster originality, and facilitate more insightful scholarly collaborations. As technology continues to evolve, embracing AI-driven solutions will remain a cornerstone of responsible and innovative research practices.