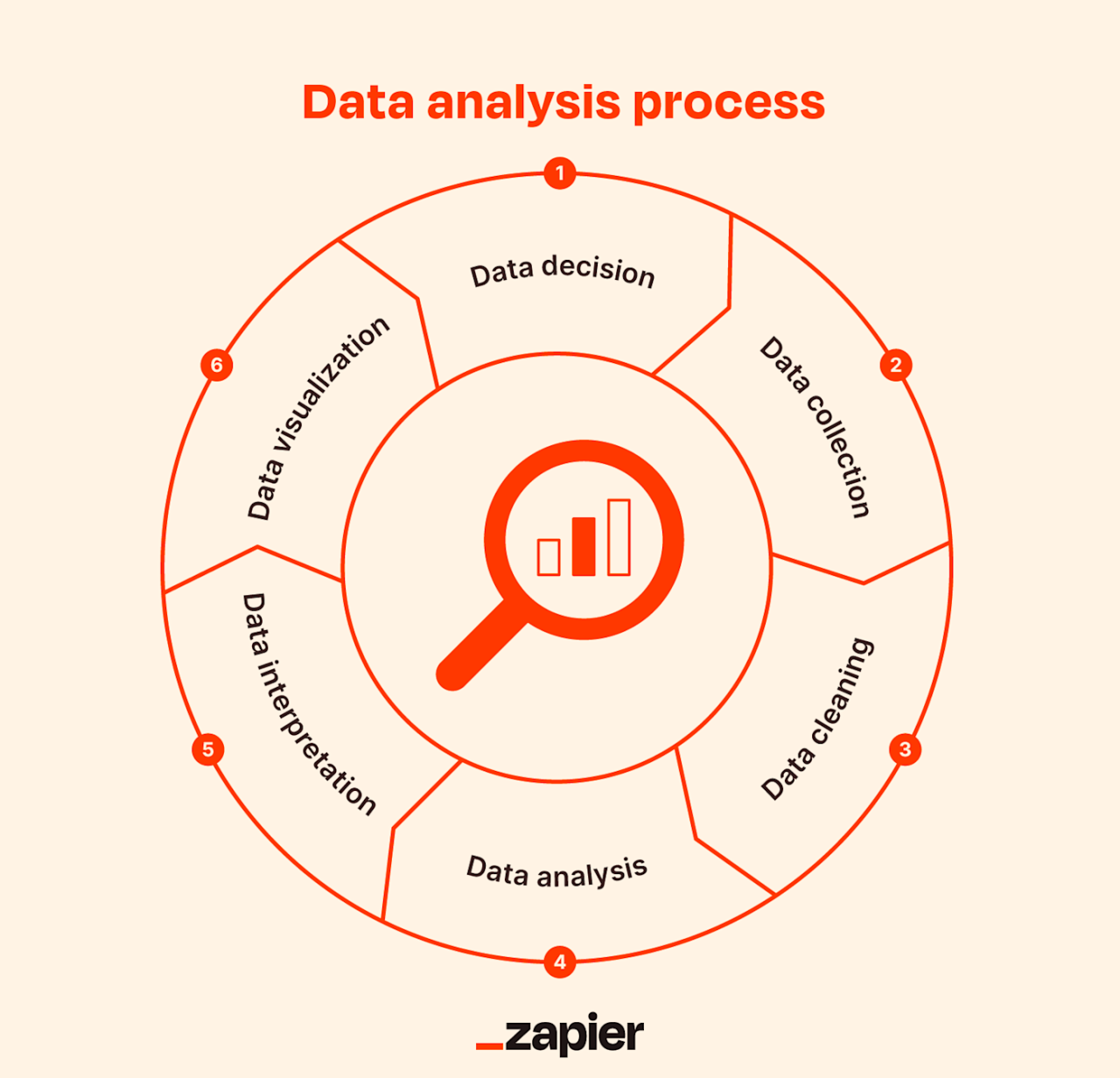

Analyzing interview transcripts with AI opens new avenues for extracting insights efficiently. Artificial intelligence lets organizations and researchers process large volumes of interview data far faster than manual review, and with more consistent coding from one transcript to the next. This saves time and can deepen the analysis by surfacing patterns, sentiments, and key themes that might otherwise remain hidden.

From preparing transcripts for optimal processing to employing advanced natural language processing techniques, mastering how to analyze interview transcripts with AI can significantly improve decision-making, research quality, and overall data interpretation. This guide provides a comprehensive overview of the essential steps and best practices to harness AI’s potential in transcript analysis.

Understanding the Fundamentals of Analyzing Interview Transcripts with AI

Analyzing interview transcripts with artificial intelligence (AI) involves leveraging advanced computational techniques to interpret and extract meaningful insights from large volumes of qualitative data. This approach transforms traditionally manual processes into efficient, scalable solutions that facilitate deeper understanding of interview content, sentiments, and underlying themes. Grasping these fundamental principles is crucial for anyone aiming to utilize AI effectively in qualitative research, HR assessments, or market analysis.

At its core, AI-driven transcript analysis hinges on natural language processing (NLP) and machine learning algorithms. These technologies enable computers to comprehend human language, identify patterns, and classify data according to predefined criteria. Automation of this process not only accelerates data handling but also enhances accuracy and consistency, minimizing human biases and errors. As a result, organizations can process thousands of interview transcripts swiftly, unlocking valuable insights that inform decision-making and strategic planning.

Core Principles of AI-Based Transcript Analysis

Understanding the core principles underlying AI analysis of interview transcripts involves recognizing key technological components and methodological approaches that make this possible. These include:

- Natural Language Processing (NLP): The foundation of AI transcript analysis, NLP encompasses tasks such as tokenization, part-of-speech tagging, entity recognition, and syntactic parsing. These processes enable the AI to understand sentence structures, identify key entities (e.g., names, dates, organizations), and interpret context.

- Sentiment Analysis: This technique assesses the emotional tone behind textual data, determining whether responses are positive, negative, or neutral. It helps gauge participant attitudes and opinions, which are often vital in market research or employee feedback studies.

- Topic Modeling: Algorithms like Latent Dirichlet Allocation (LDA) identify prevalent themes or topics within transcripts. This facilitates the identification of recurring ideas, concerns, or interests expressed during interviews.

- Classification and Clustering: Machine learning models categorize responses into predefined categories or cluster similar responses together. This enables segmentation of data for more targeted analysis.

Benefits of Automating Interview Data Processing

Automation through AI offers significant advantages over manual transcript analysis, particularly in handling large datasets. These benefits include:

- Enhanced Efficiency: AI drastically reduces the time required to analyze extensive interview collections, transforming multi-hour tasks into minutes or seconds.

- Improved Consistency: Automated processes apply the same criteria to every transcript, which reduces the analyst-to-analyst variation of manual coding. Models can still carry biases from their training data, so consistency is not the same as neutrality.

- Deeper Insights: AI techniques uncover subtle patterns, sentiments, and themes that might be overlooked during manual review, leading to richer, more nuanced understanding.

- Cost-Effectiveness: Reducing the need for extensive human labor makes large-scale transcript analysis more economical, freeing resources for other strategic activities.

Types of Insights Extracted Using AI from Transcripts

AI-powered analysis unlocks a variety of insights that are invaluable for research and decision-making. These insights include:

- Sentiment Trends: Tracking changes in participant attitudes over time or across different interview groups helps assess overall satisfaction, engagement, or concern levels.

- Key Themes and Topics: Identifying dominant themes within responses guides understanding of participant priorities, challenges, or interests.

- Emotion and Tone Analysis: Beyond basic sentiment, AI can detect specific emotions such as frustration, enthusiasm, or confusion, providing a more detailed emotional profile.

- Response Patterns and Behavioral Indicators: Recognizing repeated phrases or response structures can reveal underlying motivations or biases, aiding in behavioral insights.

- Predictive Insights: In some cases, cues in interview data can feed forecasts of future trends, such as potential customer churn or employee retention risks.

Utilizing AI for interview transcript analysis transforms qualitative data into actionable intelligence, enabling organizations to make informed decisions swiftly and accurately.

Preparing Interview Transcripts for AI Processing

Proper preparation of interview transcripts is a crucial step to ensure effective and accurate analysis using AI tools. This stage involves cleaning, organizing, and annotating the data to facilitate meaningful insights. Well-structured transcripts enable AI algorithms to interpret and extract valuable information efficiently, reducing errors and improving overall analysis quality.

By investing time in preparing transcripts appropriately, researchers and analysts can leverage AI capabilities to identify patterns, sentiments, and themes within interview data. This process enhances the reliability of the insights generated, providing a solid foundation for decision-making, reporting, and further research.

Cleaning and Formatting Transcripts for Optimal Analysis

Cleaning and formatting are essential for transforming raw interview data into a standardized, machine-readable format. This process involves multiple steps aimed at removing inconsistencies and ensuring clarity.

- Remove irrelevant content: Eliminate filler words, pauses, and non-essential conversation segments such as background noise descriptions or interruptions that do not contribute to the core data.

- Correct transcription errors: Manually review transcripts for misheard words or typographical mistakes, especially in automated transcriptions, to prevent misinterpretations by AI algorithms.

- Standardize formatting: Use consistent spelling, punctuation, and capitalization. Maintain uniform styles for speakers’ labels and timestamps if used.

- Normalize language: Convert contractions, abbreviations, and colloquial expressions into their formal or standardized equivalents to improve analysis clarity.

- Segment transcripts: Break lengthy transcripts into manageable sections or chunks based on topics or speakers to facilitate focused analysis.
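The cleaning steps above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library. The filler list, the contraction map, and the function name `clean_utterance` are hypothetical placeholders chosen for this example, not a complete vocabulary or an established API.

```python
import re

# Hypothetical filler list and contraction map; extend both for your own data.
FILLERS = ("um", "uh", "er", "you know")
CONTRACTIONS = {"i'm": "I am", "it's": "it is", "don't": "do not", "can't": "cannot"}


def clean_utterance(text: str) -> str:
    """Apply basic cleaning steps to a single utterance."""
    # Drop bracketed non-speech notes such as [background noise].
    text = re.sub(r"\[[^\]]*\]", " ", text)
    # Standardize curly apostrophes so the contraction map matches.
    text = text.replace("\u2019", "'")
    # Expand contractions. Casing follows the map, so a sentence-initial
    # "It's" becomes "it is"; re-capitalize afterwards if that matters.
    for short, full in CONTRACTIONS.items():
        text = re.sub(rf"\b{re.escape(short)}\b", full, text, flags=re.IGNORECASE)
    # Remove filler words along with a trailing comma, if any.
    filler_pattern = "|".join(re.escape(filler) for filler in FILLERS)
    text = re.sub(rf"\b(?:{filler_pattern})\b,?", " ", text, flags=re.IGNORECASE)
    # Collapse whitespace and remove stray spaces before punctuation.
    text = re.sub(r"\s+", " ", text).strip()
    text = re.sub(r"\s+([,.!?])", r"\1", text)
    return text


cleaned = clean_utterance("Um, I'm [background noise] happy with it, you know, it's fast.")
```

Rule-based cleaning like this is easy to audit, but it can also strip words that carry meaning in context, so it is worth spot-checking the output against the raw transcript.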

Organizing Transcripts into Structured Formats

Structured data formats enable AI models to interpret interview content systematically. Organizing transcripts into tables, JSON, or similar formats enhances data accessibility and analysis precision.

Structured organization involves converting the unstructured transcript into a format where each segment is clearly identifiable. For example, JSON files can encapsulate speaker labels, timestamps, and spoken content within nested objects, allowing precise querying and filtering.

Example of JSON structure:

    {
      "interview": [
        {
          "speaker": "Interviewer",
          "timestamp": "00:00:05",
          "text": "Can you describe your experience with our product?"
        },
        {
          "speaker": "Respondent",
          "timestamp": "00:00:12",
          "text": "Certainly, I have used it for over a year, and it has significantly improved my workflow."
        }
      ]
    }

Using such structured formats facilitates targeted analysis, such as extracting all responses from a specific speaker or analyzing responses within a particular timeframe.
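As a rough illustration of such targeted queries, the sketch below loads a transcript and filters it by speaker and by time window. It assumes the segments are stored as a list under an `interview` key with `speaker`, `timestamp`, and `text` fields. The helper names (`to_seconds`, `by_speaker`, `in_window`) are made up for this example.

```python
import json

raw = """
{
  "interview": [
    {"speaker": "Interviewer", "timestamp": "00:00:05",
     "text": "Can you describe your experience with our product?"},
    {"speaker": "Respondent", "timestamp": "00:00:12",
     "text": "Certainly, I have used it for over a year, and it has significantly improved my workflow."}
  ]
}
"""


def to_seconds(timestamp: str) -> int:
    """Convert an HH:MM:SS timestamp to a number of seconds."""
    hours, minutes, seconds = (int(part) for part in timestamp.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def by_speaker(segments, speaker):
    """Return every segment spoken by the given speaker."""
    return [seg for seg in segments if seg["speaker"] == speaker]


def in_window(segments, start, end):
    """Return segments whose timestamp falls within [start, end]."""
    lo, hi = to_seconds(start), to_seconds(end)
    return [seg for seg in segments if lo <= to_seconds(seg["timestamp"]) <= hi]


segments = json.loads(raw)["interview"]
respondent_turns = by_speaker(segments, "Respondent")
early_turns = in_window(segments, "00:00:00", "00:00:10")
```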

Annotating or Tagging Key Sections within Transcripts

Annotation and tagging enhance the interpretability of transcripts by highlighting key sections, themes, or sentiments. This process involves marking specific parts of the transcript to denote their significance, making subsequent analysis more focused and efficient.

- Identify themes or topics: Tag sections where specific topics are discussed, such as product features, customer satisfaction, or challenges faced.

- Mark sentiment shifts: Use tags to denote positive, negative, or neutral sentiments expressed in particular segments, aiding sentiment analysis.

- Highlight key statements or quotes: Tag important responses, recommendations, or insights that can be used for reporting or case studies.

- Utilize standardized tags: Develop a tagging schema for consistency, such as <theme:pricing> or <issue:delivery>.

- Leverage annotation tools: Employ software that facilitates tagging directly within transcripts, enabling easier management and export of annotated data for AI processing.

Effective annotation ensures that critical information is not overlooked during analysis and provides context that enhances the AI’s ability to interpret data accurately. It also streamlines the process of filtering relevant data points, making insights more actionable and timely.
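A tagging schema of this kind is straightforward to parse. The sketch below assumes tags of the form `<category:value>` are written inline at the start of each annotated line. That placement, the sample lines, and the function names are assumptions for illustration only.

```python
import re
from collections import defaultdict

# Matches tags such as <theme:pricing> or <issue:delivery>.
TAG_PATTERN = re.compile(r"<(\w+):([\w-]+)>")


def parse_annotated_line(line: str):
    """Return (tags, text), where tags is a list of (category, value) pairs."""
    tags = TAG_PATTERN.findall(line)
    # Remove the tags and tidy the remaining whitespace.
    text = re.sub(r"\s+", " ", TAG_PATTERN.sub("", line)).strip()
    return tags, text


def index_by_tag(lines):
    """Map each 'category:value' tag to the texts that carry it."""
    index = defaultdict(list)
    for line in lines:
        tags, text = parse_annotated_line(line)
        for category, value in tags:
            index[f"{category}:{value}"].append(text)
    return dict(index)


annotated = [
    "<theme:pricing> The monthly plan feels expensive for small teams.",
    "<issue:delivery> <theme:pricing> Shipping fees were higher than expected.",
    "<issue:delivery> The package arrived a week late.",
]
index = index_by_tag(annotated)
pricing_quotes = index["theme:pricing"]
```

Keeping tags in a fixed, machine-readable pattern is what makes this kind of filtering possible; free-form margin notes would need manual handling.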

Techniques for Applying AI to Transcripts

Applying AI effectively to the analysis of interview transcripts requires a strategic approach to natural language processing (NLP) tools. These techniques enable researchers and analysts to derive meaningful insights from large volumes of qualitative data, streamlining processes that traditionally demanded significant manual effort. Mastering these methods enhances the quality, accuracy, and depth of transcript analysis, facilitating comprehensive understanding of interview content across diverse contexts.

Applying AI to transcripts involves various sophisticated techniques designed to segment, interpret, and synthesize textual data. These methods include content segmentation for logical division, automatic extraction of themes and sentiments, as well as pattern recognition across multiple transcripts. The following sections detail practical strategies and templates that can be adopted to optimize AI-driven transcript analysis for research, market insights, or organizational feedback.

Utilizing Natural Language Processing Tools for Content Segmentation

Effective content segmentation is foundational for meaningful transcript analysis. NLP tools can automatically divide transcripts into logical units—such as topics, speaker turns, or contextual segments—based on linguistic cues or predefined markers. This process facilitates targeted analysis and enhances interpretability. Techniques include:

- Sentence and paragraph boundary detection: Utilizing algorithms that identify punctuation cues, conjunctions, or discourse markers to segment text into coherent units.

- Topic modeling algorithms: Implementing tools like Latent Dirichlet Allocation (LDA) to group related sentences or paragraphs into thematic clusters, enabling quick identification of dominant conversation themes.

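- Speaker diarization: Attributing each contribution to the speaker who made it. Diarization is normally performed on the audio during transcription; in a finished transcript the same separation is recovered from speaker labels. It is especially valuable in multi-party interviews or panel discussions.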

Content segmentation enhances the precision of subsequent analysis steps, allowing AI to focus on relevant sections and reducing noise in the data.
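A minimal sketch of punctuation-based sentence splitting combined with speaker-turn separation follows. It assumes each turn starts on a new line in the form `Speaker: text`, which is an assumption about the transcript layout rather than a standard. The regular expressions are deliberately simple and will mis-split abbreviations such as "Dr. Smith".

```python
import re

# Split after ., ! or ? when followed by whitespace and a capital letter or quote.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+(?=[A-Z\"'])")
# A turn is assumed to look like "Speaker Name: utterance".
TURN = re.compile(r"^(?P<speaker>[A-Za-z ]+):\s*(?P<text>.+)$")


def split_sentences(text: str):
    """Split text into sentences using punctuation cues."""
    return [part.strip() for part in SENTENCE_END.split(text) if part.strip()]


def split_turns(transcript: str):
    """Group a transcript into speaker turns, each with its sentences."""
    turns = []
    for line in transcript.splitlines():
        line = line.strip()
        match = TURN.match(line)
        if match:
            turns.append({
                "speaker": match["speaker"],
                "sentences": split_sentences(match["text"]),
            })
        elif turns and line:
            # A line without a speaker label continues the previous turn.
            turns[-1]["sentences"].extend(split_sentences(line))
    return turns


sample = (
    "Interviewer: How was onboarding? Did anything stand out?\n"
    "Respondent: It was smooth. The setup guide helped a lot!"
)
turns = split_turns(sample)
```

For production use, a trained sentence segmenter from an NLP library will generally handle abbreviations, ellipses, and quoted speech better than a hand-written pattern.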

Creating Templates for Extracting Themes, Sentiments, and Keywords

Structured templates streamline the extraction of critical insights from interview transcripts, ensuring consistency and efficiency. These templates serve as frameworks for automating the identification of recurring themes, emotional tones, and salient keywords. Incorporating predefined categories increases the reproducibility of analysis and facilitates comparative studies across multiple transcripts.

| Component | Description | Example |
|---|---|---|
| Theme Extraction | Identify overarching topics discussed by the interviewee, categorizing statements into relevant themes such as ‘Customer Satisfaction’, ‘Product Features’, or ‘Market Trends’. | “The main concern expressed was the need for faster delivery times,” indicating a theme related to logistics efficiency. |
| Sentiment Analysis | Assess the emotional tone of statements, classifying sentiments as positive, negative, or neutral, possibly with intensity scores. | “I am disappointed with the recent service delays,” reflects negative sentiment. |
| Keyword Identification | Extract high-frequency or significant words and phrases that capture core concepts or issues within the transcript. | Keywords like ‘latency’, ‘support’, ‘pricing’ help identify focal points of discussion. |

Designing clear templates ensures systematic extraction, making large-scale analysis more manageable and insightful.
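One way to picture such a template is a function that fills the same three fields for every statement. The sketch below uses tiny hand-written word lists, all of which are illustrative assumptions; a real project would replace them with a curated lexicon or a trained model. It is a lexicon-based approximation, not a full sentiment or topic model.

```python
import re
from collections import Counter

# Illustrative word lists (assumptions for this sketch, not a real lexicon).
POSITIVE = {"improved", "great", "helpful", "fast", "satisfied"}
NEGATIVE = {"disappointed", "delays", "slow", "confusing", "expensive"}
STOPWORDS = {"the", "a", "an", "i", "am", "is", "was", "with", "and",
             "of", "to", "for", "it", "my", "has"}
THEME_KEYWORDS = {
    "Logistics": {"delivery", "delays", "shipping"},
    "Pricing": {"pricing", "price", "expensive"},
}


def tokenize(text: str):
    """Lowercase the text and return its word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def analyze(statement: str, top_n: int = 3):
    """Fill the template: themes, sentiment (with score), and keywords."""
    tokens = tokenize(statement)
    # Sentiment score: positive word count minus negative word count.
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    sentiment = "positive" if score > 0 else "negative" if score < 0 else "neutral"
    # A theme applies when any of its trigger words occurs in the statement.
    themes = sorted(name for name, words in THEME_KEYWORDS.items() if words & set(tokens))
    # Keywords: the most frequent tokens that are not stopwords.
    counts = Counter(t for t in tokens if t not in STOPWORDS)
    keywords = [word for word, _ in counts.most_common(top_n)]
    return {"themes": themes, "sentiment": sentiment, "score": score, "keywords": keywords}


result = analyze("I am disappointed with the recent service delays.", top_n=4)
```

Because every statement passes through the same function, results stay comparable across transcripts, which is the main point of using a template.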

Designing Procedures for Identifying Patterns and Recurring Topics Across Transcripts

Detecting patterns and recurring topics across multiple transcripts provides valuable insights into consistent themes, emerging issues, or common sentiments within a dataset. Structured procedures enable scalable analysis, supporting decision-making, strategic planning, or product development.

- Data preparation: Compile and standardize transcripts to ensure uniform formatting and language use, facilitating better AI performance.

- Batch processing: Use NLP pipelines to process large volumes of transcripts simultaneously, applying content segmentation and initial theme extraction.

- Pattern recognition algorithms: Deploy clustering techniques such as K-means or hierarchical clustering on extracted features like keywords, sentiment scores, or thematic labels.

- Visualization tools: Use heatmaps, word clouds, or network graphs to visualize recurring topics and their relationships, enabling quick pattern recognition.

- Iterative refinement: Continuously refine models based on validation with manual annotations or domain knowledge, improving pattern detection precision.

Systematic analysis of patterns across transcripts reveals insights that might remain hidden in isolated examination, informing strategic actions and highlighting prevalent issues or opportunities.
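As a simple starting point before clustering, recurring topics can be approximated by counting how many transcripts mention each term (its document frequency). The sketch below does only that; it is a much simpler stand-in for K-means or hierarchical clustering, and the stopword list, sample transcripts, and `min_share` threshold are assumptions for illustration.

```python
import re
from collections import Counter

STOPWORDS = {"the", "and", "but", "was", "are", "for", "with"}


def terms(text: str):
    """Return the set of distinct content words in a transcript."""
    words = re.findall(r"[a-z]+", text.lower())
    return {w for w in words if w not in STOPWORDS and len(w) > 2}


def recurring_terms(transcripts: dict, min_share: float = 0.5):
    """Return terms that occur in at least min_share of the transcripts."""
    doc_freq = Counter()
    for text in transcripts.values():
        # Updating with a set counts each term once per transcript.
        doc_freq.update(terms(text))
    threshold = min_share * len(transcripts)
    return {term: count for term, count in doc_freq.items() if count >= threshold}


transcripts = {
    "P001": "Delivery was slow and support never replied.",
    "P002": "Support was friendly but delivery took weeks.",
    "P003": "Pricing is fair, and support answered quickly.",
}
recurring = recurring_terms(transcripts, min_share=0.6)
```

Here `recurring` maps each shared term to the number of transcripts that contain it, which gives a quick list of candidates to examine with the heavier techniques listed above.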

Utilizing HTML Tables for Data Presentation

Effective presentation of interview transcript analysis results is crucial for clear interpretation and decision-making. HTML tables serve as a valuable tool to organize, categorize, and compare insights derived from interview data, especially when utilizing AI-driven analysis. They facilitate a structured display that enhances readability and enables stakeholders to easily review key themes, participant responses, and contextual details within a unified format.

Incorporating HTML tables into your analysis workflow allows for systematic organization of complex qualitative data. By structuring insights into tabular formats, analysts can identify patterns, compare responses across different participants, and highlight recurring themes efficiently. This approach not only improves clarity but also supports better communication of findings to diverse audiences, including clients, team members, or academic reviewers.

Developing HTML Table Structures for Categorized Data

Designing effective HTML tables involves creating responsive and well-organized structures that accommodate various data categories such as themes, responses, and participant details. The goal is to enable seamless review and comparison, regardless of device or screen size. Properly formatted tables should include informative headers, clear rows, and columns, with attention to accessibility and readability.

- Use `<table>` tags to define the table structure, ensuring each table includes a `<thead>` for headers and `<tbody>` for data rows.

- Employ descriptive column headers such as “Theme,” “Participant ID,” “Response,” and “Response Date” to clarify data categories.

- Ensure columns are responsive by applying CSS styles like `max-width` and `overflow-wrap` to maintain readability on various devices.

Organizing Insights for Comparison and Review

To facilitate effective comparison, organize insights into tables that group similar themes or responses. This enables stakeholders to analyze patterns across different participants or interview sessions at a glance. Well-structured tables help identify consistencies, discrepancies, and emerging trends, supporting informed decision-making.

- Create separate tables for each major theme or category, or include multiple themes within a single table with clear row divisions.

- Highlight key responses using bold formatting or background colors to draw attention to significant insights.

- Include a column for participant details such as age, role, or demographic information, providing context for responses and aiding in cross-group analysis.

“Structured data presentation using HTML tables enhances clarity, comparison, and insight extraction, making complex qualitative analysis accessible and actionable.”

Here is an illustrative example of an HTML table designed to display categorized interview data:

| Theme | Participant ID | Response | Response Date |
|---|---|---|---|
| Customer Satisfaction | P001 | “The service was prompt and courteous.” | 2023-10-12 |
| Product Feedback | P002 | “The product meets my expectations, but the setup process was complicated.” | 2023-10-13 |
| Service Improvements | P003 | “Adding more payment options would enhance convenience.” | 2023-10-14 |

This table format enables easy comparison across different themes, responses, and participants, making the analysis process more efficient and transparent.
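When results come out of an analysis script, the HTML for such a table can be generated rather than written by hand. The sketch below builds a table with `<thead>` and `<tbody>` from a list of records using Python's standard library. The function name `render_table` and the record layout are assumptions for this example.

```python
from html import escape

COLUMNS = ["Theme", "Participant ID", "Response", "Response Date"]


def render_table(rows, columns=COLUMNS):
    """Render a list of dicts as an HTML table with <thead> and <tbody>."""
    # Escape every value so quotes, ampersands, or angle brackets in
    # responses cannot break the markup.
    header_cells = "".join(f'<th scope="col">{escape(col)}</th>' for col in columns)
    body_rows = "\n".join(
        "    <tr>" + "".join(f"<td>{escape(str(row[col]))}</td>" for col in columns) + "</tr>"
        for row in rows
    )
    return (
        "<table>\n"
        "  <thead>\n"
        f"    <tr>{header_cells}</tr>\n"
        "  </thead>\n"
        "  <tbody>\n"
        f"{body_rows}\n"
        "  </tbody>\n"
        "</table>"
    )


rows = [
    {"Theme": "Customer Satisfaction", "Participant ID": "P001",
     "Response": "The service was prompt and courteous.", "Response Date": "2023-10-12"},
    {"Theme": "Product Feedback", "Participant ID": "P002",
     "Response": "The product meets my expectations, but the setup process was complicated.",
     "Response Date": "2023-10-13"},
    {"Theme": "Service Improvements", "Participant ID": "P003",
     "Response": "Adding more payment options would enhance convenience.",
     "Response Date": "2023-10-14"},
]
html_table = render_table(rows)
```

The `scope="col"` attribute on header cells is included for accessibility, in line with the guidance on informative headers above.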

Case Studies and Practical Applications

Applying AI-driven analysis of interview transcripts in real-world scenarios demonstrates the significant value and efficiency gains achievable through automation. These case studies illuminate how organizations leverage AI to enhance decision-making, streamline workflows, and extract deeper insights from qualitative data. Understanding these practical applications provides a clearer perspective on the tangible benefits and potential challenges encountered when integrating AI into interview analysis processes.

By examining various industries and contexts, stakeholders can better appreciate best practices, common pitfalls, and innovative solutions that have emerged from actual implementation experiences. This section highlights key examples where AI has transformed the approach to interview data, offering a roadmap for successful deployment in diverse organizational settings.

Real-World Scenarios of AI-Driven Transcript Analysis

Organizations across sectors such as market research, human resources, healthcare, and customer service have adopted AI-powered transcript analysis to enhance their decision-making processes. These case studies underscore the transformative impact of AI on qualitative data interpretation, from identifying nuanced sentiment shifts to uncovering emerging themes that might be overlooked manually.

| Scenario | Challenge | AI Solution | Outcome |
|---|---|---|---|
| Customer Service Feedback Analysis | High volume of customer interactions made manual analysis impractical and slow. | AI algorithms processed thousands of transcripts to detect common complaints, sentiment trends, and areas for service improvement. | Faster response to customer needs, targeted training initiatives, and improved satisfaction scores. |
| Recruitment and Candidate Evaluation | Manual review of interview responses was time-consuming and subject to bias. | Natural language processing (NLP) analyzed candidate responses for key competencies, cultural fit, and consistency. | More objective candidate assessments and reduced hiring cycle times. |
| Healthcare Patient Interviews | Difficulty in extracting relevant clinical and emotional information from lengthy interviews. | AI-driven transcript analysis identified critical symptoms, emotional cues, and patient concerns for better diagnosis and care planning. | Enhanced clinical insights and personalized patient care outcomes. |

Comparison of Manual and Automated Transcript Analysis

Manual analysis of interview transcripts is often labor-intensive, time-consuming, and susceptible to human biases. While it allows for nuanced interpretation and context-aware insights, the scale at which it can be performed is limited, especially with large datasets. Conversely, automated AI approaches offer speed, consistency, and scalability, enabling the processing of vast amounts of data with minimal human intervention. These benefits significantly enhance the throughput and reliability of analysis, particularly in high-volume environments.

“Automation does not replace the need for human judgment but complements it by handling repetitive tasks and revealing patterns otherwise difficult to detect.”

Reported comparisons suggest that AI-based analysis can reduce processing time by as much as 90% compared to manual methods while maintaining comparable accuracy in identifying key themes and sentiments, although results depend on the data, the task, and how the model is validated. Effective implementation still requires an initial investment in training, model tuning, and validation to ensure outputs align with organizational standards and contextual nuances.

Lessons Learned and Best Practices from Implementation

Organizations that have successfully integrated AI for interview transcript analysis share common lessons and strategic approaches. These insights serve as valuable guidelines for future projects:

- Start with clear objectives: Defining specific goals helps tailor AI solutions to meet organizational needs effectively.

- Invest in quality data preparation: Ensuring transcripts are accurately transcribed and formatted improves AI performance and analysis reliability.

- Choose appropriate AI tools and models: Selecting models suited for your domain, such as sentiment analysis or thematic clustering, enhances relevance and accuracy.

- Maintain human oversight: Combining AI outputs with expert review ensures contextual appropriateness and mitigates potential errors.

- Iterate and refine: Continuous feedback and model tuning improve system accuracy over time, adapting to evolving data and organizational contexts.

Implementing AI for interview analysis often reveals unforeseen challenges like data privacy concerns, integration complexities, and the need for staff training. Addressing these proactively through clear policies, stakeholder engagement, and ongoing training fosters smoother adoption. Ultimately, the most successful implementations balance technological investment with strategic planning and human expertise to maximize insights and impact.

Challenges and Considerations in AI Transcript Analysis

Analyzing interview transcripts with AI technology offers numerous advantages, including efficiency and consistency. However, it also presents several challenges that must be carefully addressed to ensure accurate and ethical outcomes. Recognizing the potential pitfalls and implementing appropriate strategies is essential for reliable analysis and maintaining the integrity of sensitive data.

AI-powered transcript analysis can be compromised by issues such as data bias, misinterpretation of context, and inaccuracies in transcription. These challenges can lead to skewed insights or misinformed decisions if not properly managed. Additionally, ethical considerations become paramount when dealing with personal or confidential interview data, requiring thoughtful approaches to privacy and consent.

Data Bias and Misinterpretation

Variability in training data can introduce bias, which may manifest as overrepresentation or underrepresentation of certain perspectives. This bias can influence AI outputs, leading to skewed interpretations that do not accurately reflect the interviewees’ intentions or experiences. Misinterpretation of context, sarcasm, or cultural nuances can further complicate analysis, resulting in superficial or incorrect conclusions.

To mitigate these issues, it is crucial to use diverse and representative datasets during model training. Employing human-in-the-loop validation processes helps identify and correct biased or misinterpreted outputs. Regularly updating models with new, relevant data ensures ongoing adaptation to evolving language patterns and cultural contexts.

Accuracy and Validation of AI Outputs

Ensuring the reliability of AI-derived insights requires systematic validation strategies. This involves cross-verifying AI outputs with manual review by subject matter experts or trained analysts. Establishing benchmarks and performance metrics, such as precision, recall, and F1 scores, can help quantify the accuracy of the analysis process.

Implementing feedback mechanisms allows continuous improvement of AI models. For example, after initial analysis, analysts can flag inaccuracies or ambiguities, enabling the model to learn from these corrections over time. Maintaining transparency about the limitations of AI tools fosters trust and facilitates better decision-making.
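To make the metrics concrete, the sketch below compares model labels against analyst labels for one class and computes precision, recall, and F1 from the true-positive, false-positive, and false-negative counts. The sample labels are invented for illustration.

```python
def precision_recall_f1(gold, predicted, positive_label):
    """Compute precision, recall, and F1 for one label against manual (gold) labels."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must have the same length")
    pairs = list(zip(gold, predicted))
    tp = sum(g == positive_label and p == positive_label for g, p in pairs)
    fp = sum(g != positive_label and p == positive_label for g, p in pairs)
    fn = sum(g == positive_label and p != positive_label for g, p in pairs)
    # Guard against division by zero when a label never occurs.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Invented example: analyst labels versus model output for six segments.
gold = ["negative", "negative", "positive", "neutral", "negative", "positive"]
predicted = ["negative", "positive", "positive", "negative", "negative", "neutral"]
precision, recall, f1 = precision_recall_f1(gold, predicted, "negative")
```

In this example the model finds two of the three segments that analysts marked negative and raises one false alarm, so precision, recall, and F1 for the negative class all come to two thirds.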

Ethical Considerations in Analyzing Sensitive Data

Handling interview data that contains personal, confidential, or sensitive information necessitates strict adherence to ethical standards. Securing informed consent from interviewees before data collection is fundamental. During analysis, data should be anonymized to protect individual identities, especially when sharing findings publicly or with third parties.

Respecting privacy rights involves implementing secure data storage solutions and restricting access to authorized personnel. Ethical review boards or compliance with data protection regulations, such as GDPR or HIPAA, provide additional safeguards. It is vital to balance the benefits of AI analysis with the responsibility to uphold confidentiality and respect for interviewees’ autonomy and dignity.
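A first pass at anonymization can be automated with pattern matching. The sketch below replaces e-mail addresses, phone-like numbers, and a supplied list of participant names with placeholders. The patterns and the name-to-pseudonym map are assumptions for illustration; this is a starting point only and does not by itself satisfy any regulation, so anonymized transcripts still need manual review.

```python
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Loose phone pattern: a digit, at least seven digits/separators, a final digit.
# It can also catch other long digit runs such as dates; tighten as needed.
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def anonymize(text: str, known_names: dict) -> str:
    """Replace e-mails, phone-like numbers, and known names with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    # Names must be supplied explicitly; this sketch does not detect them.
    for name, pseudonym in known_names.items():
        text = re.sub(rf"\b{re.escape(name)}\b", pseudonym, text)
    return text


redacted = anonymize(
    "Maria Lopez said to email maria.lopez@example.com or call +1 (555) 010-9999.",
    {"Maria Lopez": "P001"},
)
```

Replacing names with stable pseudonyms such as participant IDs, rather than deleting them, keeps responses from the same person linkable during analysis.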

Concluding Remarks

In conclusion, learning how to analyze interview transcripts with AI equips users with powerful tools to interpret qualitative data more effectively. Whether for research, HR, or market analysis, this methodology streamlines workflows while providing richer, more nuanced insights. Embracing AI-driven analysis can transform the way interview data informs strategic decisions and enhances understanding across various fields.